FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- DAX Commands and Tips

- Re: Help with optimizing DAX measure

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Help with optimizing DAX measure

Hello everybody,

I have a Fact table that contains 200Millions rows

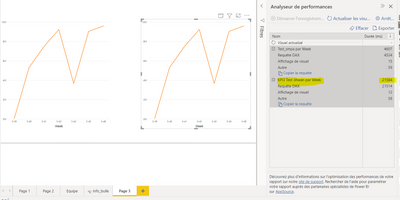

I need to optimize this measure to improve response times ,the calculation of my measures takes too much time to compute (6 to 7 seconds everytime you click on something. I tried to do it with two different formulas :

KPI 1 = SUMX(DISTINCT(SUMMARIZE(FILTER('Fact_Table','Fact_Table'[level]="Low"),'Fact_Table'[id_cv],'FACT_Table'[Server_id])),1)

KPI 2 = countrows(SUMMARIZE(FILTER('Fact_Table','Fact_Table'[level]="Low"),'Fact_Table'[id_cv],'Fact_Table'[Server_id]))

but the response time is almost the same which is 7 seconds.

anyone have a best prosposition?

Any help with this would be appreciated.

Thank you

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous

Unfortunately these don't look like particularly inefficient queries to me. (Obviously without seeing the real data).

The immediate thing that springs to mind is an aggregation table, so the query can be run on a table with much less than 200m rows. https://docs.microsoft.com/en-us/power-bi/transform-model/aggregations-advanced

However, you'll immediately hit a limitation of agg tables: your 'Fact Table' would need to be in DirectQuery mode. This article explains a workaround for that: https://dax.tips/2019/11/15/creative-aggs-part-vi-shadow-models/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Do any of these columns exist in a dimension table or are they all exclusive to the 'Fact_Table'? Summarizing over dimensions is likely faster.

Is your situation such that you could use SUMMARIZECOLUMNS here?

KPI =

COUNTROWS (

SUMMARIZECOLUMNS (

'Fact_Table'[id_cv],

'Fact_Table'[Server_id],

TREATAS ( { "Low" }, 'Fact_Table'[level] )

)

)

You could also try counting one or the other summarized columns using DISTINCTCOUNT or SUMX:

KPI 1 =

SUMX (

SUMMARIZECOLUMNS (

'Fact_Table'[id_cv],

TREATAS ( { "Low" }, 'Fact_Table'[level] ),

"@Rows", CALCULATE ( DISTINCTCOUNT ( 'Fact_Table'[Server_id] ) )

),

[@Rows]

)

KPI 2 =

SUMX (

SUMMARIZECOLUMNS (

'Fact_Table'[Server_id],

TREATAS ( { "Low" }, 'Fact_Table'[level] ),

"@Rows", CALCULATE ( SUMX ( VALUES ( 'Fact_Table'[id_cv] ), 1 ) )

),

[@Rows]

)- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous

Unfortunately these don't look like particularly inefficient queries to me. (Obviously without seeing the real data).

The immediate thing that springs to mind is an aggregation table, so the query can be run on a table with much less than 200m rows. https://docs.microsoft.com/en-us/power-bi/transform-model/aggregations-advanced

However, you'll immediately hit a limitation of agg tables: your 'Fact Table' would need to be in DirectQuery mode. This article explains a workaround for that: https://dax.tips/2019/11/15/creative-aggs-part-vi-shadow-models/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@PaulOlding @smpa01 : thank you both for your help i will try the solution with the aggregation table to decrease the data volume and reduce the response time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous I second with @PaulOlding; as much as any DAX enthusiast would love optimizing an inefficient measure, 6sec for a 200 mil dataset does not sound unreasonable unless you bring an aggregated table from the server and run the measure which will astronomically reduce the evaluation time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Please try the below.

KPI 2 =

COUNTROWS (

CALCULATETABLE (

SUMMARIZE ( 'Fact_Table', 'Fact_Table'[id_cv], 'Fact_Table'[Server_id] ),

FILTER ( 'Fact_Table', 'Fact_Table'[level] = "Low" )

)

)

If this post helps, then please consider accepting it as the solution to help other members find it faster, and give a big thumbs up.

Click here to visit my LinkedIn page

Click here to schedule a short Teams meeting to discuss your question.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous does this improve?

KPI 1 =

COUNTROWS (

FILTER (

SUMMARIZE (

'Fact_Table',

'Fact_Table'[level],

'Fact_Table'[id_cv],

'FACT_Table'[Server_id]

),

'Fact_Table'[level] = "Low"

)

)

or this

KPI1 =

COUNTROWS (

CALCULATETABLE (

SUMMARIZE ( 'Table', 'Table'[Server_id], 'Table'[id_cv], 'Table'[level] ),

KEEPFILTERS ( 'Table'[level] = "Low" )

)

)

@Anonymous please test out the above two

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@smpa01 thanks for your answer.

Unfortunately no, the response time is the same: 6 seconds

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!

| User | Count |

|---|---|

| 19 | |

| 13 | |

| 8 | |

| 4 | |

| 4 |

| User | Count |

|---|---|

| 29 | |

| 19 | |

| 18 | |

| 11 | |

| 10 |