
Power BI Embedded & maximum size of datasets

Hi,

I have a workspace that is assigned to an A1 Embedded capacity (Gen2). I'm having a hard time figuring out what the maximum dataset size is.

Question 1: With the "Large datasets" option disabled, the maximum size is 1 GB, correct? Even if I have a PPU license.

Question 2: With the "Large datasets" option enabled, the maximum size is 10 GB, correct? Even if I have a PPU license.

Question 3: The maximum size can grow up to 100 GB only if I assign the workspace to the PPU capacity (and in that case I will lose the embedded features of the Embedded SKU), correct?

Thank you all.


Hi @Strizzolo ,

Answer 1:

With the A1 SKU, a dataset published to the Service cannot exceed 1 GB.

Answer 2:

The upload size limit is unaffected by the large dataset storage format. With large dataset storage format, the dataset size is limited only by the Power BI Premium capacity size.

Previously, datasets in Power BI Premium were limited to 10 GB after compression. With large models, that limitation is removed and dataset sizes are limited only by the capacity size, or by a maximum size set by the administrator.

Answer 3:

Premium capacity storage is set to 100 TB per capacity node.

As far as I know, no dataset is stored within Power BI Embedded itself.

Reports, dashboards, and visuals are all stored on the Power BI Service side (the server side, or back end). Power BI Embedded simply retrieves the report from the Power BI Service and makes it available in your own application, that is, the end-user interface.

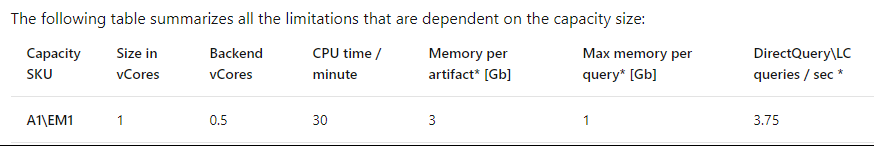

In Gen2, there is no memory limit for the capacity as a whole. Instead, individual artifacts (such as datasets, dataflows, and paginated reports) are subject to the following RAM limitation:

- A single artifact cannot exceed the amount of memory the capacity SKU offers.

The limitation includes all the operations (interactive and background) being processed for the artifact while in use (for example, while a report is being viewed, interacted with, or refreshed).

Dataset operations like queries are also subject to individual memory limits, just as they are in the first version of Premium.

What is Microsoft Power BI Premium? - Power BI | Microsoft Docs

If the problem is still not resolved, please provide detailed error information or describe the result you expect. I look forward to your reply.

Best Regards,

Winniz

If this post helps, please consider accepting it as the solution to help other members find it more quickly.


Thanks,

it was very helpful!

I have just a few more questions:

Question 1: The documentation says that the memory limit per artifact for an A1 SKU is 3 GB, so why do you say that the limit is 1 GB?

Question 2: If I enable "Large datasets", can I exceed the memory limit per artifact? Does that apply to A1 too?


Hi @Strizzolo ,

Answer 1:

1 GB is the size limit for a PBIX file created in Power BI Desktop to be published to the Service.

When you embed the report with Power BI Embedded and a query is generated, Power BI loads the dataset into memory for subsequent queries. At that point, a single dataset loaded into memory cannot exceed 3 GB, which is the RAM limit of the A1 SKU.

Answer 2:

Even if you enable "Large datasets", a dataset published from your local machine to the Power BI Service still cannot exceed 1 GB.

In the Service, however, the dataset can grow through refresh up to a maximum of 100 TB, which is the storage of the one capacity node that comes with the A1 SKU.

If the problem is still not resolved, please provide detailed error information or describe the result you expect. I look forward to your reply.

Best Regards,

Winniz

If this post helps, please consider accepting it as the solution to help other members find it more quickly.


Last question I promise 😄 (thanks in advance)

Is it correct to say that, if I have Large Datasets enabled, my dataset can grow (for example) up to 100TB but it is still limited to 3GB in memory?

(This is only a theoretical question, in this case I would of course use another type of SKU!)


The answer is no, I am obviously limited to the memory assigned to me. If I want to grow my dataset, I also have to grow my SKU. For example, if I have a dataset whose size is 90GB, I have to use a P3/A6 SKU (and enable Large datasets because the model is >10GB). Thanks!
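To make that sizing rule concrete, here is a minimal Python sketch of picking the smallest SKU whose per-artifact memory limit covers a given dataset size. The 3 GB figure for A1 and the A6/P3 choice for a 90 GB model come from this thread; the other per-SKU limits are what I recall from Microsoft's Gen2 capacity documentation and should be checked against the current docs. The function names and the table are hypothetical helpers, not part of any Power BI API.

```python
# Assumed per-artifact memory limits in GB for Embedded Gen2 SKUs.
# Only the A1 value (3 GB) is stated in this thread; verify the rest.
MAX_MEMORY_GB = {"A1": 3, "A2": 5, "A3": 10, "A4": 25, "A5": 50, "A6": 100}

# Premium SKUs with the same limits as the larger Embedded SKUs.
PREMIUM_EQUIVALENT = {"A4": "P1", "A5": "P2", "A6": "P3"}

# Models above this size need the "Large datasets" storage format.
LARGE_DATASET_THRESHOLD_GB = 10


def smallest_sku_for(dataset_gb):
    """Return the smallest SKU whose memory limit covers the dataset, or None."""
    for sku, limit_gb in sorted(MAX_MEMORY_GB.items(), key=lambda kv: kv[1]):
        if dataset_gb <= limit_gb:
            return sku
    return None


def needs_large_dataset_format(dataset_gb):
    """True when the model is larger than the 10 GB default limit."""
    return dataset_gb > LARGE_DATASET_THRESHOLD_GB


if __name__ == "__main__":
    size_gb = 90
    sku = smallest_sku_for(size_gb)
    print(sku, PREMIUM_EQUIVALENT.get(sku), needs_large_dataset_format(size_gb))
```

Keep in mind that the memory limit covers every operation running against the artifact, including refreshes and queries, so in practice you would want headroom above the raw dataset size rather than sizing exactly to the limit.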
