FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Learn from the best! Meet the four finalists headed to the FINALS of the Power BI Dataviz World Championships! Register now

- Power BI forums

- Forums

- Get Help with Power BI

- Report Server

- Question about scheduled refresh

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Question about scheduled refresh

Hi all,

I have a quick question about the scheduled refresh of reports in 'import mode' deployed to a Report Server. FYI, we have obtained Power BI Report Server as part of a purchase of SQL Server Enterprise with active Software Assurance (SA). We retrieve our data from a single SQL Server database.

Now, I'm questioning my decision to create reports in 'import mode' because of limitations in the scheduled data refresh. I'm confused by the following:

- Power BI Premium has a scheduled refresh limit of 48 times a day

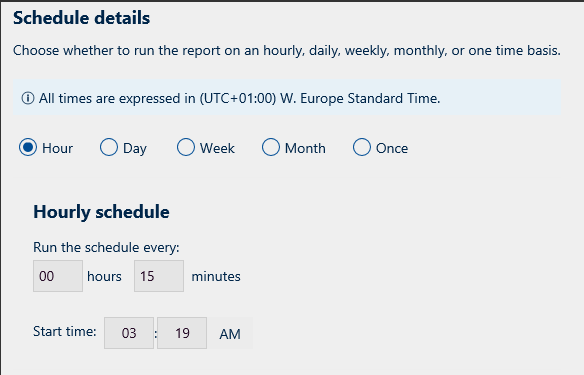

- I've seen examples where people are able to set the scheduled refresh at a minimum of 1 minute (15 minutes in the screenshot below):

Now what situation am I in? I would really like to be in the second situation. 🙂

In any case, 48 times a day would be a questionable frequency since my end-users prefer a report that's updated once a minute or every few minutes. I assume that would require me to create reports in DirectQuery mode (or Live?).

Advice is very welcome. 🙂

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If your users absolutely cannot have the data be older than 1 minute then you should build your report using Direct Query against a database. Note that you will need to make sure that your database has appropriate resources and is appropriately tuned to handle the additional query load, but this sort of low latency requirement is one of the use cases that Direct Query was designed to address.

A live connection probably would not help as you would still need to load the data into a SSAS Tabular model, but it might be an option if you could make use of an incremental processing stategy in order to reduce your processing time. (since PBIRS currently does not support any form of incremental processing)

As a rough rule of thumb once you get under 10-15 minutes for a refresh you are getting into the range where the cost of constantly loading and compressing the data could outway the benefits. If you have large data volumes or a high number of user queries you may need to push these times out, or if you have small datasets you might be able to get away with a smaller window.

You may even want to consider 2 models, one that is larger and more complex and has more historical data but is updated less frequently and a second that is in Direct Query mode that may be simpler and only have access to more recent data (to keep the queries small and fast). It depends on your requirements, I just wanted to point out that you may not have to solve all your reporting needs in a single data model.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

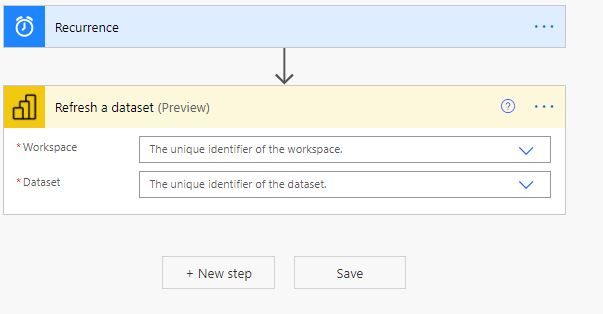

@SYM1986 I don't believe you can get Power Automate to trigger actions on an on premise Report Server. I believe this only works for the Power BI cloud service

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

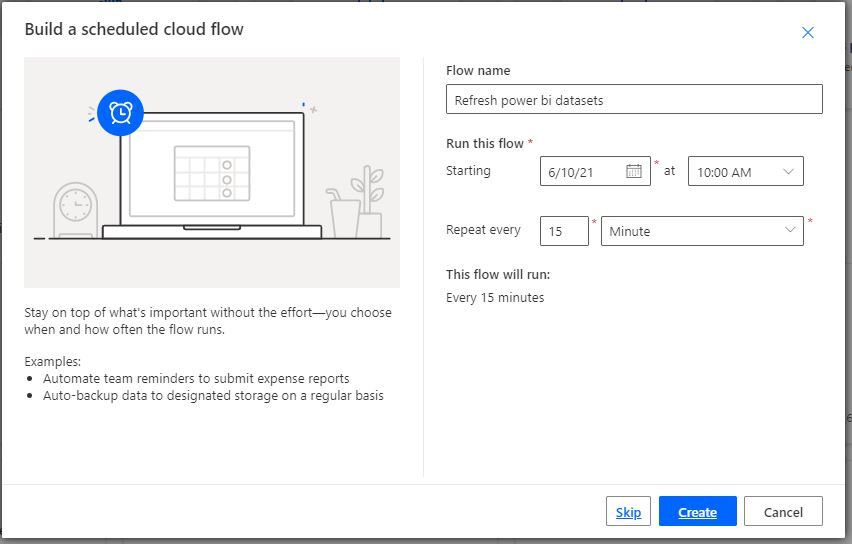

Hi @Anonymous ,i thinks you can use PowerAutomate to do this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If your users absolutely cannot have the data be older than 1 minute then you should build your report using Direct Query against a database. Note that you will need to make sure that your database has appropriate resources and is appropriately tuned to handle the additional query load, but this sort of low latency requirement is one of the use cases that Direct Query was designed to address.

A live connection probably would not help as you would still need to load the data into a SSAS Tabular model, but it might be an option if you could make use of an incremental processing stategy in order to reduce your processing time. (since PBIRS currently does not support any form of incremental processing)

As a rough rule of thumb once you get under 10-15 minutes for a refresh you are getting into the range where the cost of constantly loading and compressing the data could outway the benefits. If you have large data volumes or a high number of user queries you may need to push these times out, or if you have small datasets you might be able to get away with a smaller window.

You may even want to consider 2 models, one that is larger and more complex and has more historical data but is updated less frequently and a second that is in Direct Query mode that may be simpler and only have access to more recent data (to keep the queries small and fast). It depends on your requirements, I just wanted to point out that you may not have to solve all your reporting needs in a single data model.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@d_gosbell Thanks for your elaborate answer! I will take it all into consideration.

Helpful resources

Join our Fabric User Panel

Share feedback directly with Fabric product managers, participate in targeted research studies and influence the Fabric roadmap.

Power BI Monthly Update - February 2026

Check out the February 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 6 | |

| 5 | |

| 4 | |

| 3 | |

| 3 |