
What is generally faster - Table.ReplaceValue or Table.TransformColumns, for multiple replacements?

I’ve got a question: if I need to do several replacements in a table column, what is the fastest way to do that, by design? (It does NOT matter that one way allows more types of transformations.)


@andreyminakov,

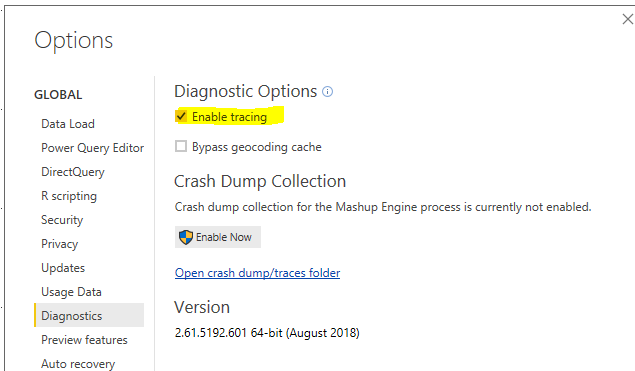

You can analyze the power query performance by using trace log. See https://www.excelando.co.il/en/analyzing-power-query-performance-source-large-files/.

Regards,

Lydia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@andreyminakov,

It depends on your data. How would you replace the values in the column? To optimize multiple replacements, you can combine the Table.ReplaceValue and Table.TransformColumns functions.

There is a similar thread for your reference:

https://community.powerbi.com/t5/Desktop/Optimizing-multiple-replacements/td-p/102389

Regards,

Lydia


1. Multiple calls of Table.ReplaceValue (it's possible to make these calls through List.Generate, but I guess that doesn't influence the execution time).

    Table.ReplaceValue(Source, ".", "", Replacer.ReplaceText, Table.ColumnNames(Source))
    Table.ReplaceValue(Source, ",", "", Replacer.ReplaceText, Table.ColumnNames(Source))
    ...

2. A call of Table.TransformColumns

    Table.TransformColumns(
        Source,
        List.Zip({
            Table.ColumnNames(Source),
            List.Repeat(
                {each Text.Remove(_, Text.ToList(".:;?!<>@#$%^&*=+"))},
                Table.ColumnCount(Source))
        })
    )

3. A call of a custom function in Table.ReplaceValue

    Table.ReplaceValue(
        Source,
        ".:;?!<>@#$%^&*=+",
        "",
        (x, y, z) => List.Accumulate(
            Text.ToList(y),
            x,
            (s, c) => Text.Remove(s, c)
        ),
        Table.ColumnNames(Source)
    )

And the question is: which is quicker by the design of PQ?
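For reference, the repeated Table.ReplaceValue calls from the first option can be chained over a list instead of being written out one by one. This is only a minimal sketch: it uses List.Accumulate in place of the List.Generate mentioned above, and the sample table and the `Replacements` list are hypothetical, not taken from the real data.

```
let
    // Hypothetical sample data; every column is assumed to hold text
    Source = Table.FromRecords({[A = "1.000,5", B = "a.b,c"]}),

    // Hypothetical list of substrings to strip from every column
    Replacements = {".", ","},

    // Fold over the list: each step feeds the previous result into Table.ReplaceValue
    Result = List.Accumulate(
        Replacements,
        Source,
        (tbl, old) => Table.ReplaceValue(tbl, old, "", Replacer.ReplaceText, Table.ColumnNames(tbl))
    )
in
    Result
```

This only shortens the code. Each replacement is still a separate Table.ReplaceValue step, so it says nothing by itself about which option is faster.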


@andreyminakov,

You can analyze Power Query performance by using the trace log. See https://www.excelando.co.il/en/analyzing-power-query-performance-source-large-files/.

Regards,

Lydia
