Parallel Execution

Hi,

This is several questions in one.

I know we have two methods of parallel execution: one is mssparkutils.notebook.runMultiple and the other is the ForEach activity in a pipeline.

Am I correct in assuming that runMultiple uses the high concurrency mode, which needs to be enabled in the workspace, while pipelines are not yet able to do so?

Both of them can hit capacity-related errors. I'm testing with a pipeline, and 5 parallel executions already generate errors in my environment. How can we calculate how many parallel executions are possible in each of these two scenarios?

Thank you in advance !

Hi @DennesTorres,

High concurrency mode in Spark lets you share the Spark compute so that notebooks start almost instantly instead of waiting for new compute to spin up for each notebook. runMultiple uses the compute engine's multi-threading to run the different notebooks. In a way, both of them help achieve high concurrency using the same compute power.

The high concurrency mode that you enable in Spark is, at this moment, meant more for interactive work. So let's say you are working in many notebooks in parallel and you want them to share the same compute so that you can start instantly and save costs: high concurrency mode is the way to go. To do the same in code, you use runMultiple, which takes the same approach.

It is true that pipelines don't run concurrent executions in the same session. But it is on the roadmap: https://learn.microsoft.com/en-us/fabric/release-plan/data-engineering#concurrency

So for now, as you mentioned, we can use a pipeline to run a notebook that uses runMultiple to run the different notebooks and achieve concurrency.
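
As a rough illustration of the approach described above, here is a minimal sketch of an orchestrator notebook that a pipeline could call. The notebook names are hypothetical, and the DAG field names (`activities`, `name`, `path`, `args`, `concurrency`) are written as I understand them from the notebook utilities documentation, so please verify them against the current docs before relying on this.

```python
from typing import Any


def build_dag(notebook_paths: list[str], concurrency: int = 5) -> dict[str, Any]:
    """Build a runMultiple-style DAG with one independent activity per notebook."""
    activities = [
        {
            "name": path,  # activity name (assumed to have to be unique)
            "path": path,  # notebook to run
            "args": {},    # parameters passed to the child notebook
        }
        for path in notebook_paths
    ]
    return {"activities": activities, "concurrency": concurrency}


# Hypothetical notebook names, for illustration only.
dag = build_dag(["nb_load_customers", "nb_load_orders", "nb_load_products"])

# mssparkutils is only predefined inside a Fabric/Synapse notebook session,
# so the call is guarded to keep this sketch runnable elsewhere.
runner = globals().get("mssparkutils")
if runner is not None:
    results = runner.notebook.runMultiple(dag)
```

All child notebooks then run in the session of the orchestrator notebook, so the pipeline only needs a single notebook activity.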

Hi,

I got an explanation from support about the never-ending session.

According to them, a runMultiple DAG should never be used with more than 50 activities. Strange things can happen if we try, such as the session never ending.

Kind Regards,

Dennes
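
One possible way to stay under the limit reported above is to split a large activity list into several DAGs of at most 50 activities and run them one after another. This is only a sketch: it assumes the activities have no dependencies across batches, and the activity contents (`task_<n>`, `nb_worker`, `task_id`) are made up for illustration.

```python
from typing import Any, Iterator

MAX_ACTIVITIES_PER_DAG = 50  # limit reported by support in this thread


def chunk_activities(
    activities: list[dict[str, Any]], size: int = MAX_ACTIVITIES_PER_DAG
) -> Iterator[list[dict[str, Any]]]:
    """Yield consecutive slices of at most `size` activities."""
    for start in range(0, len(activities), size):
        yield activities[start:start + size]


def build_dags(
    activities: list[dict[str, Any]], concurrency: int = 10
) -> list[dict[str, Any]]:
    """Wrap each batch in its own DAG dictionary."""
    return [
        {"activities": batch, "concurrency": concurrency}
        for batch in chunk_activities(activities)
    ]


# Hypothetical workload: 1000 runs of the same worker notebook.
activities = [
    {"name": f"task_{i}", "path": "nb_worker", "args": {"task_id": i}}
    for i in range(1000)
]
dags = build_dags(activities)

# Only runs inside a Fabric/Synapse notebook, where mssparkutils is predefined.
runner = globals().get("mssparkutils")
if runner is not None:
    for dag in dags:
        runner.notebook.runMultiple(dag)  # one batch at a time
```

Running the batches sequentially trades some parallelism for DAGs that stay within the supported size.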

Hey @DennesTorres,

Try adding a timeout argument to the activity.
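
A sketch of what that suggestion might look like: a small helper that fills in timeouts on a DAG dictionary before it is passed to runMultiple. The field names `timeoutPerCellInSeconds` (per activity) and `timeoutInSeconds` (whole DAG) are my understanding of the documented options and should be checked against the current docs; the helper name and the default values are assumptions.

```python
import copy
from typing import Any


def with_timeouts(
    dag: dict[str, Any], per_cell_seconds: int = 300, total_seconds: int = 3600
) -> dict[str, Any]:
    """Return a copy of `dag` with timeouts filled in where they are missing."""
    bounded = copy.deepcopy(dag)  # leave the caller's DAG untouched
    for activity in bounded["activities"]:
        # Assumed field name: maximum time allowed for each cell of the notebook.
        activity.setdefault("timeoutPerCellInSeconds", per_cell_seconds)
    # Assumed field name: maximum time allowed for the whole DAG.
    bounded.setdefault("timeoutInSeconds", total_seconds)
    return bounded


# Hypothetical two-notebook DAG; the second activity already sets its own timeout.
dag = {
    "activities": [
        {"name": "nb_a", "path": "nb_a"},
        {"name": "nb_b", "path": "nb_b", "timeoutPerCellInSeconds": 60},
    ]
}
bounded_dag = with_timeouts(dag)
```

Values already present are kept, so individual activities can still override the defaults.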

Hi,

Thank you. This matches with my findings as well, but I was a bit confuse because I found articles talking about adjusting the session DOP for the runmultiple, which didn't make sense. For the runmultiple, biggest is the pool, the better.

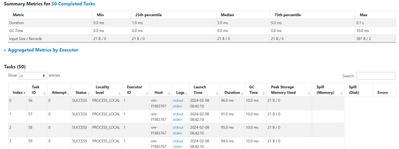

On the other hand, on my tests with runmultiple, I had two problems: First, it only reaches the maximum resources made available close to the "end" of the script, and I don't understand why. I don't know what to look into the logs to find the reason and fix it.

Second, it never ends. It continues to run continously for hours until I cancel the session. Am I missing some end statement? I'm using mssparkutil.notebook.exit .

The image below illustrates the problems:

Thank you in advance!

Dennes

Hi @DennesTorres,

In the Spark history server, we can look at the different types of logs.

From there, we can go into each stage and see how each partition gets executed.

Debugging a Spark application for performance is a lot harder, but this might be a good place to start.

Do you use notebook.exit in all the notebooks?

Hi,

I managed to get a new error message!

After I decided to join the code blocks again and run one more execution, runMultiple finally, for the first time, listed the execution status of the tasks, as many articles said it should.

A few tasks executed successfully, but most of them got the following error: "Fetch notebook content for 'notebook name here' failed with exception: Timeout waiting for connection from pool."

I'm not sure what the cause is or how to solve it.

Kind Regards,

Dennes

Hi,

Yes, all the notebooks use notebook.exit.

I tried something different: I created a new code block (there was only one before). I left the DAG creation in the first code block, and in the second code block I included a single statement, the runMultiple call.

The result was absolutely terrible. First, it allocated only 1 executor (8 instances). When everything was in a single code block, it went up to 40 instances.

Second, the execution hung around job number 450. When everything was in a single code block, it hung around 950.

I have checked the Spark UI and the logs, but I can't figure out the meaning of, or reason for, most of it.

Kind Regards,

Dennes

Hi @DennesTorres ,

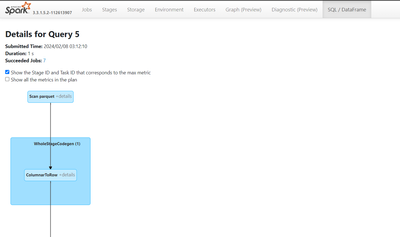

I will need more information from the Spark UI to debug this. Usually we look at the Spark DAG to understand what is happening under each job and try to figure out the best way to resolve it.

Hi,

When you say Spark DAG, do you mean the one I generated as a parameter, or something I should extract from the Spark UI?

Kind Regards,

Dennes

Hi @DennesTorres,

It is the one that we get from Spark UI. This is the one I am talking about. This provides a way to understand how exactly Spark executes the query and where it is taking too long.

Hi @DennesTorres

We haven’t heard from you since the last response and are just checking back to see whether you have a resolution yet. If not, please reply with more details and we will try to help.

Thanks

Hi,

No, I don't have a solution yet. I opened a support case (2402080050003234), but any help is welcome.

The problem with the DAG in the Spark UI is that I'm feeding runMultiple more than 1000 activities. The execution gets stuck sometimes around 950, sometimes later. I can see this in the history and open the Spark UI for each one of them, and each one has its own DAG.

As a result, I get lost about which execution/DAG I should look at.

Kind Regards,

Dennes
