Error DeltaTableIsInfrequentlyCheckpointed when accessing lakehouse table

I have no idea how it got into this state. Any help with fixing this would be appreciated.

I'm getting this error when I try to view a table in a lakehouse or manually run a vacuum:

Delta table 'zsd_ship' has atleast '100' transaction logs, since last checkpoint. For performance reasons, it is recommended to regularly checkpoint the delta table more frequently than every '100' transactions. As a workaround, please use SQL or Spark to retrieve table schema.

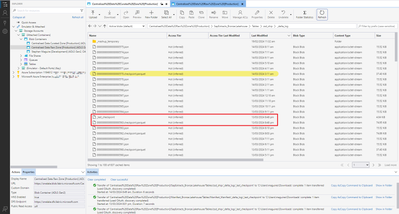

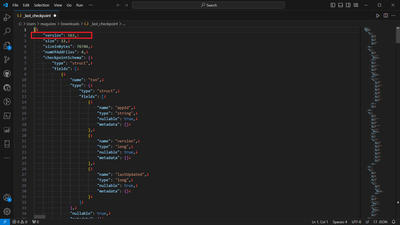

The "_last_checkpoint" file contents are:

{"version":10,"size":4}

However, the latest checkpoint file is "00000000000000000340.checkpoint.parquet".

Can I just change the version in the "_last_checkpoint" file?
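
Before editing anything by hand, it may help to confirm the mismatch programmatically. Below is a minimal sketch, not an official tool: it compares the version recorded in "_last_checkpoint" with the highest-numbered checkpoint file in the same "_delta_log" folder. The object and method names are made up for illustration, the regex only covers the classic `<20-digit version>.checkpoint.parquet` naming (plus multi-part files), and the path in the usage comment assumes the default lakehouse is mounted at `/lakehouse/default`.

```scala
import java.io.File
import scala.io.Source
import scala.util.Try

// Hypothetical helper (names are illustrative, not part of Delta Lake or Fabric).
object CheckpointPointerCheck {
  // Classic checkpoint names: <20-digit version>.checkpoint.parquet,
  // optionally with multi-part segments before "parquet".
  private val CheckpointFile = """(\d{20})\.checkpoint\.(?:\d+\.\d+\.)?parquet""".r
  // Pulls the number out of {"version":10,...} without a JSON library.
  private val VersionField = "\"version\"\\s*:\\s*(\\d+)".r

  /** Highest version that has a checkpoint file in the given _delta_log folder. */
  def latestCheckpointOnDisk(deltaLogDir: File): Option[Long] = {
    val names = Option(deltaLogDir.listFiles()).getOrElse(Array.empty[File]).map(_.getName)
    val versions = names.collect { case CheckpointFile(v) => v.toLong }
    if (versions.isEmpty) None else Some(versions.max)
  }

  /** Version recorded in the _last_checkpoint pointer file, if it can be read. */
  def pointerVersion(deltaLogDir: File): Option[Long] =
    Try {
      val src = Source.fromFile(new File(deltaLogDir, "_last_checkpoint"), "UTF-8")
      try src.mkString finally src.close()
    }.toOption.flatMap(text => VersionField.findFirstMatchIn(text).map(_.group(1).toLong))

  /** True when a newer checkpoint file exists than the one the pointer names. */
  def pointerIsStale(deltaLogDir: File): Boolean =
    (pointerVersion(deltaLogDir), latestCheckpointOnDisk(deltaLogDir)) match {
      case (Some(pointer), Some(onDisk)) => pointer < onDisk
      case (None, Some(_))               => true
      case _                             => false
    }
}

// Usage in a notebook cell (the mount path is an assumption):
// val logDir = new File("/lakehouse/default/Tables/zsd_ship/_delta_log")
// println(CheckpointPointerCheck.pointerIsStale(logDir))
```

With the values from this post (pointer at version 10, checkpoint file at version 340), `pointerIsStale` should return true.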

I received a support call yesterday and they had me run this code in a notebook, then refresh the lakehouse table that had the error, and it worked. Maybe it will work for you. Good luck!

    %%spark
    import org.apache.spark.sql.delta.DeltaLog

    // Force a new checkpoint for the table; replace the path with your own table name
    DeltaLog.forTable(spark, "Tables/yourtablenamehere").checkpoint()

Thanks very much for this code. I was able to do some testing on my end with a dataflow that I have running hourly.

@v-gchenna-msft It looks to me like the "_last_checkpoint" file isn't being updated when the automatic checkpoints are created.

However, running the code from @kblackburn is updating the "_last_checkpoint" file to point to the newly created checkpoint.

So, it would seem that regularly running the below code in a notebook (which will update all tables for all lakehouses in the same workspace as the notebook's default lakehouse) is the workaround until this is resolved.

    %%spark
    import org.apache.spark.sql.delta.DeltaLog

    // Every lakehouse in the same workspace as the notebook's default lakehouse
    val lakehouses = spark.catalog.listDatabases()

    lakehouses.collect().sortWith(_.name < _.name).foreach { lakehouse =>
      // Skip the Dataflows staging lakehouse
      if (lakehouse.name != "DataflowsStagingLakehouse") {
        val tables = spark.catalog.listTables(lakehouse.name)
        tables.collect().sortWith(_.name < _.name).foreach { table =>
          // Write a checkpoint for the table at <lakehouse location>/<table name>
          DeltaLog.forTable(spark, s"${lakehouse.locationUri}/${table.name}").checkpoint()
          println(s"Completed code run for lakehouse: ${lakehouse.name}, table: ${table.name}")
        }
      }
    }

Thanks, kblackburn! This is a good workaround. Thanks for sharing! I hope they'll fix this soon. Is there any news on a fix?

This worked for me.

For anyone getting an error on the org.apache.spark.sql.delta.DeltaLog import, make sure you include the first line "%%spark", which indicates that this is Scala code (not PySpark).

@kblackburn I have hundreds of tables with this issue. Is there an easy way to have this code run for every table in my lakehouse? I am not very familiar with Scala, so your help would be appreciated.

This will iterate through all tables for all lakehouses (except the dataflows staging one) in the same workspace as the default lakehouse for the notebook.

    %%spark
    import org.apache.spark.sql.delta.DeltaLog

    // Every lakehouse in the same workspace as the notebook's default lakehouse
    val lakehouses = spark.catalog.listDatabases()

    lakehouses.collect().sortWith(_.name < _.name).foreach { lakehouse =>
      // Skip the Dataflows staging lakehouse
      if (lakehouse.name != "DataflowsStagingLakehouse") {
        val tables = spark.catalog.listTables(lakehouse.name)
        tables.collect().sortWith(_.name < _.name).foreach { table =>
          // Write a checkpoint for the table at <lakehouse location>/<table name>
          DeltaLog.forTable(spark, s"${lakehouse.locationUri}/${table.name}").checkpoint()
          println(s"Completed code run for lakehouse: ${lakehouse.name}, table: ${table.name}")
        }
      }
    }
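
With hundreds of tables, a single table that fails to checkpoint would stop the loop above partway through. One possible variation, sketched here under assumptions rather than tested in Fabric, is to record the outcome per table and keep going. `CheckpointBatch` and its methods are hypothetical names; the checkpoint action is passed in as a function so the helper itself has no Spark dependency.

```scala
import scala.util.{Failure, Try}

// Hypothetical helper (illustrative names, not a Delta Lake or Fabric API).
object CheckpointBatch {
  /**
   * Runs `checkpoint` once per table path and records the outcome for each,
   * so a non-fatal error on one table does not abort the remaining tables.
   */
  def run(tablePaths: Seq[String])(checkpoint: String => Unit): Seq[(String, Try[Unit])] =
    tablePaths.map(path => path -> Try(checkpoint(path)))

  /** Paths whose checkpoint attempt threw, paired with the error message. */
  def failures(results: Seq[(String, Try[Unit])]): Seq[(String, String)] =
    results.collect { case (path, Failure(e)) => path -> String.valueOf(e.getMessage) }
}

// Usage in a notebook cell, assuming `spark` and the DeltaLog import from the
// snippet above are available:
// val paths = spark.catalog.listDatabases().collect().toSeq
//   .filter(_.name != "DataflowsStagingLakehouse")
//   .flatMap(db => spark.catalog.listTables(db.name).collect().toSeq
//     .map(t => s"${db.locationUri}/${t.name}"))
// val results = CheckpointBatch.run(paths)(p => DeltaLog.forTable(spark, p).checkpoint())
// CheckpointBatch.failures(results).foreach { case (p, msg) => println(s"FAILED $p: $msg") }
```

Printing the failures at the end gives a short list of tables to retry or investigate instead of a stack trace from the first bad one.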

Hello. I submitted a ticket yesterday. I'll update here when I have some additional insight. As a follow-up, after a week or so, I was able to run optimize and vacuum successfully, but the SQL endpoint and semantic model are not updating and are out of sync with the lakehouse.

Is there any news on this topic? I'm having the same issue.

I am having the same issue. I noticed that my Gen2 flow had not appended any data to my lakehouse table in several days. Today is the first day I've seen the error message. I can also confirm that if you create a new table, the flow works and the lakehouse table is rendered without error.

Same issue here.

@v-gchenna-msft I am seeing the exact same issue. It is appearing on older tables that were loaded via Dataflows Gen2. The message just started appearing in the past few days.

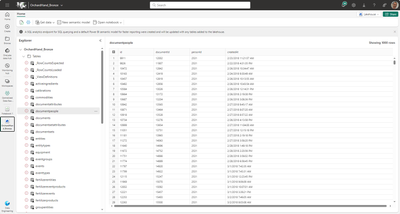

We've been experiencing the same thing for the past 5 days. The lakehouse we are seeing this issue in has close to 40 Delta tables. This error is only occurring on the older Delta tables that have had Dataflow Gen2 flows loading data to them for the last 30 days. I've created some newer Delta tables in the last couple of days. The new tables aren't having the same issue.

Hi @samaguire ,

Thanks for using Fabric Community.

Can you please help me understand the issue?

What was the last operation you performed, and since when have you been facing this issue?

What was the error you encountered while using the VACUUM command?

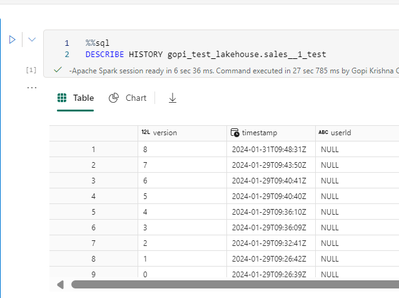

Try to share the output of this command -

It would be helpful if you could share a few screenshots.

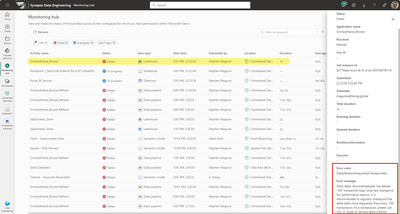

I nuked the original table and recreated it (problem gone). However, another lakehouse that we run the same way is having this issue as well.

The lakehouse is updated via a Gen2 Dataflow that runs hourly and replaces the tables.

Every table in the lakehouse shows this error message when you hover over the red exclamation mark on the table.

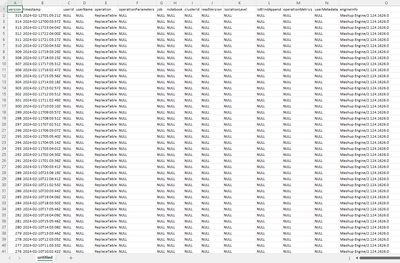

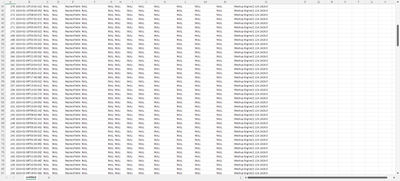

Results of the Spark SQL for the last ~115 rows:

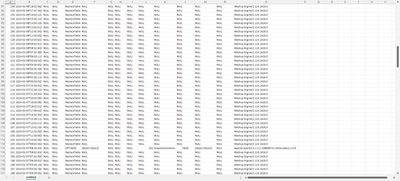

Results of the failed manual table vacuum

Hi @samaguire , @audrianna21

Thanks for using Fabric community.

Apologies for the issue you have been facing. Are you still facing this issue?

It's difficult to tell what could be the reason for this behavior.

If the issue still persists, please reach out to our support team so they can do a more thorough investigation into why this is happening. If it's a bug, we would definitely like to know so we can properly address it.

Please go ahead and raise a support ticket to reach our support team:

https://support.fabric.microsoft.com/support

After creating a support ticket, please provide the ticket number, as it will help us track the issue.

Thank you.

I opened a support ticket yesterday. Someone contacted me to say they would look into it and get back to me. I haven't heard back.

I can confirm that if you delete the table and recreate it, the error goes away, but that's not a feasible option for me.

Hi @audrianna21 ,

Can you please share the support ticket number? I will try to get updates from the team internally.

The support request ID is: 2402120040003273.

Hi @audrianna21 ,

I haven't received any updates internally. If there is any update, I will share it here.

Thank you