Share your thoughts on Power BI Project files (PBIP) and Fabric Git Integration

Hit Reply to tell us about your experience with Power BI Project files (PBIP) and Fabric Git Integration for Power BI Semantic Models and Reports so we can continue to improve.

For example:

- What changes would you like to see?

- What are your main challenges/issues?

- Any suggestions for additional settings or capabilities?

Thanks,

-Power BI team

Thanks for reporting. It's a bug, and a fix will roll out in the next few days.

Thanks. This seems to be fixed now in the latest version.

I would like Git integration built into Power BI Desktop for easier collaboration.

That said, exclusive locks would then be super awesome, so that multiple people cannot work on the same file at once.

It would also be nice to be able to turn off creation of cache.abf in PBIP, because it consumes a lot of storage space on a shared Teams/OneDrive location (because of the automatic versioning).

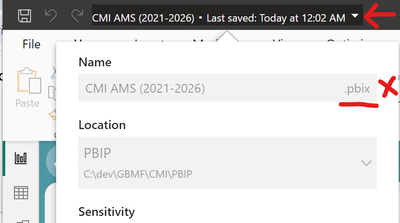

I noticed that after I save a PBIX file in PBIP format, the pop-up accessed from the top of the Power BI Desktop window frame still shows ".pbix" next to the file name.

As this is the only place I am aware of to get this info ("which file do I have open?"), it seems important to get it right.

We fixed this in the March release with the new developer mode flyout. Any thoughts or feedback on that?

Yes that looks great - thanks.

But now you've raised the bar, and the old flyout (for PBIX files) is missing a link to the Location 😄

I have a fairly large PBIX (150MB) which is also fairly complex (110+ tables, 40+ pages). On my laptop it opens in 3 minutes from PBIX format, but 4 minutes from PBIP+TMDL format.

Not a showstopper by any means, but perhaps a step in the wrong direction. I imagined it would be a bit faster in PBIP+TMDL format, since there is no need to unzip a large file at the start, the files could be read with parallel I/O, etc.

We are aware of some performance issues when opening and saving PBIP compared with PBIX, and we will improve this in upcoming releases so that PBIP is as fast as or faster than PBIX. The reason PBIP is still as slow as PBIX is the save of the cache.abf file.

Thanks for the feedback.

Very happy to see the product moving in this direction. I've been using external tools to do similar things for a while, but I always prefer supported formats. This should also encourage further external tool development.

I have bumped up against the Windows path limit (260 characters by default) when saving a PBIX in PBIP format. One trigger was the lengthy subfolder name for a custom visual, which includes a GUID. But in any case this seems a risk for any editing work using this format: for example, add a new table with a longer table name, blow the limit, and saving will crash.

Can you add some logic to Power BI Desktop to detect and avoid these issues?
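Until Desktop handles this itself, the path-length risk described above can be checked from outside. The following is only a minimal sketch, assuming the classic Windows limit of 260 characters; the function name `find_long_paths` and the `margin` parameter are made up for illustration and are not part of any Power BI tooling.

```python
import os
from pathlib import Path

# Classic Windows MAX_PATH value (assumption: long-path support is not enabled).
MAX_PATH = 260


def find_long_paths(root, limit=MAX_PATH, margin=20):
    """Return (length, path) pairs for files whose absolute path is
    within `margin` characters of `limit`, longest first."""
    root = Path(root).resolve()
    risky = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            if len(full) >= limit - margin:
                risky.append((len(full), full))
    return sorted(risky, reverse=True)


if __name__ == "__main__":
    # Scan the current folder, e.g. the root of a PBIP project.
    for length, path in find_long_paths("."):
        print(f"{length:4d}  {path}")
```

Running it from the project root before saving or committing lists the files that are close to the limit, so the project can be moved to a shorter location first.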

Thanks for the feedback. In your opinion, what would be the best experience? Blocking you from creating the table because it would generate a long path? Today we block on save, which lets you save to another location if you wish.

I think your current functionality is the most practical.

Continuous deployment from Azure DevOps (with PBIP) to dev workspaces is the most important planned feature for me.

I see there is some progress there with the new API and pipeline scripts, but I am unwilling to implement preview features across the enterprise.

Hi,

Congrats on the solution.

- It would be great to have an interface or VS Code extension that resolves all the project relations and dependencies automatically, like the MS SQL project support we have in VS Code.

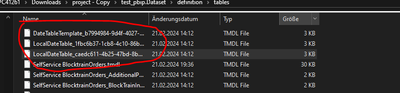

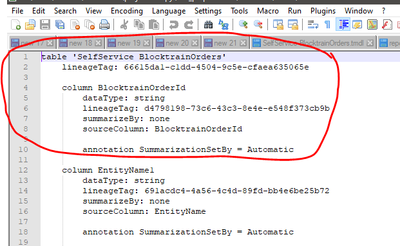

- It would be great to improve the docs to explain the logic of the TMDL language. For example, tables such as "LocalDateTable_caedc611..." are created in the definition, and we have no documentation to help us understand them.

The same goes for the definition of column notation and options.

The available docs don't provide it:

https://learn.microsoft.com/en-us/power-bi/developer/projects/projects-overview

https://learn.microsoft.com/en-us/power-bi/developer/projects/projects-dataset

Thanks for the feedback.

About PBIP deployment, you may use the new Fabric APIs:

https://learn.microsoft.com/en-us/rest/api/fabric/articles/item-management/item-management-overview

Example using PowerShell: https://github.com/microsoft/Analysis-Services/tree/master/pbidevmode/fabricps-pbip

About the local date tables: they are not specific to TMDL. They show up in your model because the Auto date/time feature is enabled for it: https://learn.microsoft.com/en-us/power-bi/transform-model/desktop-auto-date-time

Hi @RuiRomanoMS ,

Our biggest limitation when using the .pbip format is that there does not seem to be a way to broadcast new or updated semantic models across a large number of enterprise workspaces in an app-owns-data embedded solution using the Fabric APIs.

Currently, service principals cannot call the Fabric - Core - Git APIs, so we are unable to programmatically create workspaces and initialize them to a Git branch. Workspaces are also limited to one Power BI deployment pipeline stage, and therefore one deployment pipeline, so even having a source workspace from which we deploy content to hundreds of workspaces is serialized.

Combined with the fact that modifying a semantic model through the XMLA endpoint with a third-party tool like Tabular Editor causes export to .pbix to fail, we are struggling to find a way to deploy the .pbip format at scale.

Are there any updates on the horizon for enabling service principal and service principal profile support for Git APIs or lifting the one workspace-to-one pipeline limitation? We would love to continue using the .pbip format in our CI/CD process, but dropping and re-publishing a .pbix seems to be the way to go at the moment.

Thanks for your feedback.

It is possible to use the Fabric REST APIs to deploy PBIP using service principals, but for now only reports and semantic models are supported. See these examples:

Analysis-Services/pbidevmode/fabricps-pbip at master · microsoft/Analysis-Services (github.com)

Deploy a Power BI project using Fabric APIs - Microsoft Fabric REST APIs | Microsoft Learn
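To make the deployment idea above a little more concrete, here is a rough sketch of how the request body for creating an item from a PBIP folder could be assembled. It assumes, based on my reading of the linked Item Management docs, that the definition is sent as a list of parts with a relative `path`, a Base64 `payload`, and `payloadType` set to `InlineBase64`. The function name `build_item_payload` and the list of skipped local files are assumptions for illustration, and the sketch makes no network call.

```python
import base64
from pathlib import Path

# Assumption: these local-only files should not be uploaded with the definition.
LOCAL_ONLY_FILES = {"cache.abf", "localSettings.json"}


def build_item_payload(item_folder, display_name, item_type):
    """Build a JSON-serializable body from a PBIP item folder
    (for example 'Sales.SemanticModel' or 'Sales.Report')."""
    item_folder = Path(item_folder)
    parts = []
    for file in sorted(item_folder.rglob("*")):
        if not file.is_file() or file.name in LOCAL_ONLY_FILES:
            continue
        parts.append({
            # Paths are relative to the item folder, with forward slashes.
            "path": file.relative_to(item_folder).as_posix(),
            "payload": base64.b64encode(file.read_bytes()).decode("ascii"),
            "payloadType": "InlineBase64",
        })
    return {
        "displayName": display_name,
        "type": item_type,  # e.g. "SemanticModel" or "Report"
        "definition": {"parts": parts},
    }
```

The resulting dictionary would then be posted to the create-item endpoint of the target workspace with a service principal token. The PowerShell sample linked above remains the reference for authentication and for the details of which files to include.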

Hi Rui,

Thank you for referring me to this resource. I was confused when I saw this at first because the Item Overview - Power BI table made me think that it wouldn't be possible to update a semantic model's properties or definition once deployed.

This looks like it could meet our needs. I do still have some concerns about scalability, 429 errors, and parallelization when it comes to updating semantic model properties because it is writing out to the file.

Are there any plans to allow service principal profile support? What about lifting the one workspace-to-one-pipeline limitation or letting service principals call the Fabric Git APIs?
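On the 429 concern raised above, a common client-side mitigation is to retry throttled calls with backoff. This is a generic sketch, not something taken from the Fabric samples: the `Throttled` exception and the `send` callable are hypothetical stand-ins for whatever HTTP client is in use, and honoring a server-provided retry delay is an assumption about how the service signals throttling.

```python
import time


class Throttled(Exception):
    """Hypothetical error raised by the caller's HTTP wrapper on HTTP 429."""

    def __init__(self, retry_after=None):
        super().__init__("HTTP 429")
        self.retry_after = retry_after  # seconds suggested by the server, if any


def call_with_retry(send, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call `send()` and retry on Throttled, waiting the server-suggested
    delay when present, otherwise an exponentially growing delay."""
    for attempt in range(1, max_attempts + 1):
        try:
            return send()
        except Throttled as exc:
            if attempt == max_attempts:
                raise
            if exc.retry_after is not None:
                delay = exc.retry_after
            else:
                delay = base_delay * 2 ** (attempt - 1)
            sleep(delay)
```

When updating many workspaces in parallel, each worker can wrap its calls this way, so a burst of throttling slows the run down instead of failing it.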

LocalDateTable entries are an indication that you forgot to disable Auto Date/Time. They should never be part of an enterprise semantic model.
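A quick way to check a PBIP project for leftover auto date/time tables is to scan its TMDL files. This is a minimal sketch that assumes such tables are declared with a `table` line whose name starts with `LocalDateTable_` or `DateTableTemplate_`; the function name `find_auto_date_tables` is made up for illustration.

```python
import re
from pathlib import Path

# Assumption: auto date/time tables are declared as
# "table LocalDateTable_<guid>" or "table DateTableTemplate_<guid>",
# optionally wrapped in single quotes.
AUTO_DATE_TABLE = re.compile(
    r"^table\s+'?((?:LocalDateTable|DateTableTemplate)_[0-9A-Fa-f-]+)'?\s*$",
    re.MULTILINE,
)


def find_auto_date_tables(model_folder):
    """Return the names of auto date/time tables declared in any
    .tmdl file under `model_folder`."""
    found = []
    for tmdl in sorted(Path(model_folder).rglob("*.tmdl")):
        # utf-8-sig tolerates a leading byte order mark.
        text = tmdl.read_text(encoding="utf-8-sig")
        found.extend(AUTO_DATE_TABLE.findall(text))
    return found
```

A non-empty result could be used to fail a pull request check, as a reminder to turn off Auto date/time in Desktop before committing.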

Hi,

We have a lot of reports live-connected to on-prem SSAS (Fabric is on the roadmap).

I would like to add the report part of these files to source control, and I think I succeeded with it yesterday.

I save the thin report as PBIP > commit to the DevOps repo > connect the workspace to the repo.

But trying the setup from scratch again today, when I click Update all in the workspace I just get: Failed to discover dependencies ... Azure Analysis Services and SQL Server Analysis Services hosted semantic models are not supported....

Is it possible?

We are working to support the AS/AAS scenario. Until then, if all you want is to source control the thin reports, it should work if you live-connect the report to the model in the service rather than to Analysis Services, because the connection in definition.pbir will then point to the model in the workspace and not to Analysis Services. I believe the error you get is because the connection in definition.pbir points to the Analysis Services server. Can you confirm?

Great that you are working on it.

Yes, I can confirm. There is no model in the service that I can connect to, as the reports are connected through a gateway to on-prem SSAS. So I saved the AS-connected PBIX as a PBIP, and I get the error when I try to sync from the Azure DevOps repo into an empty workspace. The definition.pbir connectionString points at the SSAS server.
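For anyone who wants to check which kind of connection their thin reports carry before syncing, the following sketch reads definition.pbir and classifies it. It assumes the file has a `datasetReference` object with either `byPath` or `byConnection.connectionString`, and that a Data Source starting with `powerbi://` or `pbiazure://` means a model in the service; the function name `describe_report_connection` and the returned labels are made up for illustration.

```python
import json
from pathlib import Path

# Assumption: these Data Source prefixes indicate a model hosted in the service.
SERVICE_PREFIXES = ("powerbi://", "pbiazure://")


def describe_report_connection(pbir_path):
    """Classify the dataset reference in a definition.pbir file as
    'local-model', 'service-model', 'analysis-services' or 'unknown'."""
    data = json.loads(Path(pbir_path).read_text(encoding="utf-8-sig"))
    ref = data.get("datasetReference") or {}
    if ref.get("byPath"):
        return "local-model"
    conn = (ref.get("byConnection") or {}).get("connectionString") or ""
    source = ""
    for part in conn.split(";"):
        key, _, value = part.partition("=")
        if key.strip().lower() == "data source":
            source = value.strip().lower()
    if not source:
        return "unknown"
    if source.startswith(SERVICE_PREFIXES):
        return "service-model"
    # Anything else is treated as an AS/AAS/SSAS server (heuristic).
    return "analysis-services"
```

Reports that come back as `analysis-services` are the ones likely to hit the "Failed to discover dependencies" error described above.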
