
Why is Merging so Slow in Power Query?

I have a table named "Employee Snapshot".

It has dimensional attributes such as Employee Class, Employee Code, Employee Status, etc.

So I am removing all the dimensional columns and keeping only the dimension keys that link Employee Snapshot to the dimension tables.

Steps I have already taken:

- Kept only the required columns in the snapshot table

- Filtered to the top 10 records only

- Disabled several background data-loading settings

- Tried Table.Join instead of Table.NestedJoin, but it isn't working; maybe I'm not applying it correctly

Now my fact table needs 14 merge operations to get 14 keys in place of the 14 dimensional values.

The problem is that it loads forever whenever I make any change during development, and this is massively affecting my work.

Please help. Thanks!
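The key-replacement step described in the question is essentially a lookup: for each dimensional attribute, find the matching key. As a language-neutral illustration (Python, not Power Query M; the table names, columns, and key values are hypothetical), the sketch below builds one dictionary per dimension and swaps each attribute for its key in a single pass over the snapshot rows. It only shows the shape of the work; it says nothing about how Power Query executes merges internally.

```python
# Hypothetical dimension tables: each maps an attribute value to its surrogate key.
# Column names and key values are illustrative assumptions, not from the post.
DIMENSIONS = {
    "Employee Class": {"Full-time": 1, "Part-time": 2},
    "Employee Status": {"Active": 10, "Terminated": 11},
}


def replace_attributes_with_keys(fact_rows, dimensions):
    """Swap each dimensional attribute in every fact row for its dimension key.

    Each dimension is a dict, so every lookup is an average O(1) hash probe,
    and the whole pass costs roughly rows x dimensions lookups.
    """
    result = []
    for row in fact_rows:
        new_row = dict(row)  # copy so the input rows stay untouched
        for column, lookup in dimensions.items():
            value = new_row.pop(column)
            # None mimics a left outer merge that finds no matching dimension row.
            new_row[column + " Key"] = lookup.get(value)
        result.append(new_row)
    return result


snapshot = [
    {"Employee ID": 101, "Employee Class": "Full-time", "Employee Status": "Active"},
    {"Employee ID": 102, "Employee Class": "Part-time", "Employee Status": "Unknown"},
]

for row in replace_attributes_with_keys(snapshot, DIMENSIONS):
    print(row)
```

With 14 dimensions, the `DIMENSIONS` dict would simply hold 14 lookups; the fact table is still scanned only once.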


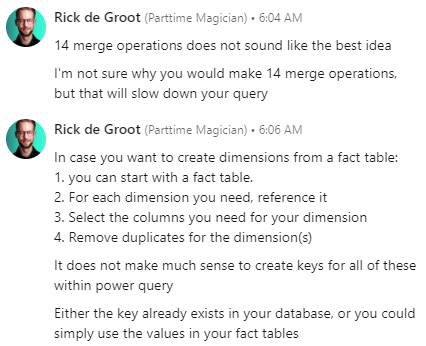

I received help on LinkedIn from Power Query champion Rick de Groot.

I wish I could tag him here.

His response made me think more deeply, and I redesigned the whole thing more efficiently.

This is what he replied:


One way to improve merge performance while developing: filter both tables you are merging down to a single value, build the merge, then go back to the base table and delete the "Filtered Rows" step from Applied Steps.


I have tried that; it wasn't much help.


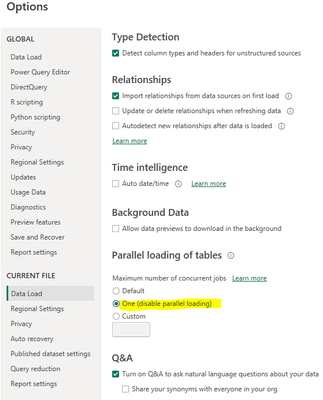

@GauravGG, there's no denying that PQ is slow and may not scale to large datasets. Still, make sure you use the settings shown in the screenshot, which might slightly improve performance (though I doubt they will help much with 14 joins).

Having said that, if this is a data-level task and you have Premium capacity, you can bring all those tables into a datamart and use its SQL endpoint to write the joins in SQL against fully qualified table names (basically, the data transformations shift from PQ to SQL). If you have Fabric, the options are even wider (blazing-fast Scala, Apache Spark, Spark SQL, T-SQL, Python, R).
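To make "shift the transformations from PQ to SQL" concrete, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in for a datamart SQL endpoint. That substitution is an assumption for illustration only: the real endpoint speaks T-SQL and its details differ. The table and column names are hypothetical. The point is that all the key lookups become one `SELECT` with a `LEFT JOIN` per dimension, executed by the database engine rather than as separate merge steps.

```python
import sqlite3

# In-memory SQLite database standing in for the datamart (assumption).
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Hypothetical schema: a snapshot with text attributes plus two dimensions.
cur.executescript("""
CREATE TABLE employee_snapshot (employee_id INTEGER, employee_class TEXT, employee_status TEXT);
CREATE TABLE dim_employee_class (class_key INTEGER PRIMARY KEY, employee_class TEXT UNIQUE);
CREATE TABLE dim_employee_status (status_key INTEGER PRIMARY KEY, employee_status TEXT UNIQUE);
INSERT INTO employee_snapshot VALUES (101, 'Full-time', 'Active'), (102, 'Part-time', 'Unknown');
INSERT INTO dim_employee_class VALUES (1, 'Full-time'), (2, 'Part-time');
INSERT INTO dim_employee_status VALUES (10, 'Active'), (11, 'Terminated');
""")

# One LEFT JOIN per dimension; the real model would have 14 of these.
query = """
SELECT s.employee_id, c.class_key, st.status_key
FROM employee_snapshot AS s
LEFT JOIN dim_employee_class AS c ON c.employee_class = s.employee_class
LEFT JOIN dim_employee_status AS st ON st.employee_status = s.employee_status
ORDER BY s.employee_id
"""
rows = cur.execute(query).fetchall()
for row in rows:
    print(row)  # unmatched attributes come back as None (SQL NULL)

conn.close()
```

The `LEFT JOIN` keeps snapshot rows whose attribute has no dimension match, which mirrors a left outer merge in Power Query.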

If this is an analysis-level task, you can achieve this by building a data model (its relationships do the join in memory, so no explicit merge is needed) and writing DAX calculations.
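A rough analogy for the data-model suggestion, written in Python rather than DAX and not a description of how the Power BI engine works internally: the fact table and the dimension table stay separate, a shared column acts as the relationship, and the lookup happens only when a measure is evaluated. The sketch assumes (hypothetically) that the snapshot's existing "Employee Status" text can serve as the relationship column, so no merge is needed to fetch a key; the "Status Group" attribute is also invented for illustration.

```python
from collections import Counter

# Hypothetical fact rows: the snapshot keeps the attribute it already has.
fact_snapshot = [
    {"Employee ID": 101, "Employee Status": "Active"},
    {"Employee ID": 102, "Employee Status": "On Leave"},
    {"Employee ID": 103, "Employee Status": "Terminated"},
]

# Hypothetical dimension table keyed on the same column. This dict plays the
# role of a one-to-many relationship from the dimension to the fact table.
dim_status = {
    "Active": {"Status Group": "Current"},
    "On Leave": {"Status Group": "Current"},
    "Terminated": {"Status Group": "Former"},
}


def headcount_by(facts, dim, attribute):
    """Count fact rows per dimension attribute, following the relationship
    only at evaluation time instead of merging the tables beforehand."""
    return Counter(dim[row["Employee Status"]][attribute] for row in facts)


print(headcount_by(fact_snapshot, dim_status, "Status Group"))
```

In Power BI the equivalent would be a relationship between the two tables plus a measure; no merged table is ever materialized in Power Query.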


I tried the option you showed to disable parallel loading; it didn't help much.

Also, I'm not sure I understood this part of your response:

"If this is an analysis-level task you can achieve this by building a data-model (it is the join that gets committed to the memory without needing explicit join) and writing DAX calculations"
