FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Vote for your favorite vizzies from the Power BI Dataviz World Championship submissions. Vote now!

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Understanding the Vertipaq Engine

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Understanding the Vertipaq Engine

Hello

I am doing a deep dive into the Vertipaq engine and I have a few questions regarding a concrete use case.

I have a .pbix file with two Fact Tables:

- Raw (300k rows and 17 columns) with the following structure:

| Location | A | B | C | D | … | O | P |

| London | 644 544 | 417 834 | 961 806 | 367 504 | 83 035 | 893 560 | |

| Vancouver | 717 302 | 153 883 | 616 675 | 997 484 | 779 110 | 672 422 | |

| Copenhagen | 643 329 | 17 978 | 215 068 | 935 608 | 270 478 | 827 192 | |

| London | 279 920 | 224 229 | 774 350 | 764 692 | 770 781 | 929 427 | |

| Vancouvert | 158 089 | 104 762 | 209 378 | 22 990 | 938 295 | 93 513 | |

| Vancouver | 436 206 | 70 139 | 223 948 | 482 096 | 136 600 | 930 017 | |

| Copenhagen | 290 725 | 986 045 | 260 513 | 714 680 | 491 096 | 293 309 | |

| … |

- Unpiv which is the unpivoted version of the 'Raw' table above (4.8 Million rows and 3 columns). So it has the following structure:

| Location | Type | Sales |

| London | A | 644 544 |

| London | B | 417 834 |

| London | C | 961 806 |

| London | D | 367 504 |

| London | O | 83 035 |

| London | P | 893 560 |

| Vancouver | A | 717 302 |

| Vancouver | B | 153 883 |

| Vancouver | C | 616 675 |

| … | ||

| Copenhagen | A | 643 329 |

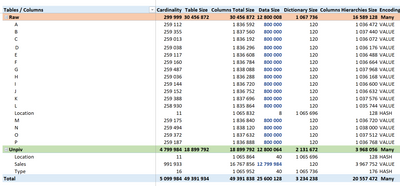

Obviously, I used the super Vertipaq analyzer from @marcorusso & @AlbertoFerrari to understand data compression.

Not surprinsingly, because Vertipaq uses a columnar storage, my 'Unpiv' Table is ~45% smaller than 'Raw' table:

We clearly see (i) the impact of the cardinality of the columns on their total size (ii) the relevance of unpivoting the table. Fair.

Here are my questions :

1. As we can see above, the size difference between 'Raw' and 'Unpiv' is mainly due to the "Column Hierarchies". What is it about ? I found no real explanation on the web or on Marco Russo's book. I read somewhere that it has to do with MDX structure...?!

2. How is the size of Column Hierarchy computed? How can it be estimated or perhaps turned off? I understand the logic of value encoding , dictionnary encoding and RLE encoding. Is it something similar ?

3. What is the unit of the measures displayed by the Vertipaq analyser in the pivot table above ? When I save my .pbix file on my disk, its total size is: 74MB. I have no relationships between 'Raw' & 'Unpiv' and 0 graph on my report. so the data model itself is ~ 73MB (extracted by zipping the .pbix file).

--> As a result, I would expect the Total Table size from the Vertipaq analyzer of my two tables above (49 391 934) to be similar to the 73 MB of the data model. Where does this difference come from ?

Thanks in advance for your answers !

Tristan

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Columns Hierarchies are internal structures storing a sorted textual version of the content of each column. It is required for MDX, and it is now used also internally for optimizing certain queries (in other words - we cannot get rid of it, even for columns we never use un MDX)

- See above - even numbers are converted into strings

- I don't understand the question - but VertiPaq Analyzer reports the memory consumption of the data model, whereas the size of the files could be different (serialization and additional structures).

I hope it helps!

Marco Russo - SQLBI

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Columns Hierarchies are internal structures storing a sorted textual version of the content of each column. It is required for MDX, and it is now used also internally for optimizing certain queries (in other words - we cannot get rid of it, even for columns we never use un MDX)

- See above - even numbers are converted into strings

- I don't understand the question - but VertiPaq Analyzer reports the memory consumption of the data model, whereas the size of the files could be different (serialization and additional structures).

I hope it helps!

Marco Russo - SQLBI

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear Marco,

I hear you in this video sayng that is possible to disable Hier Size in SSAS, when not used, of course.

And now I can also see the green tickbox just on top, which makes me think that is really possible.

Could you be so kind to address me to some specific article where I can see how to do that?

Also let me thank you for all the availability, support and videos.

You are making a great job among PBI and Dax world! A Big thank you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You should set the "Available In MDX" property to False. The internal property name is "isAvailableInMdx" and it is described here: Chris Webb's BI Blog: How The New IsAvailableInMDX Property For Analysis Services Tabular Can Reduce...

This property is available only in Tabular Editor and Visual Studio, you cannot modify it from Power BI Desktop.

Disabling it in Power BI Desktop is not supported - it could work, I never tried it. It is supported for Analysis Services (Azure and SSAS). It should work on Power BI Service as well through XMLA endpoint.

Don't disable this feature on columns that could be used as filter or exposed as visible columns to Excel. Disable only when a column is only used in an aggregation like SUM (...) and it is never visible to user.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I will definitely follow your recommendations. Thanks a lot for replying quickly.

Hopefully I will get the best from VertyPaq analyzer and improve performance of the Tabular Model which we have.

Thanks again.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For the folks on this thread, there is a new 'Available in MDX' feature in Azure Analysis Services, SSAS 2017 (and soon Power BI Premium) which answers my questions n°1 and n°2 above: https://blog.crossjoin.co.uk/2018/07/02/isavailableinmdx-ssas-tabular/

Helpful resources

Join our Community Sticker Challenge 2026

If you love stickers, then you will definitely want to check out our Community Sticker Challenge!

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 58 | |

| 57 | |

| 45 | |

| 17 | |

| 17 |

| User | Count |

|---|---|

| 113 | |

| 108 | |

| 38 | |

| 35 | |

| 26 |