- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Earn a 50% discount on the DP-600 certification exam by completing the Fabric 30 Days to Learn It challenge.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: RANK based on DISTINCT Count of occurrences

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

RANK based on DISTINCT Count of occurrences

I want to achieve the following SQL query in PowerBI measure using DAX (SUMMARISED table😞

SELECT A,B,C, COUNT(D), COUNT(DISTINCT D), RANK() OVER(ORDER BY COUNT(DISTINCT D) DESC)

FROM #TABLE

GROUP BY A,B,C

[A, B, C & D] are columns

Actual requirement:

Rank an item based on Distinct occourrences such that maximum distinct count would be ranked 1.

Also note that, applying any filter should dynamically compute the Rank.

Cannot use direct query or table. I need a DAX measure.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Take a look at my file: https://1drv.ms/u/s!AiiWkkwHZChHjyDG5LGALpkRvYll

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Gnanasekar wrote:I want to achieve the following SQL query in PowerBI FetLife IMVU Canva measure using DAX (SUMMARISED table😞

SELECT A,B,C, COUNT(D), COUNT(DISTINCT D), RANK() OVER(ORDER BY COUNT(DISTINCT D) DESC)

FROM #TABLE

GROUP BY A,B,C

[A, B, C & D] are columns

Actual requirement:

Rank an item based on Distinct occourrences such that maximum distinct count would be ranked 1.

Also note that, applying any filter should dynamically compute the Rank.

Cannot use direct query or table. I need a DAX measure.

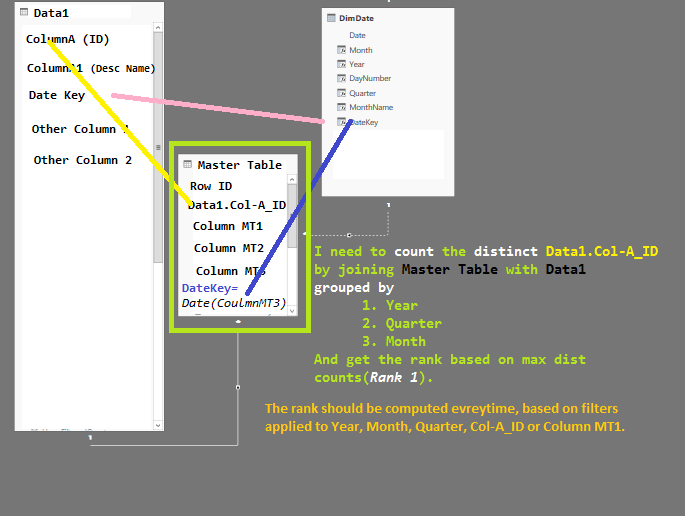

I ought to achive my normal outcome (Image shared prior) utilizing the accompanying table structure. Where Data1 and Data2 are transported in from SQL DB utilizing SQL inquiry. Lines demonstrate the relationship.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @LivioLanzo,

Apologies for confusing you.

I have uploaded a sample file with a similar table model, please find the GDRIVE link here.

@v-lili6-msft, you may also try the link for better understanding of my requirement.

My expectation is possible in SQL, not sure why its not in PowerBI or how it needs to be written using DAX. Is there any fundamental issue that I have not noticed?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Take a look at my file: https://1drv.ms/u/s!AiiWkkwHZChHjyDG5LGALpkRvYll

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @LivioLanzo,

Thank you so much ![]() , I have applied the implementation to my original requirement. It's working beautifully!!

, I have applied the implementation to my original requirement. It's working beautifully!!

Would you mind sharing your twitter id?

I added a column to fetch EmployeeCode using the LogID. Also, can you please explain, why this wasn't working without a schema model? I am basically a SQL BI guy, and PowerBI's intelligence seems to be confusing to me at times!

Thanks & Regards,

-Nitin

(on behalf of Gnanasekar)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Your model was following a sort of Header/Detail scheme so I normalized the EmpID and the Tower Update Date into the DoorLog table and created a star schema, this made it simpler and you did not need to traverse the the TowerLog table anymore to reach the DoorLog table.

Would you mind sharing your twitter id?

I am not too active on Twitter but you can check my blog which is still new but getting there: https://xcelanz.com/ , you will find a link to my LinkedIn if you wanna add me there

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you once again @LivioLanzo.

Basically, I have summarised the Log table to form dimensions, thus kind of normalising it. I understand that it would be better to have tables in normalised - start schema for better functionality, in PowerBI.

-Nitin.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

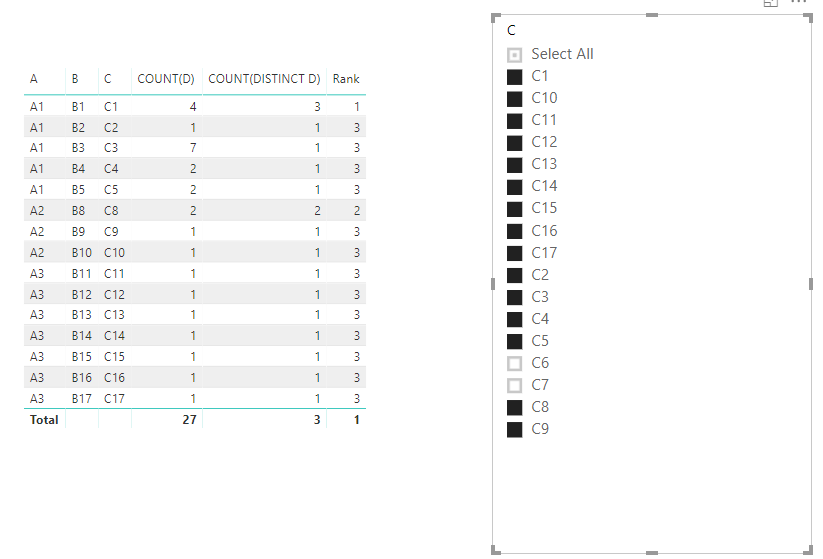

hi, @Gnanasekar

After my test on simple sample data,

you may try to use this measure as below:

Rank =

RANKX (

ALLSELECTED(Data),

CALCULATE ( DISTINCTCOUNT ( Data[D] ),ALLEXCEPT(Data,Data[A],Data[B],Data[C]) ),

,

DESC,

DENSE

)Result:

and after select data from slicer

here is pbix, please try it.

If it is not your case, please share a simple sample pbix file and expected output. You can upload it to OneDrive or Dropbox and post the link here. Do mask sensitive data before uploading.

Best Regards,

Lin

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@v-lili6-msft This works on importing a table and then summarising it using a DAX and then writing a measure, But I need this to be directly used on the imported table by means of a measure, rather than summarizing the m,aster table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Gnanasekar Please post a sample of your table, along with expected result

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

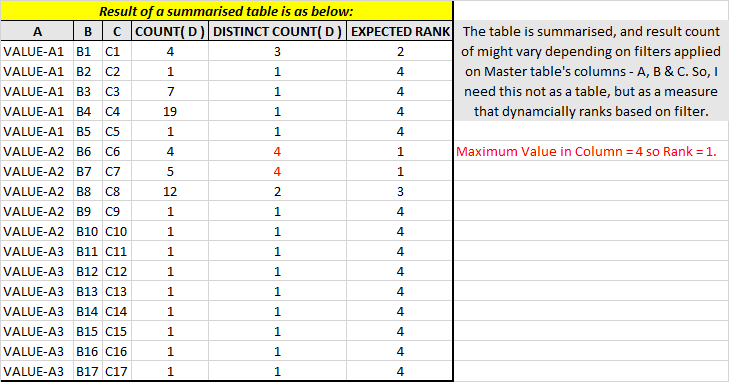

Hi @LivioLanzo

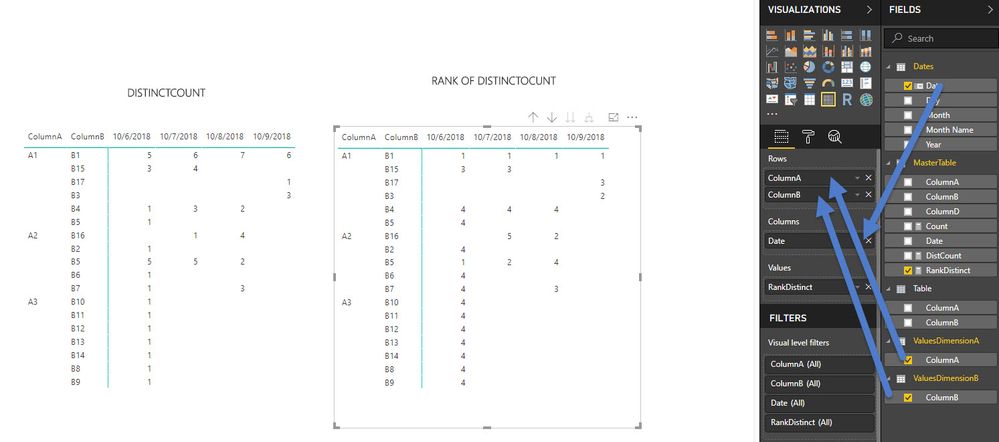

The following is my expectation (a summarised result of a Master table having columns A, B & C using SUMMARISE DAX expression, while D & Rank are aggregated using expressions),

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I rebuilt your original table and then used these measures:

Count =

COUNTROWS ( Data )

DistinctCountD =

DISTINCTCOUNT ( Data[D] )

Rank =

RANKX (

CALCULATETABLE (

SUMMARIZE ( Data, Data[A], Data[B], Data[C] ),

ALLSELECTED ( Data )

),

CALCULATE ( DISTINCTCOUNT ( Data[D] ) ),

,

DESC,

DENSE

)

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you @LivioLanzo,

It is working but, the slicing & dicing is not happening due to summarised table. The following is the structure of my table

'Master Table' = { [Column-A], [Column-B], [Column-C], [Column-D], [Column-DateKEY] }

If I create a summary table named

'Data' = SUMMARIZE ( Data1, Data1[A], Data1[B], DimDate[year], DimDate[Quarter] & DimDate[Month] ) ==> I will be loosing the ability to slice & dice. cuz i can't group by [Column-DateKEY].

So, I tried the following :

[1] t1 Count = COUNTROWS(Data1)

[2] t2 Distinct =

VAR TABLE1 = SUMMARIZE(Data1, Data1[Column-A],Data1[Column-B], DimDate[Year], DimDate[Month], Data2[Column-D])

RETURN COUNTX(TABLE1, Data2[Column-D])

Basically, I tried to modify the DAX as below:

[3] t3 RankHelp =

VAR TABLE2 = SUMMARIZE(Data1, Data1[Column-A],Data1[Column-B], DimDate[Year], DimDate[Month], Data2[Column-D])

RETURN

RANKX (

CALCULATETABLE (

TABLE2,

ALLSELECTED ( TABLE2 ) ==> This was throwing some error

),

CALCULATE ( COUNTX(TABLE2, Data1[Column-D])),

,

DESC,

DENSE

)

Hope you could understand my objective, i.e. trying to use the table imported via SQL (select * from MasterTable) and directly using it to find RANK without creating another summarised table so that I could retain relationship.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

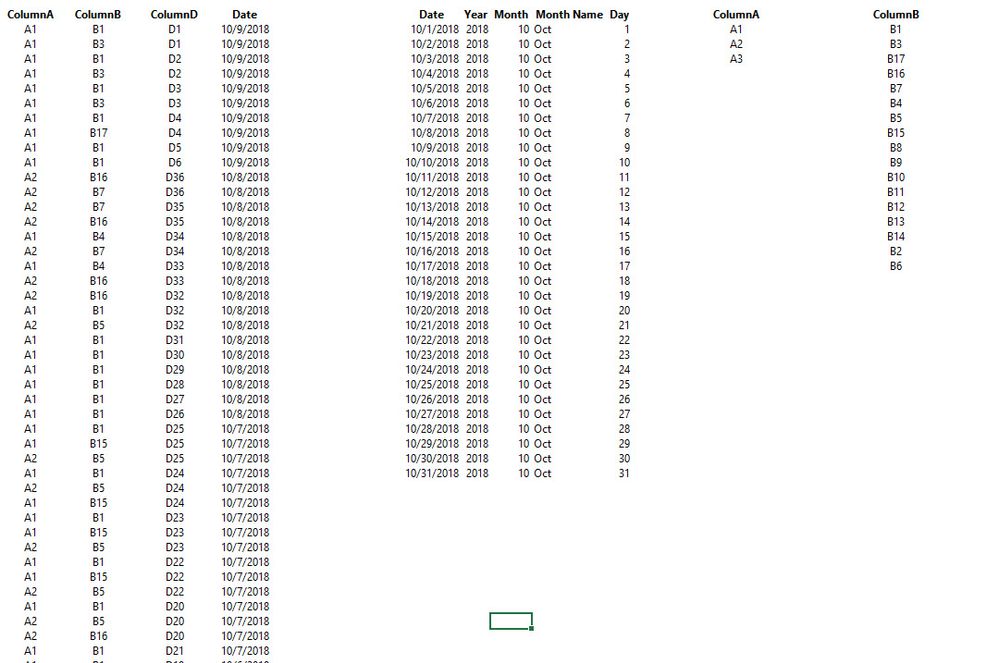

Hi @LivioLanzo,

I should achive my expected result (Image shared earlier) using the following table structure. Where Data1 & Data2 are imported from SQL DB using SQL query. Lines indicate the relationship.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @LivioLanzo,

As requested plese find the sample data:

Note:

- Column 2 - is a result of joining Tables Data1 & Data2 on IDs.

- DimDate table has datekey column to link datekey of tables Data1 & Data2 on Datekeys (extracted from date columns).

data sample

| SELECT * FROM [Master Table] | ||||

| DISTINCT COUNT & Rank based on below: | dimension 1 | dimension 2 | dimension 3 | |

| RowID | Data1[Column-D] :: Data2[Column-A2] | [Column-A] | [Column-B] | [Column-C] |

| 1 | 1 :: VALUE-D1 | VALUE-A1 | VALUE-B1 | 10/9/18 8:29 PM |

| 2 | 1 :: VALUE-D1 | VALUE-A1 | VALUE-B3 | 10/9/18 8:30 PM |

| 3 | 2 :: VALUE-D2 | VALUE-A1 | VALUE-B1 | 10/9/18 8:16 PM |

| 4 | 2 :: VALUE-D2 | VALUE-A1 | VALUE-B3 | 10/9/18 8:16 PM |

| 5 | 3 :: VALUE-D3 | VALUE-A1 | VALUE-B1 | 10/9/18 8:05 PM |

| 6 | 3 :: VALUE-D3 | VALUE-A1 | VALUE-B3 | 10/9/18 8:06 PM |

| 7 | 4 :: VALUE-D4 | VALUE-A1 | VALUE-B1 | 10/9/18 7:50 PM |

| 8 | 4 :: VALUE-D4 | VALUE-A1 | VALUE-B17 | 10/9/18 7:50 PM |

| 9 | 5 :: VALUE-D5 | VALUE-A1 | VALUE-B1 | 10/9/18 7:28 PM |

| 10 | 6 :: VALUE-D6 | VALUE-A1 | VALUE-B1 | 10/9/18 2:56 PM |

| 11 | 36 :: VALUE-D36 | VALUE-A2 | VALUE-B16 | 10/8/18 5:52 PM |

| 12 | 36 :: VALUE-D36 | VALUE-A2 | VALUE-B7 | 10/8/18 6:16 PM |

| 13 | 35 :: VALUE-D35 | VALUE-A2 | VALUE-B7 | 10/8/18 6:16 PM |

| 14 | 35 :: VALUE-D35 | VALUE-A2 | VALUE-B16 | 10/8/18 5:51 PM |

| 15 | 34 :: VALUE-D34 | VALUE-A1 | VALUE-B4 | 10/8/18 1:28 PM |

| 16 | 34 :: VALUE-D34 | VALUE-A2 | VALUE-B7 | 10/8/18 6:17 PM |

| 17 | 33 :: VALUE-D33 | VALUE-A1 | VALUE-B4 | 10/8/18 12:30 PM |

| 18 | 33 :: VALUE-D33 | VALUE-A2 | VALUE-B16 | 10/8/18 5:52 PM |

| 19 | 32 :: VALUE-D32 | VALUE-A2 | VALUE-B16 | 10/8/18 5:52 PM |

| 20 | 32 :: VALUE-D32 | VALUE-A1 | VALUE-B1 | 10/8/18 12:26 PM |

| 21 | 32 :: VALUE-D32 | VALUE-A2 | VALUE-B5 | 10/8/18 12:26 PM |

| 22 | 31 :: VALUE-D31 | VALUE-A1 | VALUE-B1 | 10/8/18 12:25 PM |

| 23 | 30 :: VALUE-D30 | VALUE-A1 | VALUE-B1 | 10/8/18 12:23 PM |

| 24 | 29 :: VALUE-D29 | VALUE-A1 | VALUE-B1 | 10/8/18 12:23 PM |

| 25 | 28 :: VALUE-D28 | VALUE-A1 | VALUE-B1 | 10/8/18 12:22 PM |

| 26 | 27 :: VALUE-D27 | VALUE-A1 | VALUE-B1 | 10/8/18 12:21 PM |

| 27 | 26 :: VALUE-D26 | VALUE-A1 | VALUE-B1 | 10/8/18 12:10 PM |

| 28 | 25 :: VALUE-D25 | VALUE-A1 | VALUE-B1 | 10/7/18 5:08 PM |

| 29 | 25 :: VALUE-D25 | VALUE-A1 | VALUE-B15 | 10/7/18 5:08 PM |

| 30 | 25 :: VALUE-D25 | VALUE-A2 | VALUE-B5 | 10/7/18 5:09 PM |

| 31 | 24 :: VALUE-D24 | VALUE-A1 | VALUE-B1 | 10/7/18 4:06 PM |

| 32 | 24 :: VALUE-D24 | VALUE-A2 | VALUE-B5 | 10/7/18 4:06 PM |

| 33 | 24 :: VALUE-D24 | VALUE-A1 | VALUE-B15 | 10/7/18 4:11 PM |

| 34 | 23 :: VALUE-D23 | VALUE-A1 | VALUE-B1 | 10/7/18 3:56 PM |

| 35 | 23 :: VALUE-D23 | VALUE-A1 | VALUE-B15 | 10/7/18 3:57 PM |

| 36 | 23 :: VALUE-D23 | VALUE-A2 | VALUE-B5 | 10/7/18 3:59 PM |

| 37 | 22 :: VALUE-D22 | VALUE-A1 | VALUE-B1 | 10/7/18 1:52 PM |

| 38 | 22 :: VALUE-D22 | VALUE-A1 | VALUE-B15 | 10/7/18 1:54 PM |

| 39 | 22 :: VALUE-D22 | VALUE-A2 | VALUE-B5 | 10/7/18 1:54 PM |

| 40 | 20 :: VALUE-D20 | VALUE-A1 | VALUE-B1 | 10/7/18 11:45 AM |

| 41 | 20 :: VALUE-D20 | VALUE-A2 | VALUE-B5 | 10/7/18 11:45 AM |

| 42 | 20 :: VALUE-D20 | VALUE-A2 | VALUE-B16 | 10/7/18 12:20 PM |

| 43 | 21 :: VALUE-D21 | VALUE-A1 | VALUE-B1 | 10/7/18 11:20 AM |

| 44 | 19 :: VALUE-D19 | VALUE-A1 | VALUE-B1 | 10/6/18 6:10 PM |

| 45 | 19 :: VALUE-D19 | VALUE-A2 | VALUE-B5 | 10/6/18 6:11 PM |

| 46 | 18 :: VALUE-D18 | VALUE-A1 | VALUE-B1 | 10/6/18 5:34 PM |

| 47 | 18 :: VALUE-D18 | VALUE-A1 | VALUE-B15 | 10/6/18 5:34 PM |

| 48 | 18 :: VALUE-D18 | VALUE-A2 | VALUE-B5 | 10/6/18 5:34 PM |

| 49 | 16 :: VALUE-D16 | VALUE-A1 | VALUE-B1 | 10/6/18 5:28 PM |

| 50 | 16 :: VALUE-D16 | VALUE-A1 | VALUE-B15 | 10/6/18 5:28 PM |

| 51 | 16 :: VALUE-D16 | VALUE-A2 | VALUE-B5 | 10/6/18 5:28 PM |

| 52 | 7 :: VALUE-D7 | VALUE-A1 | VALUE-B1 | 10/6/18 5:22 PM |

| 53 | 7 :: VALUE-D7 | VALUE-A2 | VALUE-B5 | 10/6/18 5:22 PM |

| 54 | 8 :: VALUE-D8 | VALUE-A1 | VALUE-B1 | 10/6/18 4:18 PM |

| 55 | 8 :: VALUE-D8 | VALUE-A3 | VALUE-B8 | 10/6/18 4:20 PM |

| 56 | 8 :: VALUE-D8 | VALUE-A3 | VALUE-B9 | 10/6/18 4:21 PM |

| 57 | 8 :: VALUE-D8 | VALUE-A3 | VALUE-B10 | 10/6/18 4:21 PM |

| 58 | 8 :: VALUE-D8 | VALUE-A3 | VALUE-B11 | 10/6/18 4:21 PM |

| 59 | 8 :: VALUE-D8 | VALUE-A3 | VALUE-B12 | 10/6/18 4:21 PM |

| 60 | 8 :: VALUE-D8 | VALUE-A3 | VALUE-B13 | 10/6/18 4:22 PM |

| 61 | 8 :: VALUE-D8 | VALUE-A3 | VALUE-B14 | 10/6/18 4:24 PM |

| 62 | 8 :: VALUE-D8 | VALUE-A1 | VALUE-B5 | 10/6/18 4:27 PM |

| 63 | 8 :: VALUE-D8 | VALUE-A1 | VALUE-B15 | 10/6/18 4:33 PM |

| 64 | 8 :: VALUE-D8 | VALUE-A1 | VALUE-B4 | 10/6/18 4:33 PM |

| 65 | 8 :: VALUE-D8 | VALUE-A1 | VALUE-B4 | 10/6/18 4:34 PM |

| 66 | 8 :: VALUE-D8 | VALUE-A2 | VALUE-B5 | 10/6/18 4:46 PM |

| 67 | 9 :: VALUE-D9 | VALUE-A2 | VALUE-B2 | 10/6/18 2:10 PM |

| 68 | 9 :: VALUE-D9 | VALUE-A2 | VALUE-B7 | 10/6/18 2:11 PM |

| 69 | 9 :: VALUE-D9 | VALUE-A2 | VALUE-B6 | 10/6/18 2:12 PM |

| 70 | 10 :: VALUE-D10 | VALUE-A2 | VALUE-B5 | 10/8/18 1:01 AM |

| 71 | 10 :: VALUE-D10 | VALUE-A1 | VALUE-B4 | 10/7/18 11:17 PM |

| 72 | 17 :: VALUE-D17 | VALUE-A1 | VALUE-B4 | 10/7/18 11:20 PM |

| 73 | 5 :: VALUE-D5 | VALUE-A1 | VALUE-B4 | 10/7/18 11:20 PM |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

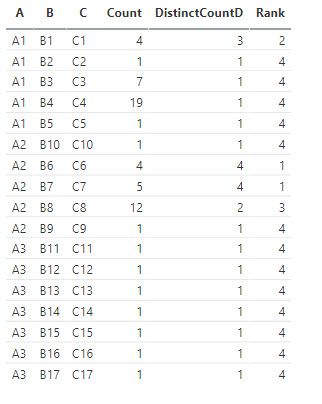

Hi again @Gnanasekar

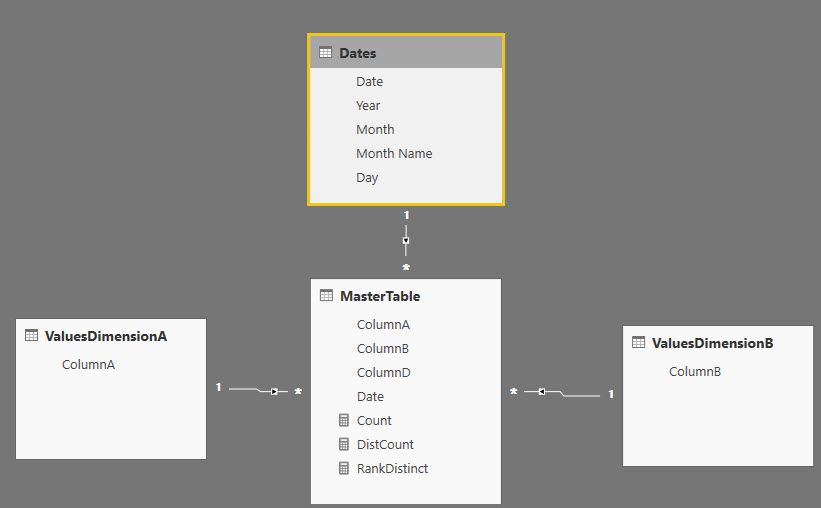

So, I have built the below model with the below data:

Then I have added the below measure from which I have got the below results:

Notice that I have switched off the totals from the visuals, otherwise I had to add some additional conditions to the measure as in this case having totals would make no sense.

You may also want to change the last argument of RANKX from Skip to Dense, in my formula it is set to the default, which is SKIP.

RankDistinct =

IF (

ISEMPTY ( MasterTable ),

BLANK (),

RANKX (

CALCULATETABLE (

SUMMARIZE ( MasterTable, ValuesDimensionA[ColumnA], ValuesDimensionB[ColumnB] ),

ALLSELECTED ( ValuesDimensionA ),

ALLSELECTED ( ValuesDimensionB )

),

CALCULATE ( DISTINCTCOUNT ( MasterTable[ColumnD] ) )

)

)

Is it what you were after ?

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @LivioLanzo,

Can you please try the same using such that you dont group column by Date. Please refer to my expectation image shared earlier.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Gnanasekar you can apply filters to year, month, date and it should still work as far as I can see

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Gnanasekar

would it be possible to share some sample data? also I am assuming you want to slice it by date?

Did I answer your question correctly? Mark my answer as a solution!

Proud to be a Datanaut!

Helpful resources

| User | Count |

|---|---|

| 98 | |

| 90 | |

| 82 | |

| 73 | |

| 67 |

| User | Count |

|---|---|

| 115 | |

| 102 | |

| 98 | |

| 71 | |

| 67 |