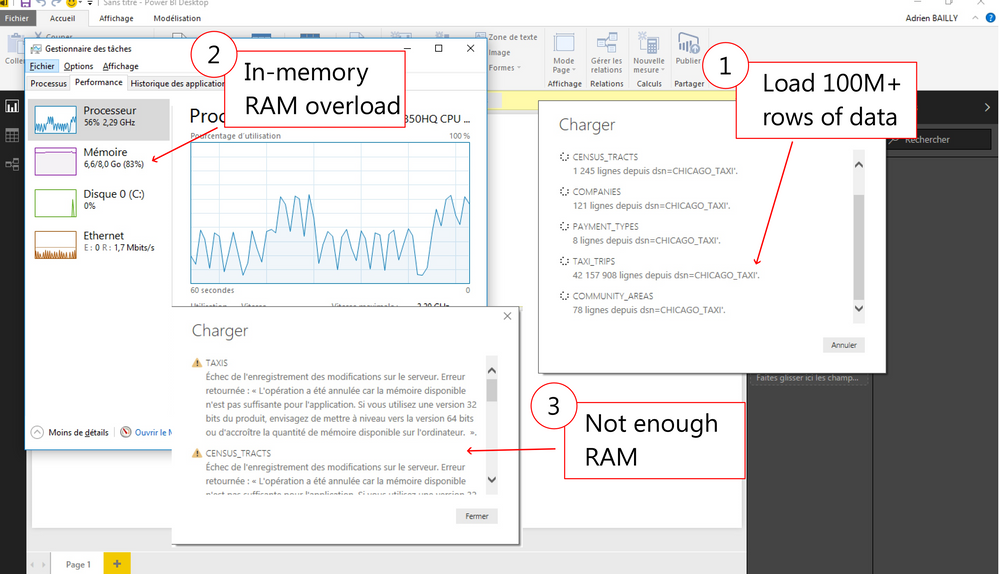

Power BI in-memory RAM = Overload

Hi,

I'm having a problem loading a dataset of more than 100M rows through the OData connector.

After about 45M rows have loaded, my RAM is exhausted (see screenshot).

How can I analyze such a high-volume dataset? (I'm looking for both free and paid options.)

Regards,

Adry

You can try DirectQuery instead of Import mode, or add more memory to your computer.

Best Regards,

Herbert

To reduce the amount of RAM your data model uses, cast each column to the appropriate data type. Very often, a forgotten text column that actually holds numbers will consume all of your RAM in no time.

It is also worth mentioning that the data types in your data model do not necessarily match those returned by your query. That is why checking how your data is typed in the data model is a quick win for RAM consumption.

Secondly, depending on where your data comes from, consider changing your M query. The quick wins here are streaming the data or, if your queries perform intensive calculations, buffering the intermediate steps.

Finally, consider loading the data one query at a time.
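To illustrate the streaming and type-casting ideas outside of Power BI, here is a minimal Python sketch. It assumes the source can be read page by page (OData exposes the `$top` and `$skip` query options for this); `fetch_pages` is a hypothetical stand-in that generates fake rows instead of calling a real service, and the column names are made up. Only one page is held in memory at a time, and the text `amount` values are cast to integers before being aggregated. It does not reflect how Power BI stores data internally.

```python
from typing import Dict, Iterator, List

PAGE_SIZE = 1000  # hypothetical page size, not a Power BI or OData default


def fetch_pages(total_rows: int, page_size: int = PAGE_SIZE) -> Iterator[List[Dict]]:
    """Hypothetical stand-in for paged OData requests ($top / $skip).

    Yields one page of rows at a time; 'amount' arrives as text,
    like a numeric column that was left typed as text.
    """
    for skip in range(0, total_rows, page_size):
        top = min(page_size, total_rows - skip)
        yield [
            {"id": skip + i, "amount": str((skip + i) % 100)}
            for i in range(top)
        ]


def summarize(pages: Iterator[List[Dict]]) -> Dict[str, int]:
    """Aggregate page by page so the full dataset is never held in memory."""
    row_count = 0
    total_amount = 0
    for page in pages:
        for row in page:
            total_amount += int(row["amount"])  # cast text to a number
            row_count += 1
    return {"rows": row_count, "total_amount": total_amount}


summary = summarize(fetch_pages(total_rows=10_000))
print(summary)
```

The same idea applies at larger scale: aggregate or filter as close to the source as possible, so that only the reduced result has to fit in RAM.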

Thanks for the tips!
