Join us at the 2025 Microsoft Fabric Community Conference

Microsoft Fabric Community Conference 2025, March 31 - April 2, Las Vegas, Nevada. Use code FABINSIDER for a $400 discount.

Register now- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Webinars and Video Gallery

- Data Stories Gallery

- Themes Gallery

- Power BI DataViz World Championships Gallery

- Quick Measures Gallery

- R Script Showcase

- COVID-19 Data Stories Gallery

- Community Connections & How-To Videos

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI DataViz World Championships are on! With four chances to enter, you could win a spot in the LIVE Grand Finale in Las Vegas. Show off your skills.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: OLE DB or ODBC error: [Expression.Error] We co...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

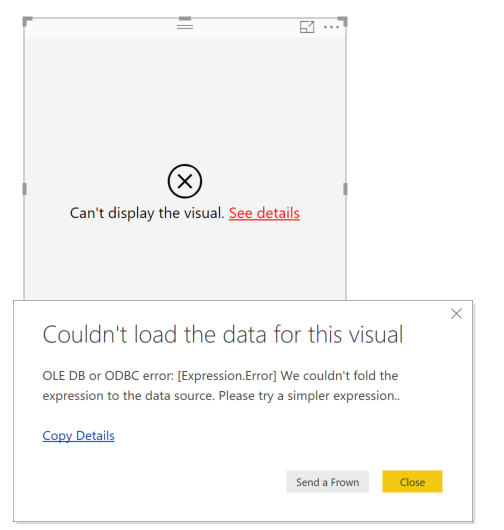

OLE DB or ODBC error: [Expression.Error] We couldn't fold the expression to the data source.

When I try to use Direct Query, an error occurs like "OLE DB or ODBC error: [Expression.Error] We couldn't fold the expression to the data source. ".

It's a third ODBC Driver which can run when using Import mode.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@QIAO @Anonymous @yan @Arebgb612

i was able to resolve this issue by moving my report level dax fields to backend source. Looks like PBI is not able to fold the data because I was using report level dax fields inside another field(shown below).

Original Formula

DAX_Column=COMBINEVALUES(" ", column1_fromsource, column2_fromsource, column3_fromsource, column4_fromreportdax)

New Formula

DAX_Column=COMBINEVALUES(" ", column1_fromsource, column2_fromsource, column3_fromsource, column4_fromsource)

Note- i moved column4_fromreportdax to my backend source and later used that new field in my source query.

This all was working when my source was SAP HANA. I had to make above changes when i changed my source to Snowflake.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I'm not sure if my problem is related to this one, but I would appreciate any help here, please

I have the following Error Message:

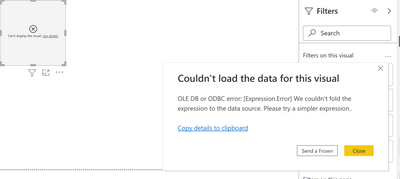

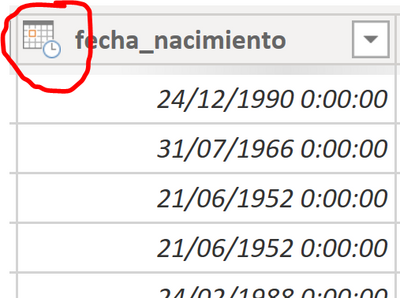

OLE DB or ODBC error: [Expression.Error] We couldn't fold the expression to the data source. Please try a simpler expression.

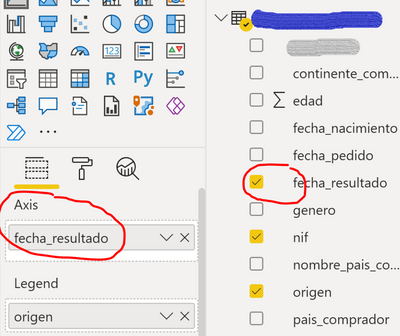

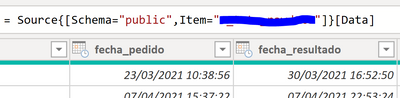

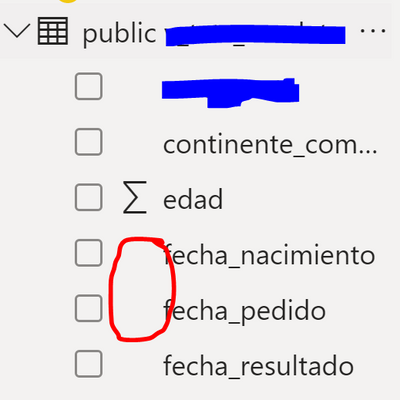

I am connected to a Postgre Database in Azure in Direct Query mode and I think something is wrong with the Data Type (which I cannot edit in Transform data since it is not supported by Direct Query mode), because when I try to transform data the data type is correct

But when I try to create my model Power Bi doesn't recognize the data type, therefore Power BI is unable to creace my graph.

Could you please help me?

Many thanks in advance!

Marina

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

check what data type the 'fetcha_resultado' is - it most probably isn't a supported type in PBI. Since you can't transform on the fly (direct mode), cast or convert that field to a supported type (string, numeric,...) at the DBMS-level, here PostGres.

How ? Create a SQL view based on the source table and use that view as source in PBI instead.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Many thanks for your help Yan!

I have created a SQL view and it happends the same....

I have also tried with a Native query and same result

= Value.NativeQuery(PostgreSQL.Database

............#(lf)p.fecha_pedido::timestamp,#(lf)r.fecha::timestamp as fecha_resultado,................, null, [EnableFolding=true])

Any other suggestion I can try, please?

Many thanks for your help again!

Marina

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I see. Since these are meant to be timestamp, either the formatting is off (weird as postgres to pbi data types is standard), or you have an invalid value on postgres side like 00:00:00).

To confirm, cast the fetcha fields in your view to string, do an import in pbi (temporarily) and transform them there to dates. PBI should tell you what value it couldn't convert. Then adapt your view to clean these fields before trying direct query again.

PBI is pretty bad in telling you what value and record it could not coerce (convert implicitly). The generic message doesn't help troubleshoot at all.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Yan,

I switched DirectQuery to import mode, and PBI directly recognized the column as Date :'( Therefore, I assume the date format in Postgre is properly created, and the problem is in the direct query mode... could it be a Bug in PBI? Do you know how can we report it to Microsoft for them to solve it?

Just to share our conclussiones, what we are going to do is to create 3 columns in the Postgre DDBB with the year, month and date, in order to create some graphs with these data and try if it is enough to represent what we need...

Many many thanks for your help, it has been very helpful!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is something that I'm still digging into but in my understanding the folding error has to do with query run-time data type mapping and the subsequently associated flags.

I'm trying to roll my own ODBC Direct Query solution because we have some proprietary data types (![]() ) which have to be handled. A lot of this has to do with what it included in the custom connector so far (https://github.com/Microsoft/DataConnectors/blob/master/docs/odbc.md) So what I've learned so far:

) which have to be handled. A lot of this has to do with what it included in the custom connector so far (https://github.com/Microsoft/DataConnectors/blob/master/docs/odbc.md) So what I've learned so far:

Super common folding errors:

- "Data Type of column order_status with searchable property UNSEARCHABLE should be SEARCHABLE or ALL_EXCEPT_LIKE." = Text column not being recognized as searchable (One of my proprietary text columns which didn't get correctly cast to WLONGVARCHAR) (ex. sort functions)

- "This ODBC driver doesn't support SQL_FN_TD_TIMESTAMPDIFF. You can override this by using SqlGetInfo for SQL_TIMEDATE_FUNCTIONS." = Date column that is being cast to Timestamp for an odd reason and so Date math cannot be applied. (ex. date filters)

- "This ODBC Driver does not support SQL_GB_NO_RELATION. You can override this by using SqlCapabilities" = Driver does not support the highest level of Group By Requirements, has to be set in custom driver as another one of these values:

SQL_GB_NOT_SUPPORTED = 0,

SQL_GB_GROUP_BY_EQUALS_SELECT = 1,

SQL_GB_GROUP_BY_CONTAINS_SELECT = 2,

SQL_GB_NO_RELATION = 3,

SQL_GB_COLLATE = 4

(ex. group numeric by name) - "This ODBC driver doesn't support SQL_AF_SUM. You can override this by using SqlGetInfo for SQL_AGGREGATE_FUNCTIONS" = Flag not set to allow standard aggregate functions / not picked up in Sql92Conformity parameter.

(ex. any numeric "default summarizations")

At least... that's how far I've gotten so far. If you're interested in contributing, here's my repo.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am having the same issue with the Amazon Redshift connector in Direct Query mode. Including any text column in the query produces this error when I try to create a slicer, table or matrix visual. Number columns work fine.

I have reduced the number of columns retrieved, and it doesn't matter if I restrict the number of rows retrieved by setting filters on date columns in Query Editor. Even a small number of rows and columns fails when I try to create a visual.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@SamTrexlerI have faced the exact issue even after more than 2 years ☹️! Any workaround for this?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

https://github.com/microsoft/DataConnectors

Please read above url and there should be some solution to solve the problem.

I have solved the problem using idea from the link. In reality, I don't remember too much as it's the first and last connector I wrote. I borrowed some idea from Kylin ODBC driver and wrote an ODBC driver to connect to AWS Athena using direct query 2 years ago.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sam - enabling diagnostics tracing in PBI could give you more details on why folding isn't working. I understand MS may push back on the driver to fix and make it compliant e.g. specific attributes values, but we should get guidance on what to request from the driver's author.

For BigQuery, the driver is embedded in PBI desktop so it's all in MS hands...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @QIAO,

You can connect the database in Direct Query, when you try to create a visual, it display the error message, right? What version of Power BI desktop do you use? Please update it to the latest one and check if it works fine.

Best Regards,

Angelia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am getting same error on custom data connector on ODBC. I have built for Apache Kylin ODBC. Preview and schema working fine but data loading fails with same error

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

https://github.com/Microsoft/DataConnectors/blob/master/samples/ODBC/SqlODBC/SqlODBC.pq

Sample for ODBC to support Direct Query.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Have you solved your problems? I just met the same problem when I want to connect Clickhouse with Power BI by using Direct query way.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, I have the same problem with big query connector

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@pstanek what's the datatype of the aggregated field in BigQuery ? If NUMERIC, I just reported a bug about it.

Unfortunately MS / PBI doesn't make the SQL datatype mapping (ODBC driver <--> PBI) configurable. There are many sources and ODBC drivers out there and PBI determines if folding is relevant only if the driver returns all criteria for a given data type.

It would really be great if such mapping and the attributes were editable, in a config file for instance. This would allow to "force" for query folding (=send native sql to source) where actually supported, and as such increase acceptance of PBI.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am getting same error on custom data connector on ODBC. I have built for Apache Kylin ODBC. Preview and schema working fine but data loading fails with same error

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello, I get the same error when connecting to Snowflake using Direct Query. I am using the latest version of Power BI (April 2018). When I try to use any field from the Date dimension or a Date field in the Fact table in a visual, I get the error posted above.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, I see it's an old post. But I am getting the same error when I change Snowflake from import mode to direct query. Were you able to solve the issue? Any specific pointers for Snowflake?

Helpful resources

Join us at the Microsoft Fabric Community Conference

March 31 - April 2, 2025, in Las Vegas, Nevada. Use code MSCUST for a $150 discount!

Power BI Monthly Update - February 2025

Check out the February 2025 Power BI update to learn about new features.

| User | Count |

|---|---|

| 86 | |

| 78 | |

| 54 | |

| 39 | |

| 35 |

| User | Count |

|---|---|

| 102 | |

| 84 | |

| 48 | |

| 48 | |

| 48 |