New Offer! Become a Certified Fabric Data Engineer

Check your eligibility for this 50% exam voucher offer and join us for free live learning sessions to get prepared for Exam DP-700.

Get Started- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

Don't miss out! 2025 Microsoft Fabric Community Conference, March 31 - April 2, Las Vegas, Nevada. Use code MSCUST for a $150 discount. Prices go up February 11th. Register now.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: No way to export data from PBI above 30 K rows...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No way to export data from PBI above 30 K rows ??

Hello Guys,

Now I've spent many days reading and learning about PBI and also bought books for $250, as it really seems to be a good investment to learn DAX and PBI. Just uploaded a typical CSV file for me : 1,3 million rows and 20 columns. Did some basic filtering and wanted to export the filtered result as it is to be used in an online application as a database. Found out about the 30K limit of exporting the hard way. Now that is is very very bad judgement call from someone to limit export sizes.

Understand that there is supposedly some way to export to a cloud - hmm - anyone know how that works ?

I can't get OneDrive to work on my work computer, seems to be limited out somehow.

Possibly could do that on another computer, still not been able to logon there.

The other solutions found on the forum are workarounds, such as slicing into chunks of 20K and then reassembling. Obviously a possible one-off but impossible for ongoing basis

A BI-solution however nice is rendred worthless if there is no way to export data

Very worried at this point

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

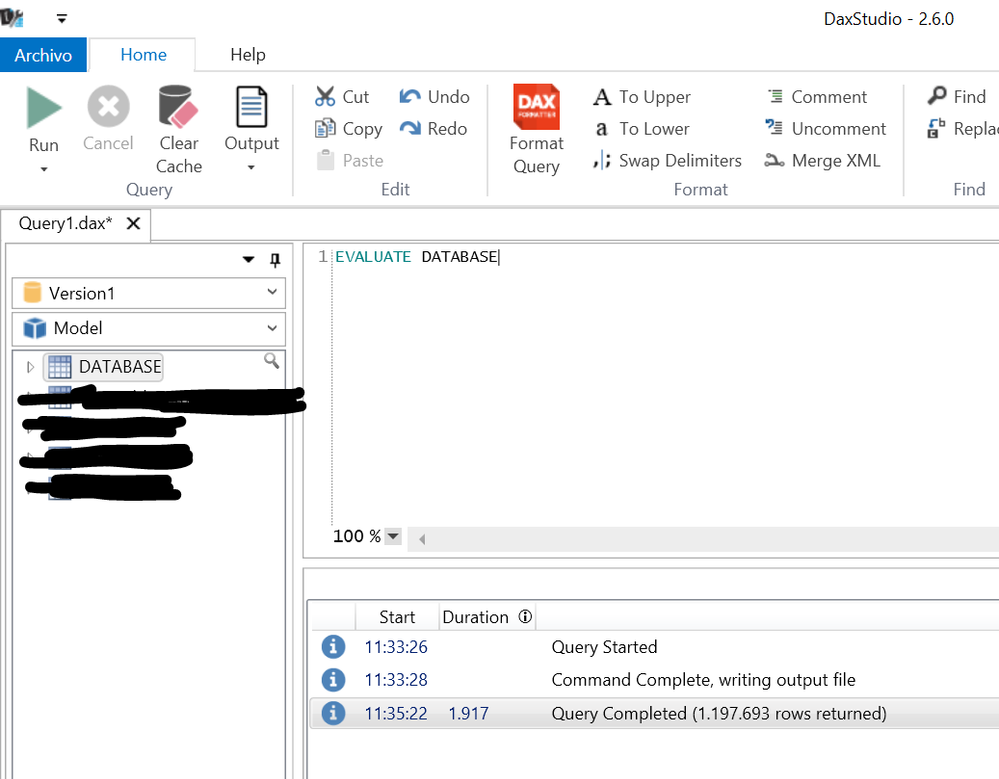

The easiest way is by using DAX Studio https://daxstudio.codeplex.com/

1. Connect DAX Studio to your Power BI

2. Type EVALUATE yourtable

3. Click Output --> File

4. Run

PS. works for more than 1M rows

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This effectively blocks out any professional. 150 K rows is also "nothing"

Limitations and Considerations

When you export data from a Power BI visual into Excel, keep the following considerations in mind:

- With a Power BI Pro license, users can export up to 150,000 rows of data for each export. For Power BI Free users, you can export up to 30,000 rows of data.

- Custom visuals, and R visuals, are not currently supported.

https://powerbi.microsoft.com/en-us/documentation/powerbi-service-export-to-excel/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@xprezz why would you want to take that much data from the presentation layer (a.k.a. powerbi visual) back into a "data source" layout? I don't know about your specific scenario but what I try to do usually is to create PowerBI reports where appropriate and additional Excel reports on the same datasource when necessary. From my point of view a well designed data store is key and can be used with any frontend

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Well Frank everybody's requirement are ofcourse different. I truely enjoy the ease of use in creating a good visual, but most often in my profession the visuals are used to monitor the overview and then when an interesting anomoly or sitution is discovered the drill down method is used to identify the culprit. So far all this is very standard.

Most often we need to filter (by some method or another) a specific BU or transactionperiod and then export a grouping for further analysis - in excel.

To solve the situation rigth now, I will split the file into 2 datasets and load into excel and perform the filter actions and then rejoin the two resulting files. I am in need of 540 K rows of the 1,3 MK rows

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you create an "Export"-query that compresses your data before exporting, you can expand the row-limit by factors up to 10000 (so 3.000.000.000 rows within the standard-edition): http://www.thebiccountant.com/2016/12/06/how-to-store-tables-longer-than-11-mio-rows-in-excel/

(This technique also works for PBI)

pls let me know if you need any help here to implement it for your case.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Would be nice to walk me through how to solve the function with the example provided on the web page if you please ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@xprezz, sorry, I missed your answer here.

1) Open the Excel-file from the blogpost

2) Copy the function "fnToJsonTable" to PBI and run it, passing the name of your table as an argument to it

3) Create a visual with that table and export

4) In the Excel-file: Import the exported csv-file (or the excel-table, if you've used PBI service)

5) Run the function "fnBackToTable", passing the name of the query from 4) to the function

Hope this helps, otherwise pls specify where the problem lies.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi imkeF.

I have some problems with the point 4 and 5.

Can you confirm me that this solution work to export data over 30k rows with power bi report published on PBI Report Server?

Thank you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's been a while when I last tested it, but then it worked.

But as so much changes, you should better test that yourself.

Would be great if you could report back here - thanks.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That was a brilliant solution.

Will have to study your solution and try this. I quite often come across datasets which are much larger than 1,1 MRows.

Once again thank you for sharing such a remarkable solution

Happy new year

Helpful resources

Join us at the Microsoft Fabric Community Conference

March 31 - April 2, 2025, in Las Vegas, Nevada. Use code MSCUST for a $150 discount! Prices go up Feb. 11th.

Join our Community Sticker Challenge 2025

If you love stickers, then you will definitely want to check out our Community Sticker Challenge!

Power BI Monthly Update - January 2025

Check out the January 2025 Power BI update to learn about new features in Reporting, Modeling, and Data Connectivity.

| User | Count |

|---|---|

| 144 | |

| 75 | |

| 63 | |

| 51 | |

| 48 |

| User | Count |

|---|---|

| 204 | |

| 86 | |

| 64 | |

| 59 | |

| 56 |