Join us at the 2025 Microsoft Fabric Community Conference

March 31 - April 2, 2025, in Las Vegas, Nevada. Use code MSCUST for a $150 discount! Early bird discount ends December 31.


Join Data on Date Range

I work for a customer service center where customers come into a lobby and are served using tickets (kind of like the old "take a number" system). In our office, we have two big datasets. The first is from our queueing system, and the second is from our cashiering system. These systems are not linked in any way. The queueing system has a table called history and the cashiering system has a table called transactions.

Here is what the queueing system's history table contains:

| StartDate | EndDate | CustomerID | DeskID |
|---|---|---|---|
| 09-14-2017 12:47 PM | 09-14-2017 1:02 PM | 101 | 12 |
| 09-14-2017 12:50 PM | 09-14-2017 1:16 PM | 102 | 15 |

The transactions table will contain something like this:

| TransactionDate | AmountPaid | DeskID |
|---|---|---|
| 09-14-2017 1:01 PM | 45.25 | 12 |
| 09-14-2017 1:14 PM | 102.60 | 15 |

Only one customer can complete a transaction at a given DeskID at any one time. Therefore, I should be able to join the data by checking the date range and the DeskID to determine which transaction belongs to which history entry. That would result in something like this:

history.StartDate | history.EndDate | transaction.TransactionDate | transaction.AmountPaid | DeskID | history.CustomerID
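The matching rule described above can be sketched in a few lines of Python, using the two sample rows from each table. This is only an illustration of the logic, not a Power BI or SQL implementation. The function name `range_join` is hypothetical, and the choice of an exclusive start and inclusive end for the time window is an assumption, since the post does not say how boundary cases should be treated.

```python
from datetime import datetime

# Sample rows copied from the two tables in the post.
history = [
    {"StartDate": datetime(2017, 9, 14, 12, 47), "EndDate": datetime(2017, 9, 14, 13, 2),
     "CustomerID": 101, "DeskID": 12},
    {"StartDate": datetime(2017, 9, 14, 12, 50), "EndDate": datetime(2017, 9, 14, 13, 16),
     "CustomerID": 102, "DeskID": 15},
]
transactions = [
    {"TransactionDate": datetime(2017, 9, 14, 13, 1), "AmountPaid": 45.25, "DeskID": 12},
    {"TransactionDate": datetime(2017, 9, 14, 13, 14), "AmountPaid": 102.60, "DeskID": 15},
]


def range_join(history_rows, transaction_rows):
    """Pair each transaction with the history row for the same desk
    whose time window contains the transaction time.

    Assumption: the window is (StartDate, EndDate], i.e. start exclusive,
    end inclusive. This compares every pair of rows, so it is simple but slow.
    """
    joined = []
    for h in history_rows:
        for t in transaction_rows:
            same_desk = h["DeskID"] == t["DeskID"]
            in_window = h["StartDate"] < t["TransactionDate"] <= h["EndDate"]
            if same_desk and in_window:
                joined.append({
                    "StartDate": h["StartDate"],
                    "EndDate": h["EndDate"],
                    "TransactionDate": t["TransactionDate"],
                    "AmountPaid": t["AmountPaid"],
                    "DeskID": h["DeskID"],
                    "CustomerID": h["CustomerID"],
                })
    return joined


rows = range_join(history, transactions)
for row in rows:
    print(row["CustomerID"], row["DeskID"], row["AmountPaid"])
```

Because every history row is compared with every transaction row, the work grows with the product of the two table sizes, which is one plausible reason a join like this feels slow on large tables.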

I have this working somewhat in SQL (both of these datasets are on SQL Azure) by doing a JOIN, but it is SLOW, and I'd rather bring the datasets into Power BI natively and then let Power BI join them. I just don't see a way of doing it.

Thoughts?

Reply to @shanebo3239:

You could implement similar JOIN logic in DAX by creating a calculated table.

    Table =
    FILTER (
        CROSSJOIN (
            SELECTCOLUMNS (
                history,
                "history.StartDate", history[StartDate],
                "history.Enddate", history[EndDate],
                "history.CustomerID", history[CustomerID],
                "history.DeskID", history[DeskID]
            ),
            transactions
        ),
        [history.DeskID] = transactions[DeskID]
            && transactions[TransactionDate] > [history.StartDate]
            && transactions[TransactionDate] <= [history.Enddate]
    )

However, I would be concerned about performance when the dataset is huge, because the cross join pairs every history row with every transaction row before filtering. I'd still suggest doing the JOIN in the Azure database. To improve performance there, create appropriate indexes and add a WHERE clause that narrows the date range, so less data has to be joined.
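To illustrate why indexing and a narrower date range help, here is a minimal Python sketch of the same match done with a per-desk sorted lookup instead of a cross join. It is not how SQL Azure or Power BI execute the join. The helper names `build_desk_index` and `find_visit`, the third history row, and the third transaction are all hypothetical additions for the example. The sketch assumes that visits at one desk never overlap, which follows from the statement that only one customer can transact at a desk at a time.

```python
from bisect import bisect_left
from collections import defaultdict
from datetime import datetime


def build_desk_index(history_rows):
    """Group visits by DeskID and sort each group by StartDate.

    Plays a role loosely similar to an index on (DeskID, StartDate).
    """
    by_desk = defaultdict(list)
    for h in history_rows:
        by_desk[h["DeskID"]].append(h)
    index = {}
    for desk_id, visits in by_desk.items():
        visits.sort(key=lambda h: h["StartDate"])
        index[desk_id] = ([h["StartDate"] for h in visits], visits)
    return index


def find_visit(index, desk_id, when):
    """Return the visit at desk_id with StartDate < when <= EndDate, or None.

    Assumes visits at the same desk do not overlap.
    """
    if desk_id not in index:
        return None
    starts, visits = index[desk_id]
    pos = bisect_left(starts, when) - 1  # last visit that started before `when`
    if pos < 0:
        return None
    visit = visits[pos]
    return visit if when <= visit["EndDate"] else None


history = [
    {"StartDate": datetime(2017, 9, 14, 12, 47), "EndDate": datetime(2017, 9, 14, 13, 2),
     "CustomerID": 101, "DeskID": 12},
    {"StartDate": datetime(2017, 9, 14, 12, 50), "EndDate": datetime(2017, 9, 14, 13, 16),
     "CustomerID": 102, "DeskID": 15},
    # Hypothetical extra visit, not from the original post.
    {"StartDate": datetime(2017, 9, 14, 13, 5), "EndDate": datetime(2017, 9, 14, 13, 20),
     "CustomerID": 103, "DeskID": 12},
]
transactions = [
    {"TransactionDate": datetime(2017, 9, 14, 13, 1), "AmountPaid": 45.25, "DeskID": 12},
    {"TransactionDate": datetime(2017, 9, 14, 13, 14), "AmountPaid": 102.60, "DeskID": 15},
    # Hypothetical transaction that falls outside every visit.
    {"TransactionDate": datetime(2017, 9, 14, 13, 30), "AmountPaid": 10.00, "DeskID": 12},
]

# Narrow the date range first, as a WHERE clause would.
window_start = datetime(2017, 9, 14)
window_end = datetime(2017, 9, 15)
in_window = [t for t in transactions
             if window_start <= t["TransactionDate"] < window_end]

index = build_desk_index(history)
customers = []
for t in in_window:
    visit = find_visit(index, t["DeskID"], t["TransactionDate"])
    customers.append(visit["CustomerID"] if visit else None)
print(customers)
```

Each transaction is resolved with one binary search within its own desk, so the cost no longer grows with the product of the two table sizes.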


Hi,

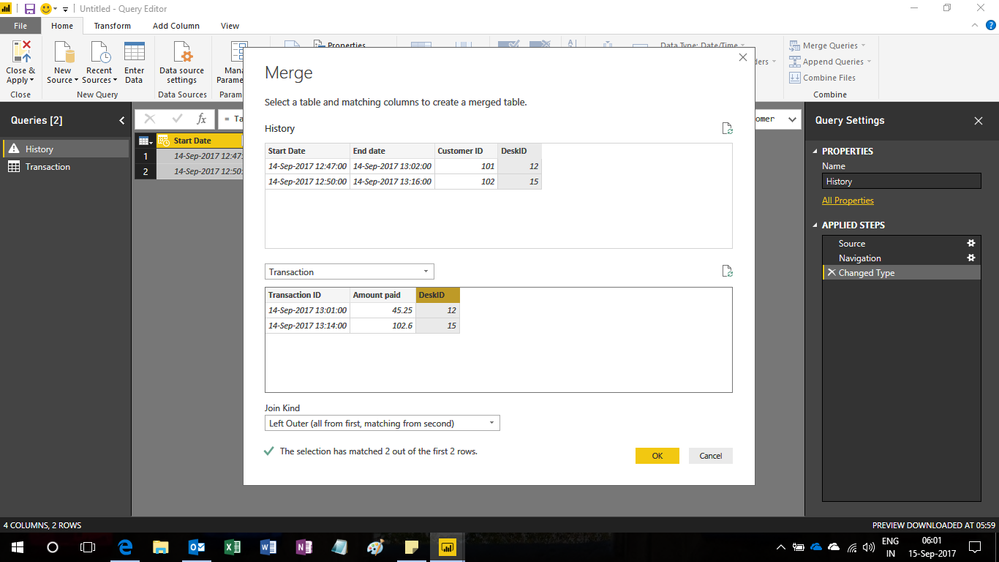

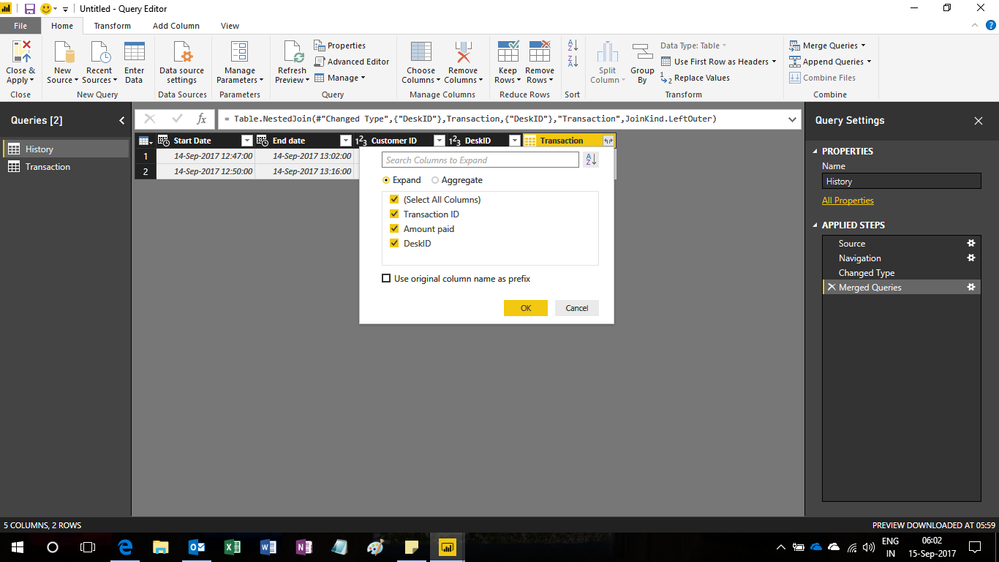

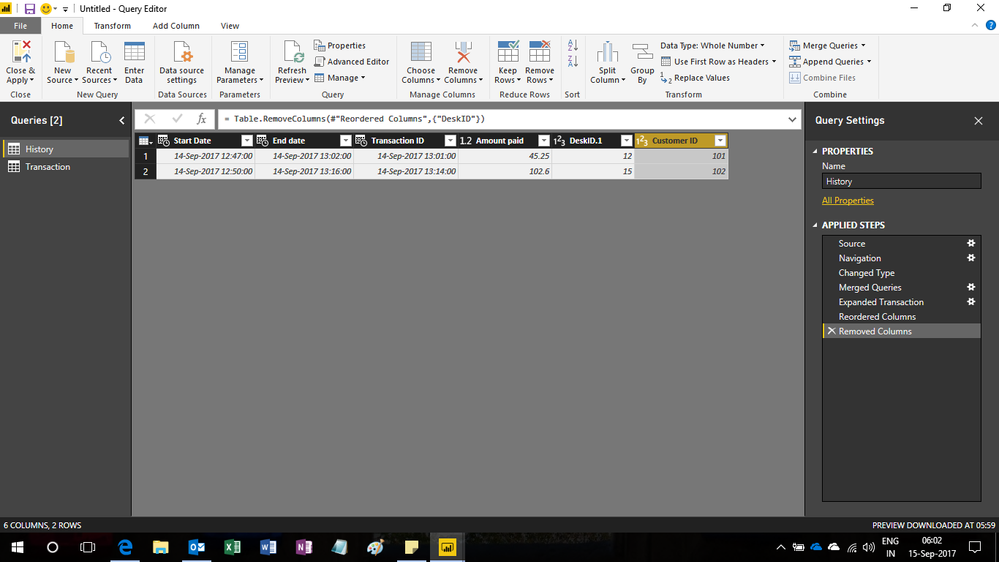

You can Merge queries. These screenshots should help.

Regards,

Ashish Mathur

http://www.ashishmathur.com

https://www.linkedin.com/in/excelenthusiasts/
