Fabric Data Days starts November 4th!

Advance your Data & AI career with 50 days of live learning, dataviz contests, hands-on challenges, study groups & certifications and more!

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Help optimizing DAX with SUMX and RELATEDTABLE

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Help optimizing DAX with SUMX and RELATEDTABLE

Hi everyone,

Here is the challenge. I have a table of donors, and a related table of mailings to those donors. I need to calculate the first-year cost of those mailings, on a per-donor basis (the cost of the mailings to each donor in the first year after each donor is added to the file).

I have some code that works, but I'm getting out of memory errors when I load in a few hundred million rows of data. Is there a more efficient way to perform this calculation?

This is the calculated column:

_______________

Year1 Cost=

CALCULATE (

SUMX (

RELATEDTABLE ( Mailing ),

Mailing[Cost]

),

FILTER (

RELATEDTABLE ( Mailing ),

Mailing[MailDate]<= Donor[AddDate] + 365

)

)

_______________

By the way, I also have to calculate Year 2 and Year 3 costs, so the memory issue is compounded.

Thanks for any suggestions!

Dan

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous,

Based on your description, I’d like suggest you to try below methods:

1. Use “Direct Query” mode to connect data.

If you use SSAS database, you can create the measure on database side. If you use another database, you can try to use “Stored Procedures”/”Custom function” or other to instead of the measure.

2. Use “Query Editor” to filter the data range.(“Import Data Mode”)

Sample: You want to get the “2016” data, use query editor to filter the records to which you need.

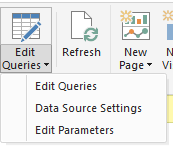

1. Connect to the database and choose the selected table, click edit button to open the query editor.

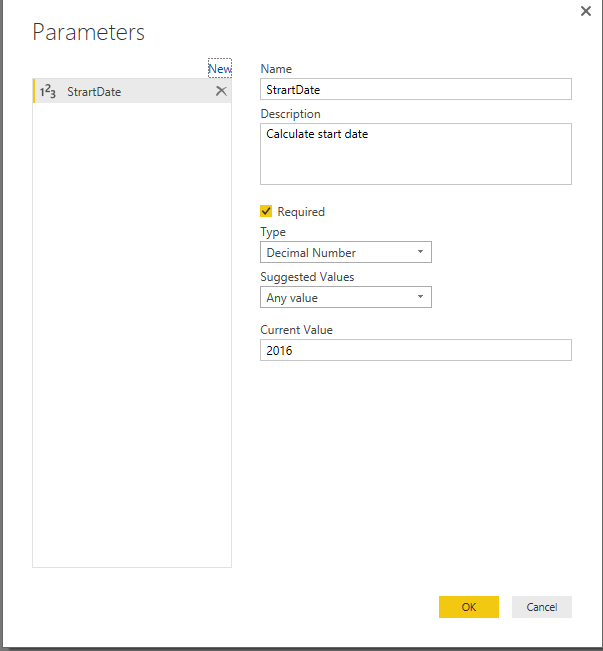

2. Add parameters.

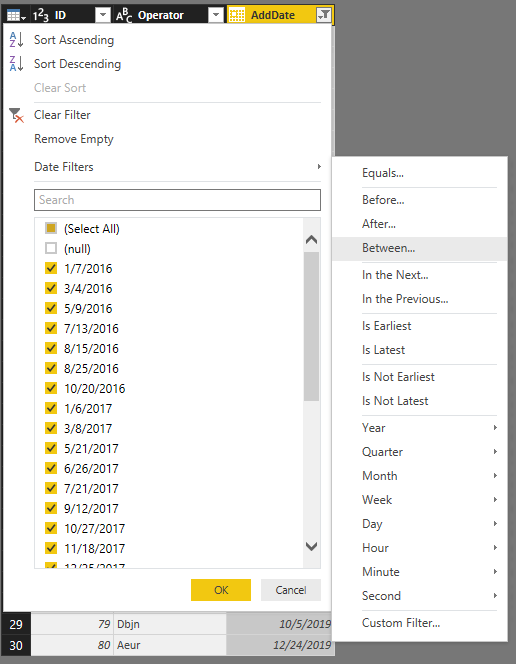

3. Enable the filter on donor table.

4. Add two date to the conditions and click “Ok” to general the formula:

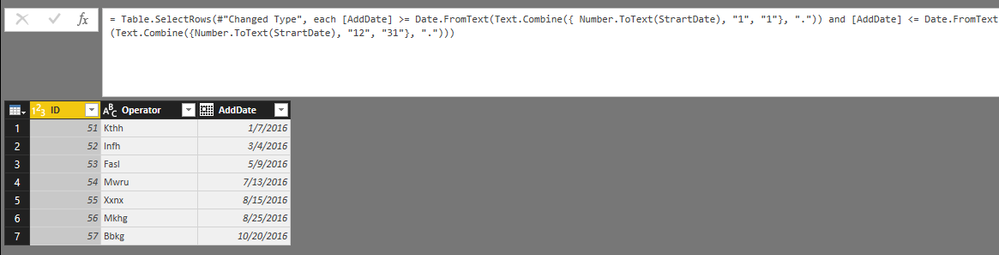

5. Modify the query to enable the parameter:

= Table.SelectRows(#"Changed Type", each [AddDate] >= Date.FromText(Text.Combine({ Number.ToText(StrartDate),"1","1"}, ".")) and [AddDate] <= Date.FromText(Text.Combine({Number.ToText(StrartDate), "12", "31"},".")))

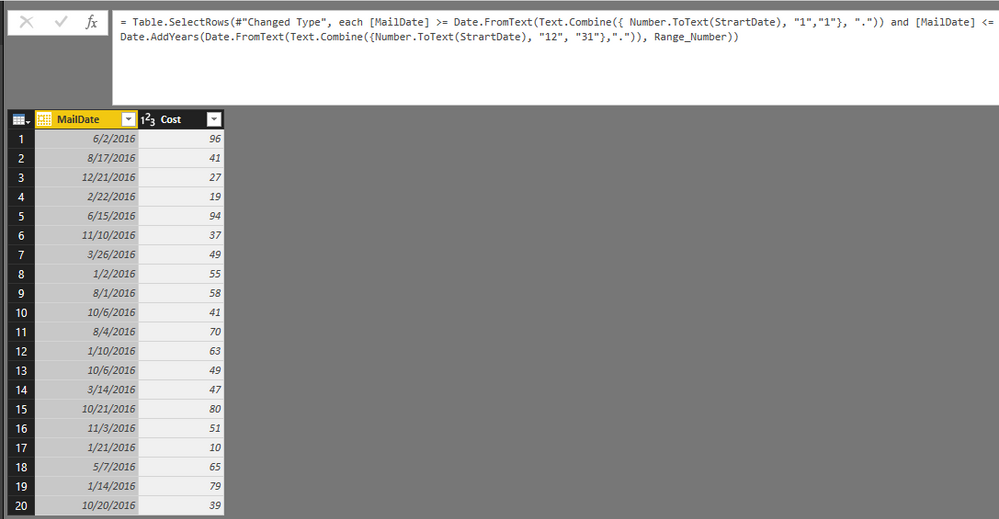

6. Do the same operation on mailing table:

= Table.SelectRows(#"Changed Type", each [MailDate] >= Date.FromText(Text.Combine({ Number.ToText(StrartDate), "1","1"}, ".")) and [MailDate] <=

Date.AddYears(Date.FromText(Text.Combine({Number.ToText(StrartDate), "12", "31"},".")), Range_Number))

7.Click “Close & Apply” to save these steps.

Then you can modify the parameters to control the records range.

Regards,

Xiaoxin Sheng

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous,

Based on your description, I’d like suggest you to try below methods:

1. Use “Direct Query” mode to connect data.

If you use SSAS database, you can create the measure on database side. If you use another database, you can try to use “Stored Procedures”/”Custom function” or other to instead of the measure.

2. Use “Query Editor” to filter the data range.(“Import Data Mode”)

Sample: You want to get the “2016” data, use query editor to filter the records to which you need.

1. Connect to the database and choose the selected table, click edit button to open the query editor.

2. Add parameters.

3. Enable the filter on donor table.

4. Add two date to the conditions and click “Ok” to general the formula:

5. Modify the query to enable the parameter:

= Table.SelectRows(#"Changed Type", each [AddDate] >= Date.FromText(Text.Combine({ Number.ToText(StrartDate),"1","1"}, ".")) and [AddDate] <= Date.FromText(Text.Combine({Number.ToText(StrartDate), "12", "31"},".")))

6. Do the same operation on mailing table:

= Table.SelectRows(#"Changed Type", each [MailDate] >= Date.FromText(Text.Combine({ Number.ToText(StrartDate), "1","1"}, ".")) and [MailDate] <=

Date.AddYears(Date.FromText(Text.Combine({Number.ToText(StrartDate), "12", "31"},".")), Range_Number))

7.Click “Close & Apply” to save these steps.

Then you can modify the parameters to control the records range.

Regards,

Xiaoxin Sheng

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi I would like to suggest you to test your data model in DAX Studio to find out the memory outage issue. Can you please post the every single detail of the data model including no. of rows in tables, your machine memory and any calculated columns you have created...

This would help us to test the impact of various calculations in your data model. DAX Studio/SQL profiler will give you more insights regarding where you need to make adjustments depending on which you can design your calculation.

Thanks & Regards,

Bhavesh

Bhavesh

Love the Self Service BI.

Please use the 'Mark as answer' link to mark a post that answers your question. If you find a reply helpful, please remember to give Kudos.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks Bhavesh. The out-of-memory errors occur when I am processing the model, and I believe they are due to the calculated columns. How would I go about testing the model in DAX studio? I know how to test the server timing on measures, but since the columns are calculated at the time the model is processed, is there a way to capture the server timing in DAX Studio for those calculations?

Thanks,

Dan

Helpful resources

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!

Power BI Monthly Update - October 2025

Check out the October 2025 Power BI update to learn about new features.