FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

View all the Fabric Data Days sessions on demand. View schedule

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Forcing Vertipaq to update data type encoding

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Forcing Vertipaq to update data type encoding

Hi guys!

I'm working on a PBIX with a large dataset, and trying to keep under the 1GB compressed limit. My largest fact table has a cardinality of 28 million, with an integer primary key.

Problem is, no matter what I try, Vertipaq keeps encoding the primary key column as HASH, instead of VALUE, which eats up space. I have cast it as an integer upstream, in the database layer; I have changed its datatype in Power Query to a whole number; I have deleted and re-built the table numerous times.

I can't find any definitive documentation on the steps needed to update the encoding on a single integer column. What I have found states that Integer = VALUE encoding by default, yet that doesn't seem to be the case here. What am I missing? Is there a limit on the cardinality that overrides this? Is it really necessary to delete the table and re-build it every time I make a change?

Once again, the lack of clear and complete documentation is causing me to burn excess man-hours trying to figure this out.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

After the first scan, if it sees an outlier that can trigger a reencoding.

"Once the decision is made, SSAS start to compress the column using the chosen algorithm. Occasionally, values that were not in the original sampling can cause the process to require reevaluation. For example, SAS might read a few million rows in which the values are in the range of 100-200, making value encoding the best choice. However, after those millons of rows, an outlier might suddenly appear - for example a large number like 60,000,000 ~ triggering a reencoding of the column."

This is from "Tabular Modeling in Microsoft SQL Server Analysis Services" 2nd edition. p 356

Based on that I think you want them to be as close to sequential as they can be. We have even gone so far as to replace a key column that was Client_Claim_Seq_ID like "ABC000012357007" with an integer identity on the master table where the data is inserted so it would help it to be VALUE encoded since the identity on the table increments by 1 with each record insert.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Since trial and error seems to be the only way to truly understand how PBI works under the hood, I'm spending more time today tinkering.

Theory: The encoding depends upon the absolute cardinality.

Experiment: Load the same columns from the same table, including my integer PK, but differing number of rows.

Outcomes:

- Loading just the first 100,000 rows: VALUE encoding

- Loading just the first 5,000,000 rows: VALUE encoding

- Loading just the first 15,000,000 rows: VALUE encoding

- Loading just the first 20,000,000 rows: VALUE encoding

- Loading just the first 28,000,000 rows: VALUE encoding

- Loading all 28,032,233 rows: HASH encoding

However, based on what @jdbuchanan71 said upthread, I noticed that the top 1% of my rows - the last 28,000 or so - have integer PKs that are significantly larger than the majority of the table. In other words, there's a big gap in my PK structure.

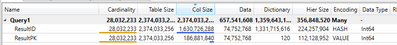

So I created a surrogate PK, using ROW_NUMBER(), that guarantees that the surrogates are a) much smaller in value, and b) absolutely sequential and consecutive. Re-loading all 28,032,233 rows and including the new surrogate PK column alongside the original PK from the database tables, we have a winner! In this screenshot, the "ResultID" column is my original PK from the database, and the "ResultPK" column is my new sequential surrogate PK. That's a nice size savings!

I have also learned from this exercise that the Vertipaq engine will re-assess the encoding with each change of source query and reload. That's good to know.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That is a great space save! Glad you were able to get it to work.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

After the first scan, if it sees an outlier that can trigger a reencoding.

"Once the decision is made, SSAS start to compress the column using the chosen algorithm. Occasionally, values that were not in the original sampling can cause the process to require reevaluation. For example, SAS might read a few million rows in which the values are in the range of 100-200, making value encoding the best choice. However, after those millons of rows, an outlier might suddenly appear - for example a large number like 60,000,000 ~ triggering a reencoding of the column."

This is from "Tabular Modeling in Microsoft SQL Server Analysis Services" 2nd edition. p 356

Based on that I think you want them to be as close to sequential as they can be. We have even gone so far as to replace a key column that was Client_Claim_Seq_ID like "ABC000012357007" with an integer identity on the master table where the data is inserted so it would help it to be VALUE encoded since the identity on the table increments by 1 with each record insert.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @jdbuchanan71 ! But - already tried that. I had seen the mention of ranking them in some Microsoft documentation somewhere, but that too failed to force the engine to switch it to VALUE. Do they need to be consecutive, with no gaps in between? Do the values need to be spaced evenly (consistent gap between them)? Or is it sufficient for them to simply be ranked in ascending order? Once again, the documentation doesn't state, and the behavior doesn't match what the documentation does state.

I'm still wondering exactly what it takes to trigger a re-evaluation by the engine. One document says that the engine will evaluate based on the "first scan" of the table. Does that mean that it uses those values from then on, regardless of changes that I make in the upstream layer? Or does "first scan" mean "first scan after each change is made" (which doesn't exactly make much sense to me)?

I will look into the "Available in MDX"; haven't seen mention of that before, so thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You might try sorting the primary key column on the upstream source. Vertipaq makes it encoding decision based on a sample of the first rows (I'm not sure of the sample size) but if it sees a series like 1, 2, 3, 1297956, 4, 5 it will pick HASH. Sorting it should stop it from running into that scenario. You can also set an encoding hint on a column using Tabular Editor which may help. You can also look at turning off 'Available in MDX' which will save you the heirarchy size for the key column.

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!