FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Finding Average Item rate while grouping by It...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Finding Average Item rate while grouping by Item code for a date range (slicer)

Hi guys

Scratching my head with this one.

We have the odd situation where agents will enter dummy values for the Item Rate "£0.00" and "£2.22" on sales orders.

After the sales are signed off, we then have to go into the orders and correct these dummy orders by working out the average Item Rate per Item code from all the other sales orders, and then we use this as the Item Rate instead of the dummy values.

For example see same data below

| Document Number | Date | Item | Item Rate | Originally Ordered Qty | Scheduled Sell |

| SO0235961 | 25/04/2022 | 2 | £0.81 | 500 | £405.75 |

| SO0235993 | 25/04/2022 | 2 | £0.85 | 500 | £426.10 |

| SO0235994 | 25/04/2022 | 2 | £0.85 | 500 | £426.10 |

| SO0235995 | 25/04/2022 | 2 | £0.00 | 500 | £0.00 |

| SO0236179 | 24/04/2022 | 2 | £0.96 | 600 | £577.74 |

| SO0234542 | 26/04/2022 | 3 | £0.00 | 553 | £0.00 |

| SO0240064 | 26/04/2022 | 3 | £1.45 | 1747 | £2,532.28 |

| SO0233996 | 24/04/2022 | 3 | £1.45 | 4053 | £5,876.85 |

| SO0234139 | 21/04/2022 | 3 | £1.45 | 1686 | £2,444.70 |

| SO0236394 | 26/04/2022 | 3 | £2.22 | 1021 | £2,266.62 |

| SO0236448 | 21/04/2022 | 3 | £2.22 | 243 | £539.46 |

| SO0241025 | 24/04/2022 | 3 | £0.00 | 921 | £0.00 |

| SO0241411 | 24/04/2022 | 3 | £2.22 | 463 | £1,027.86 |

| SO0241428 | 21/04/2022 | 3 | £0.00 | 782 | £0.00 |

| SO0241435 | 21/04/2022 | 3 | £2.22 | 1350 | £2,997.00 |

| SO0236975 | 26/04/2022 | 5 | £0.65 | 3716 | £2,415.40 |

| SO0236976 | 26/04/2022 | 5 | £0.00 | 1372 | £0.00 |

| SO0236977 | 21/04/2022 | 5 | £0.65 | 2999 | £1,949.35 |

| SO0236986 | 26/04/2022 | 5 | £0.65 | 1600 | £1,040.00 |

| SO0236987 | 21/04/2022 | 5 | £0.00 | 1998 | £0.00 |

| SO0236988 | 21/04/2022 | 5 | £0.65 | 3499 | £2,274.35 |

| SO0237010 | 24/04/2022 | 5 | £0.65 | 2025 | £1,316.25 |

| SO0240441 | 24/04/2022 | 5 | £0.96 | 474 | £455.66 |

| SO0240447 | 21/04/2022 | 5 | £0.96 | 3367 | £3,236.70 |

| SO0242788 | 21/04/2022 | 5 | £2.22 | 500 | £1,110.00 |

| SO0242790 | 21/04/2022 | 5 | £2.22 | 1252 | £2,779.44 |

So for Item code 3 we first remove all rows where the Item Rate is £0.00 or £2.22 leaving the following:

| Document Number | Date | Item | Item Rate | Originally Ordered Qty | Scheduled Sell |

| SO0240064 | 22/04/2022 | 3 | £1.45 | 1747 | £2,532.28 |

| SO0233996 | 24/04/2022 | 3 | £1.45 | 4053 | £5,876.85 |

| SO0234139 | 21/04/2022 | 3 | £1.45 | 1686 | £2,444.70 |

We then total the Originally Ordered Qty and Delivered Sell for the remaining orders

Originally Ordered Qty Total = 7486

Scheduled Sell Total = £10,853.83

Then divide the Scheduled Sell Total / Originally Ordered Qty Total to get the Average Item Rate = £1.45

You then have to put the removed lines back in and add a column with the Average Item Rate you've just calculated.

Now using the new Average Item Rate I can calculate the new "Average Scheduled Sell" price.

| Document Number | Date | Item | Item Rate | Originally Ordered Qty | Scheduled Sell | Average Item Rate | Average Scheduled Sell |

| SO0234542 | 21/04/2022 | 3 | £0.00 | 553 | £0.00 | £1.45 | £801.85 |

| SO0240064 | 22/04/2022 | 3 | £1.45 | 1747 | £2,532.28 | £1.45 | £2533.15 |

| SO0233996 | 24/04/2022 | 3 | £1.45 | 4053 | £5,876.85 | £1.45 | £5876.85 |

| SO0234139 | 21/04/2022 | 3 | £1.45 | 1686 | £2,444.70 | £1.45 | £2444.70 |

| SO0236394 | 22/04/2022 | 3 | £2.22 | 1021 | £2,266.62 | £1.45 | £1480.45 |

| SO0236448 | 24/04/2022 | 3 | £2.22 | 243 | £539.46 | £1.45 | £352.35 |

| SO0241025 | 24/04/2022 | 3 | £0.00 | 921 | £0.00 | £1.45 | £1335.45 |

| SO0241411 | 21/04/2022 | 3 | £2.22 | 463 | £1,027.86 | £1.45 | £671.35 |

| SO0241428 | 22/04/2022 | 3 | £0.00 | 782 | £0.00 | £1.45 | £1133.90 |

| SO0241435 | 24/04/2022 | 3 | £2.22 | 1350 | £2,997.00 | £1.45 | £1957.50 |

This all needs to be grouped by the Item code and affected by the Date range on the page slicer.

I've only managed to get part way there by duplicating the orders table and manipulating it in power query but this is incredibly slow and is not affected by the date as it takes the average across all orders.

Anyone brave enough to have a go? 🙂

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Da Geemta

I think this is what you are asking for.

Create a table visual with item number and then the Ave rate.

Please click thumbs up and Accept As Solution because I was "brave enough" to have a go 😀

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi again DaGemsta

Firstly you are being a bit cheeky adding a new problem to the ticket. 😀

Please raise 1 problem per ticket. If you need to change or expand the ticket because you did not ask the right question, them accept the solution and raise a new ticket and give the solver twice the kudos scores for doing twice the work. Thank you. 👍

Secondly, you have now mention a "Received" field which is not is you example data ! 🤔

May I suggest that you never use / in Power BI.

Always use DIVIDE to capature any divsion by zero errors. 😇

I cant answer you exact questioin because you hace not provided the "Received" field, but I am pretty sure this info will help.

Look at these 2 measures carefully:-

Sum value = SUM('Table'[Originally Ordered Qty]) * [Ave rate]

Both will return the same answer for each a row in table visual with

Document number, Date, Item, Item Rate, Ave Rate, Sum value, Sumxvalue.

However, only the Sumx value will return the correct sub totals and grand totals.

This is because SUMX is an iterator. see https://docs.microsoft.com/en-us/dax/sumx-function-dax

Click here to download my example

Now please smash those thumbs up and Accept As Solution buttons to give kudos for helping you. Thank you! 😎

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi again DaGemsta

Firstly you are being a bit cheeky adding a new problem to the ticket. 😀

Please raise 1 problem per ticket. If you need to change or expand the ticket because you did not ask the right question, them accept the solution and raise a new ticket and give the solver twice the kudos scores for doing twice the work. Thank you. 👍

Secondly, you have now mention a "Received" field which is not is you example data ! 🤔

May I suggest that you never use / in Power BI.

Always use DIVIDE to capature any divsion by zero errors. 😇

I cant answer you exact questioin because you hace not provided the "Received" field, but I am pretty sure this info will help.

Look at these 2 measures carefully:-

Sum value = SUM('Table'[Originally Ordered Qty]) * [Ave rate]

Both will return the same answer for each a row in table visual with

Document number, Date, Item, Item Rate, Ave Rate, Sum value, Sumxvalue.

However, only the Sumx value will return the correct sub totals and grand totals.

This is because SUMX is an iterator. see https://docs.microsoft.com/en-us/dax/sumx-function-dax

Click here to download my example

Now please smash those thumbs up and Accept As Solution buttons to give kudos for helping you. Thank you! 😎

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Awesome Source!!! Thanks Speedy 😁

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi again DeGemsta

I am on the mobile phone at the moment and will reply properly when I get chance on the laptop.

It sounds like you may need to use the SUMX iterator.

SUMX is a classic method for getting grand totals from multiple rows with value = unit price x quantity.

Obviously you cant SUM unit price x quantity. That wont make sense.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks! Looking forward to it 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Da Geemta

I think this is what you are asking for.

Create a table visual with item number and then the Ave rate.

Please click thumbs up and Accept As Solution because I was "brave enough" to have a go 😀

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

lol Thanks speedramps 🙂

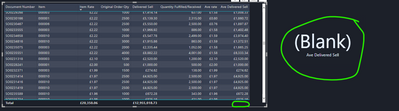

I've added a measure to work out delivered sell using the ave rate you generated

The above works great on the one visual but I can't total it and I can't then use it to calculate the net profit etc for the report.

Am I doing something wrong?

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!