- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Find a single specific value in column and create ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Find a single specific value in column and create new column populated with this value

I am trying to automate part of the update of a dashboard we have that uses flat files.

the file looks something like this:

Scenario | Optimistic |

|

Date | 12/24/2020 |

|

|

|

|

Curreny | Product | Sales |

USD | A | 320 |

EUR | B | 121 |

Cl | A | 198032 |

USD | A | 231 |

EUR | B | 536 |

These flat files are dropped in an update folder and then appended.

I am aware that the script has to be created in the Helper Queries > Transform Sample File in order to apply it to every file that is in the update folder.

I was able to remove top rows (4) and promote 1 row as headers. Nonetheless, before this, I need to add a column named scenario and date with the data displayed so I can identify them after the append.

So the table should look like this before applying the removal of top rows and promotion of headers:

Column 1 | Column 2 | Column 3 | Column 4 | Column 5 |

Scenario | Optimistic | Null | Optimistic | 12/24/2020 |

Date | 12/24/2020 | Null | Optimistic | 12/24/2020 |

Null | Null | Null | Optimistic | 12/24/2020 |

Curreny | Product | Sales | Optimistic | 12/24/2020 |

USD | A | 320 | Optimistic | 12/24/2020 |

EUR | B | 121 | Optimistic | 12/24/2020 |

Cl | A | 198032 | Optimistic | 12/24/2020 |

USD | A | 231 | Optimistic | 12/24/2020 |

EUR | B | 536 | Optimistic | 12/24/2020 |

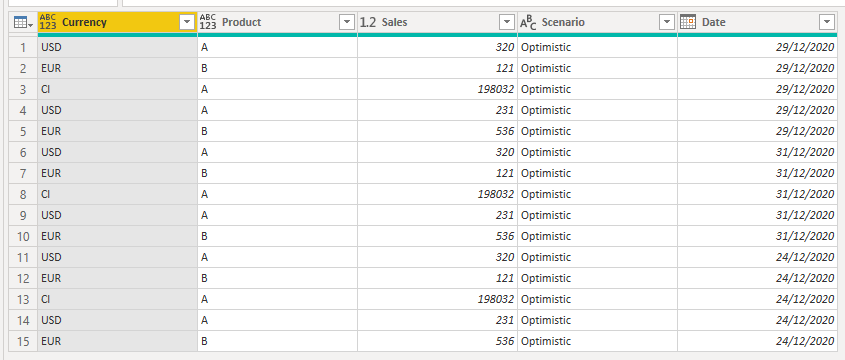

And then like this after applying script (also adding the names of the columns):

Curreny | Product | Sales | Scenario | Date |

USD | A | 320 | Optimistic | 12/24/2020 |

EUR | B | 121 | Optimistic | 12/24/2020 |

Cl | A | 198032 | Optimistic | 12/24/2020 |

USD | A | 231 | Optimistic | 12/24/2020 |

EUR | B | 536 | Optimistic | 12/24/2020 |

Or this is how I picture the process in my head.

Could you guys help me out with this? or do you have a simpler process in mind?

Regards! and thanks in advance.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you edit the Transform Sample File Query you can acheve this. Here's an example PBIX file with the following code. You will need to edit the Source step in the Sample File query to point to your own folder.

1. After importing the sample file, add a column picking the scenario type from Column1 and then Fill Down that column.

2. Remove the top row. You will need to adjust your later queries to only remove 3 rows, you mentioned you were already removing 4.

3. Add a column with the date from this file, from Column2

4. Fill Down the newly added date column

These changes will now be applied to all files you import from that folder.

The Transform Sample File query will look like this

let

Source = Csv.Document(Parameter1,[Delimiter=" ", Columns=3, Encoding=65001, QuoteStyle=QuoteStyle.None]),

#"Added Custom" = Table.AddColumn(Source, "Custom", each if [Column1] = "Scenario" then [Column2] else null),

#"Filled Down" = Table.FillDown(#"Added Custom",{"Custom"}),

#"Removed Top Rows" = Table.Skip(#"Filled Down",1),

#"Added Custom1" = Table.AddColumn(#"Removed Top Rows", "Custom1", each if [Column1] ="Date" then [Column2] else null),

#"Filled Down1" = Table.FillDown(#"Added Custom1",{"Custom1"})

in

#"Filled Down1"

Giving results like this

Regards

Phil

Did I answer your question? Then please mark my post as the solution.

If I helped you, click on the Thumbs Up to give Kudos.

Blog :: YouTube Channel :: Connect on Linkedin

Proud to be a Super User!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you edit the Transform Sample File Query you can acheve this. Here's an example PBIX file with the following code. You will need to edit the Source step in the Sample File query to point to your own folder.

1. After importing the sample file, add a column picking the scenario type from Column1 and then Fill Down that column.

2. Remove the top row. You will need to adjust your later queries to only remove 3 rows, you mentioned you were already removing 4.

3. Add a column with the date from this file, from Column2

4. Fill Down the newly added date column

These changes will now be applied to all files you import from that folder.

The Transform Sample File query will look like this

let

Source = Csv.Document(Parameter1,[Delimiter=" ", Columns=3, Encoding=65001, QuoteStyle=QuoteStyle.None]),

#"Added Custom" = Table.AddColumn(Source, "Custom", each if [Column1] = "Scenario" then [Column2] else null),

#"Filled Down" = Table.FillDown(#"Added Custom",{"Custom"}),

#"Removed Top Rows" = Table.Skip(#"Filled Down",1),

#"Added Custom1" = Table.AddColumn(#"Removed Top Rows", "Custom1", each if [Column1] ="Date" then [Column2] else null),

#"Filled Down1" = Table.FillDown(#"Added Custom1",{"Custom1"})

in

#"Filled Down1"

Giving results like this

Regards

Phil

Did I answer your question? Then please mark my post as the solution.

If I helped you, click on the Thumbs Up to give Kudos.

Blog :: YouTube Channel :: Connect on Linkedin

Proud to be a Super User!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@carlosgmeyer You can reference previous steps within the same query, so start by getting it to a table with one row and two columns: Scenario, Date

Then go back and get the other three columns: Currency, Product, Sales

Then add a new custom column that references that single row table.

Paste this code into Advanced Editor to see what I mean (it might need some adaptation for your file):

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WckksSVXSUTI00jcy0TcyMDIAcpRidaKVQDSU6VxaVJSaVwnkBhTlp5QmlwBZwYk5qcVg2dBgFyDfEYiNgbpBIq6hQUCeE9hYQ4gJOVAlhpYWBsZGaPqMjA3R9JkamynFxgIA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Scenario = _t, Optimistic = _t, #"(blank)" = _t]),

#"Demoted Headers" = Table.DemoteHeaders(Source),

#"Changed Type" = Table.TransformColumnTypes(#"Demoted Headers",{{"Column1", type text}, {"Column2", type text}, {"Column3", type text}}),

#"Kept First Rows" = Table.FirstN(#"Changed Type",2),

#"Removed Other Columns" = Table.SelectColumns(#"Kept First Rows",{"Column1", "Column2"}),

#"Transposed Table" = Table.Transpose(#"Removed Other Columns"),

#"Promoted Headers" = Table.PromoteHeaders(#"Transposed Table", [PromoteAllScalars=true]),

ScenarioDate = Table.TransformColumnTypes(#"Promoted Headers",{{"Scenario", type text}, {"Date", type date}}),

#"Raw Data" = #"Changed Type",

#"Removed Top Rows" = Table.Skip(#"Raw Data",3),

#"Promoted Headers1" = Table.PromoteHeaders(#"Removed Top Rows", [PromoteAllScalars=true]),

#"Changed Type2" = Table.TransformColumnTypes(#"Promoted Headers1",{{"Curreny", type text}, {"Product", type text}, {"Sales", Int64.Type}}),

#"Added Custom" = Table.AddColumn(#"Changed Type2", "Scenario", each #"ScenarioDate"),

#"Expanded Scenario" = Table.ExpandTableColumn(#"Added Custom", "Scenario", {"Scenario", "Date"}, {"Scenario.1", "Date"})

in

#"Expanded Scenario"

Please @mention me in your reply if you want a response.

Copying DAX from this post? Click here for a hack to quickly replace it with your own table names

Has this post solved your problem? Please Accept as Solution so that others can find it quickly and to let the community know your problem has been solved.

If you found this post helpful, please give Kudos C

I work as a Microsoft trainer and consultant, specialising in Power BI and Power Query.

www.excelwithallison.com

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.

| User | Count |

|---|---|

| 107 | |

| 94 | |

| 75 | |

| 63 | |

| 62 |

| User | Count |

|---|---|

| 143 | |

| 104 | |

| 104 | |

| 80 | |

| 66 |