
Faster API updates

Good day,

We get data from Walkscore for our properties through an API. Every month, I update our properties with a new table in Power Query, re-add the custom columns containing the API code, and save. When I do so, my file is out of commission for the next ten minutes while it fetches data from the API. The same applies when I save and close, and when I refresh the data from the Home screen.

(I ran the same table in DAX Studio, and it only took 36 milliseconds.)

How can I make our API run faster??



Yep, dataflows have been in General Availability since April 2019, and it's generally considered a best practice to at least stage, and often transform, your source data in them, upstream of datasets. This gives you separation of concerns, with ETL in dataflows and data modeling in datasets.

I'd do the raw API calls in one dataflow, or if there's a lot of historical data I'd partition API calls in two dataflows (historical + refreshable). I'd then input that into another dataflow where additional logic (e.g. calculated columns) is performed, and that's what I would finally ingest in the dataset in Power BI Desktop.

You don't have to do it this way, but this is my considered advice based on having been there and done that quite a few times.
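To make the historical + refreshable split concrete, here is a rough sketch in Python rather than Power Query, purely as an illustration of the idea and not of how dataflows work internally. The names `refresh_scores` and `fake_fetch_score` and the sample addresses are hypothetical, and the fetch function is a stand-in that makes no real API call. The point is only that scores already staged are reused, so the slow call runs just for properties that are new.

```python
from typing import Callable, Dict, Iterable


def refresh_scores(
    addresses: Iterable[str],
    historical: Dict[str, int],
    fetch_score: Callable[[str], int],
) -> Dict[str, int]:
    """Combine staged scores with newly fetched ones; only new addresses hit the API."""
    # "Refreshable" partition: addresses that have no staged score yet.
    # dict.fromkeys de-duplicates while preserving the input order.
    new_addresses = [a for a in dict.fromkeys(addresses) if a not in historical]
    refreshable = {a: fetch_score(a) for a in new_addresses}
    # Downstream step: merge both partitions into what the model would ingest.
    return {**historical, **refreshable}


# Stand-in for the real API call (hypothetical, no network access).
calls = []


def fake_fetch_score(address: str) -> int:
    calls.append(address)
    return len(address)  # placeholder value, not a real score


historical = {"1 Main St": 80, "2 Oak Ave": 65}  # already staged
addresses = ["1 Main St", "2 Oak Ave", "3 Pine Rd"]  # this month's list

scores = refresh_scores(addresses, historical, fake_fetch_score)
print(scores)  # all three addresses have a score
print(calls)   # only the new address triggered a fetch
```

In dataflow terms, `historical` would correspond to the dataflow that is rarely refreshed, and the fetch for new addresses to the one that refreshes on a schedule.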

1. How to get your question answered quickly - good questions get good answers!

2. Learning how to fish > being spoon-fed without active thinking.

3. Please accept as a solution posts that resolve your questions.

------------------------------------------------

BI Blog: Datamarts | RLS/OLS | Dev Tools | Languages | Aggregations | XMLA/APIs | Field Parameters | Custom Visuals


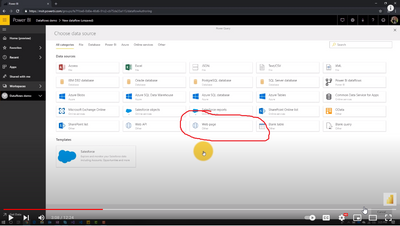

Thanks for the reply. Do you mean like this:

Adding the API as a datasource in the Service:

And then connecting to it once I'm back in the desktop?

I want to do all my work in the desktop.


Yes, that's what I mean, though those screenshots are old as dataflows went out of beta years ago.

If you don't want to use dataflows, then don't. I guess being locked out of Power BI Desktop for ten minutes is not such a big deal after all.


I just copied the screenshots off a 2018 instructional video.

https://www.youtube.com/watch?v=veuxofp0ZIg

I do want to circumvent these constant updates. I just added a calculated column to the table, and that alone prompted another 10-minute call to the API.


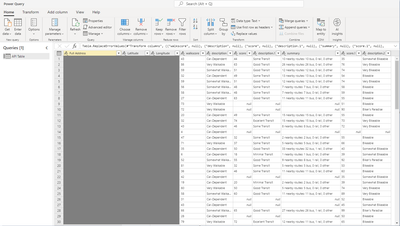

So I've put my API in the service, but I'm not sure how to integrate this into my data model. It's a POST request, so I have brought the necessary address columns into the service so that it can work out which scores it needs to return.

Now when I'm back in the desktop, I have connected to the dataflow, but it returns nothing, even after I refresh in both the service and desktop.

How do I get it to populate?


Have you saved and refreshed the dataflow? It's distinct from dataset refreshes.


Yes I had... but I just ran it again, and now it's working. Thanks.


Sounds like you're doing all of this in Power BI Desktop, correct? If that's the case, consider moving your API calls to dataflows. You might have to adapt your Power Query code so that it can work in the service, but in my experience it's well worth it.
