Jumpstart your career with the Fabric Career Hub

Find everything you need to get certified on Fabric—skills challenges, live sessions, exam prep, role guidance, and more.

Get started- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Grow your Fabric skills and prepare for the DP-600 certification exam by completing the latest Microsoft Fabric challenge.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Expand datetime range to four hour periods

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Expand datetime range to four hour periods

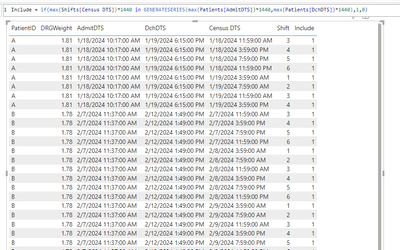

Hi. I have rows in a data table with patient id, admit datetime and discharge datetime. My goal is to count patients and create other measures in four-hour intervals so I need to explode these rows out to have a row for each four hour period. Think of dividing the day into four hour shifts, like this:

| Shift | Period |

| 1 | 12AM-4AM |

| 2 | 4AM-8AM |

| 3 | 8AM-12PM |

| 4 | 12PM-4PM |

| 5 | 4PM-8PM |

| 6 | 8PM-12AM |

For example, say I have the following row for a patient encounter:

| PatientID | DRGWeight | AdmitDTS | DchDTS |

| A | 1.81 | 1/18/24 10:17 AM | 1/19/24 6:15 PM |

| B | 1.78 | 2/7/24 11:37 AM | 2/12/24 1:49 PM |

What I need is something like the following:

| PatientID | DRGWeight | AdmitDTS | DchDTS | CensusDTS | Shift |

| A | 1.81 | 1/18/24 10:17 AM | 1/19/24 6:15 PM | 1/18/24 11:59 AM | 3 |

| A | 1.81 | 1/18/24 10:17 AM | 1/19/24 6:15 PM | 1/18/24 3:59 PM | 4 |

| A | 1.81 | 1/18/24 10:17 AM | 1/19/24 6:15 PM | 1/18/24 7:59 PM | 5 |

| A | 1.81 | 1/18/24 10:17 AM | 1/19/24 6:15 PM | 1/18/24 11:59 PM | 6 |

| A | 1.81 | 1/18/24 10:17 AM | 1/19/24 6:15 PM | 1/19/24 3:59 AM | 1 |

| A | 1.81 | 1/18/24 10:17 AM | 1/19/24 6:15 PM | 1/19/24 7:59 AM | 2 |

| A | 1.81 | 1/18/24 10:17 AM | 1/19/24 6:15 PM | 1/19/24 11:59 AM | 3 |

| A | 1.81 | 1/18/24 10:17 AM | 1/19/24 6:15 PM | 1/19/24 3:59 PM | 4 |

| A | 1.81 | 1/18/24 10:17 AM | 1/19/24 6:15 PM | 1/19/24 7:59 PM | 5 |

And of course the same for PatientID B, C, D, etc. Any ideas?

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sh

let

Source = {0..200},

#"Converted to Table" = Table.FromList(Source, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Added Custom" = Table.AddColumn(#"Converted to Table", "CensusDTS", each #datetime(2024,1,18,3,59,0) + #duration(0,[Column1]*4,0,0),type datetime),

#"Added Custom1" = Table.AddColumn(#"Added Custom", "Shift", each Number.Mod([Column1],6)+1,Int64.Type)

in

#"Added Custom1"

Pat

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("LcsxDoAgDEbhq5DOxPpXFOgGO4l7w/2voVSnl7zkM6NGkbAVrDAKSwrYFTm08a261qU4wz1oRqPuIpc3wtkB9PiBMMSXpupgPg==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [PatientID = _t, DRGWeight = _t, AdmitDTS = _t, DchDTS = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"PatientID", type text}, {"DRGWeight", type number}, {"AdmitDTS", type datetime}, {"DchDTS", type datetime}}),

#"Added Custom" = Table.AddColumn(#"Changed Type", "Custom", (k)=> Table.SelectRows(Sh, each k[AdmitDTS]<=[CensusDTS] and [CensusDTS]<=k[DchDTS])),

#"Expanded Custom" = Table.ExpandTableColumn(#"Added Custom", "Custom", {"CensusDTS", "Shift"}, {"CensusDTS", "Shift"})

in

#"Expanded Custom"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This worked for me so I'm going to mark it as a solution but I actually ended up taking a different route. Here's what I did:

1. Create a discharge shift time column

= Table.AddColumn(#"Reordered Columns", "DchShiftTime", each if [DischargeTime] <= #time(3, 59, 0) then #time(3, 59, 0) else if [DischargeTime] <= #time(7, 59, 0) then #time(7, 59, 0) else if [DischargeTime] <= #time(11, 59, 0) then #time(11, 59, 0) else if [DischargeTime] <= #time(15, 59, 0) then #time(15, 59, 0) else if [DischargeTime] <= #time(19, 59, 0) then #time(19, 59, 0) else if [DischargeTime] <= #time(23, 59, 0) then #time(23, 59, 0) else null)2. Merge that new column with discharge date to create a discharge datetime column. For each encounter this gives me a datetime for the last minute of the four-hour shift.

= Table.AddColumn(#"Added Conditional Column", "DchShiftDTS", each Text.Combine({Text.From([DischargeDT], "en-US"), Text.From([DchShiftTime], "en-US")}, " "), type datetime)3. Determine the number of minutes between the discharge shift datetime and the admit datetime and make that an integer

= Table.AddColumn(#"Changed Type3", "IPStayMinutes", each (Duration.TotalMinutes( [DchShiftDTS]-[InpatientAdmitDTS])))

= Table.TransformColumnTypes(#"Added Custom",{{"IPStayMinutes", Int64.Type}})4. Here is the nifty part! List all the datetimes for the admission datetime plus the number of minutes calculated in the previous step (+1 to get that last minute)

= Table.AddColumn(#"Changed Type1", "Minutes", each List.DateTimes( [InpatientAdmitDTS], [IPStayMinutes]+1, #duration(0,0,1,0)))5. Expand that list to new rows and change the resulting column to type datetime

= Table.ExpandListColumn(#"Added Custom3", "Minutes")

= Table.TransformColumnTypes(#"Expanded Minutes",{{"Minutes", type datetime}})6. Add a new time column based on the previous so I have just the time for each row

= Table.AddColumn(#"Changed Type2", "CensusTime", each DateTime.Time([Minutes]), type time)7. Finally, the payoff! Filter the "CensusTime" column to just the six end of shift times, leaving me with one row per census 'moment' for an encounter

= Table.SelectRows(#"Inserted Time3", each [CensusTime] = #time(3, 59, 0) or [CensusTime] = #time(7, 59, 0) or [CensusTime] = #time(11, 59, 0) or [CensusTime] = #time(15, 59, 0) or [CensusTime] = #time(19, 59, 0) or [CensusTime] = #time(23, 59, 0))

The big problem is that it took a long time to load the data. With six years of data I started with 196K rows and ended up with 5.15 million rows. Five million rows isn't that significant but in between, with the calculation and expansion of the minute-by-minute rows, there would have been hundreds of millions of rows to process. If I modify this or do something similar in the future I might calculate by hour rather than minute in increase efficiency at the cost of some accuracy and precision.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Shift = INT(TIMEVALUE(CensusDTS)*6)+1

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks. However, that answer assumes I already have the CensusDTS column. How do I get the CensusDTS from the Admit & Discharge DTS columns?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

FYI, I noticed this earlier but forgot to mention. In your DAX approach here you aren't returning the correct shift for the last row for patient A. Not sure if that means everything is off. If the discharge datetime is 1/19/2024 6:15 PM, the Census DTS should be 1/19/2024 7:59 PM, shift 5.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Awesome! That looks promising. Do you know of a way to do this in the Query Editor?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sh

let

Source = {0..200},

#"Converted to Table" = Table.FromList(Source, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Added Custom" = Table.AddColumn(#"Converted to Table", "CensusDTS", each #datetime(2024,1,18,3,59,0) + #duration(0,[Column1]*4,0,0),type datetime),

#"Added Custom1" = Table.AddColumn(#"Added Custom", "Shift", each Number.Mod([Column1],6)+1,Int64.Type)

in

#"Added Custom1"

Pat

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("LcsxDoAgDEbhq5DOxPpXFOgGO4l7w/2voVSnl7zkM6NGkbAVrDAKSwrYFTm08a261qU4wz1oRqPuIpc3wtkB9PiBMMSXpupgPg==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [PatientID = _t, DRGWeight = _t, AdmitDTS = _t, DchDTS = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"PatientID", type text}, {"DRGWeight", type number}, {"AdmitDTS", type datetime}, {"DchDTS", type datetime}}),

#"Added Custom" = Table.AddColumn(#"Changed Type", "Custom", (k)=> Table.SelectRows(Sh, each k[AdmitDTS]<=[CensusDTS] and [CensusDTS]<=k[DchDTS])),

#"Expanded Custom" = Table.ExpandTableColumn(#"Added Custom", "Custom", {"CensusDTS", "Shift"}, {"CensusDTS", "Shift"})

in

#"Expanded Custom"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This worked for me so I'm going to mark it as a solution but I actually ended up taking a different route. Here's what I did:

1. Create a discharge shift time column

= Table.AddColumn(#"Reordered Columns", "DchShiftTime", each if [DischargeTime] <= #time(3, 59, 0) then #time(3, 59, 0) else if [DischargeTime] <= #time(7, 59, 0) then #time(7, 59, 0) else if [DischargeTime] <= #time(11, 59, 0) then #time(11, 59, 0) else if [DischargeTime] <= #time(15, 59, 0) then #time(15, 59, 0) else if [DischargeTime] <= #time(19, 59, 0) then #time(19, 59, 0) else if [DischargeTime] <= #time(23, 59, 0) then #time(23, 59, 0) else null)2. Merge that new column with discharge date to create a discharge datetime column. For each encounter this gives me a datetime for the last minute of the four-hour shift.

= Table.AddColumn(#"Added Conditional Column", "DchShiftDTS", each Text.Combine({Text.From([DischargeDT], "en-US"), Text.From([DchShiftTime], "en-US")}, " "), type datetime)3. Determine the number of minutes between the discharge shift datetime and the admit datetime and make that an integer

= Table.AddColumn(#"Changed Type3", "IPStayMinutes", each (Duration.TotalMinutes( [DchShiftDTS]-[InpatientAdmitDTS])))

= Table.TransformColumnTypes(#"Added Custom",{{"IPStayMinutes", Int64.Type}})4. Here is the nifty part! List all the datetimes for the admission datetime plus the number of minutes calculated in the previous step (+1 to get that last minute)

= Table.AddColumn(#"Changed Type1", "Minutes", each List.DateTimes( [InpatientAdmitDTS], [IPStayMinutes]+1, #duration(0,0,1,0)))5. Expand that list to new rows and change the resulting column to type datetime

= Table.ExpandListColumn(#"Added Custom3", "Minutes")

= Table.TransformColumnTypes(#"Expanded Minutes",{{"Minutes", type datetime}})6. Add a new time column based on the previous so I have just the time for each row

= Table.AddColumn(#"Changed Type2", "CensusTime", each DateTime.Time([Minutes]), type time)7. Finally, the payoff! Filter the "CensusTime" column to just the six end of shift times, leaving me with one row per census 'moment' for an encounter

= Table.SelectRows(#"Inserted Time3", each [CensusTime] = #time(3, 59, 0) or [CensusTime] = #time(7, 59, 0) or [CensusTime] = #time(11, 59, 0) or [CensusTime] = #time(15, 59, 0) or [CensusTime] = #time(19, 59, 0) or [CensusTime] = #time(23, 59, 0))

The big problem is that it took a long time to load the data. With six years of data I started with 196K rows and ended up with 5.15 million rows. Five million rows isn't that significant but in between, with the calculation and expansion of the minute-by-minute rows, there would have been hundreds of millions of rows to process. If I modify this or do something similar in the future I might calculate by hour rather than minute in increase efficiency at the cost of some accuracy and precision.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Read about Table.Buffer and its siblings. Or do this all in DAX.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks so much for all your help!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

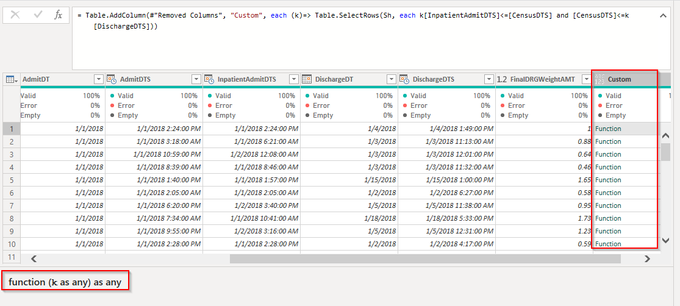

This is helpful, thanks much. I've come up with an alternative solution but yours is much more elegant. However, I'm not entirely sure what is going on in that M code and would like to understand so that I can incoporate it into my query.

- What does " (k)=> " do exactly? I understand the Table.SelectRows portion.

- In the Sh table, why 0 to 200, i.e. 200 rows? Is that based on the date range in my sample data? My actual data go back to 2018-01-01. Does that Sh table need to have many more rows then? If so, should I hard code it as you have or add some dynamic table that counts based on actual dates in the patient encounters table?

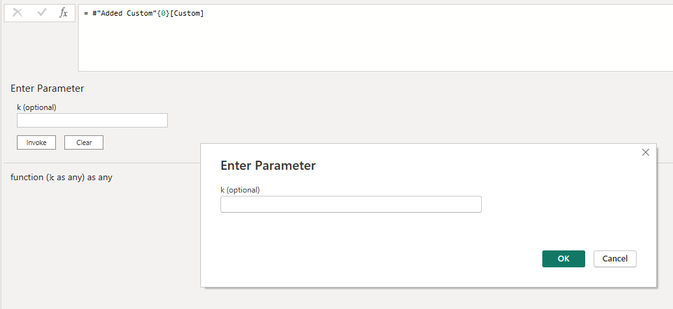

When I try to incorporate this into my query that custom column on the patient encounters table returns as a function

and that function asks for a parameter

If you have the time could you explain what this is doing and how I might fix it?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. "(k)" - that's a long story. When I started to learn about context in Power Query I saw an example that used k, and then I used it too, and have used it ever since. It means "current context" and you can use whatever string you like.

2. yeah, whatever range you need.

Note that your formula contains an extra "each" - you can either have the "each" (but nesting them gets nasty very quickly) or the explicit reference via (k)=> , but not both. Remove the first "each" and you get a table instead of a function.

If you want to learn Power Query, read Ben Gribaudo's primer.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ach! Stupid cut and paste error!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Done for the day but I will try that out tomorrow. Thanks so much!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could you share a straightforward solution? All I could find is a way to expand date ranges to create rows for each day between the admit & discharge dates. I haven't seen anything on how to break out ranges by hour or grouped hours.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That seems to be straightforward . What have you tried and where are you stuck?

Helpful resources

| User | Count |

|---|---|

| 79 | |

| 76 | |

| 68 | |

| 66 | |

| 53 |

| User | Count |

|---|---|

| 104 | |

| 99 | |

| 92 | |

| 78 | |

| 69 |