FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Context Filter is not working as expected

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Context Filter is not working as expected

I have a dataset called Workshop:

Id | Account | Associate Id | Start Date | End Date | Workshop | Gender | State |

1 | Account 1 | 12345 | 01-Jan-17 | 04-Jan-17 | Workshop 1 | Female | State 1 |

2 | Account 1 | 12346 | 02-Jan-18 | 05-Jan-18 | Workshop 1 | Male | State 1 |

3 | Account 1 | 12347 | 03-Jun-19 | 06-Jun-19 | Workshop 1 | Unknown | State 1 |

4 | Account 1 | 12348 | 02-Jan-18 | 05-Jan-18 | Workshop 1 | Male | State 2 |

5 | Account 1 | 12349 | 03-Jun-19 | 06-Jun-19 | Workshop 1 | Unknown | State 2 |

6 | Account 1 | 12350 | 04-Jan-19 | 07-Jan-19 | Workshop 1 | Female | State 2 |

7 | Account 1 | 12351 | 05-Jan-18 | 08-Jan-18 | Workshop 2 | Male | State 2 |

8 | Account 1 | 12352 | 06-Feb-19 | 09-Feb-19 | Workshop 2 | Female | State 2 |

9 | Account 1 | 12353 | 07-Jan-18 | 10-Jan-18 | Workshop 2 | Male | State 1 |

10 | Account 1 | 12354 | 08-Jan-18 | 11-Jan-18 | Workshop 2 | Unknown | State 2 |

11 | Account 1 | 12355 | 09-Jan-18 | 12-Jan-18 | Workshop 2 | Female | State 1 |

12 | Account 1 | 12356 | 10-Jan-19 | 13-Jan-19 | Workshop 2 | Unknown | State 1 |

13 | Account 2 | 12357 | 11-Jan-18 | 14-Jan-18 | Workshop 2 | Female | State 2 |

14 | Account 2 | 12358 | 12-Jan-18 | 15-Jan-18 | Workshop 2 | Male | State 2 |

15 | Account 2 | 12359 | 13-May-19 | 16-May-19 | Workshop 2 | Unknown | State 2 |

16 | Account 2 | 12360 | 19-Jan-19 | 22-Jan-19 | Workshop 2 | Female | State 1 |

17 | Account 2 | 12361 | 20-Jan-18 | 23-Jan-18 | Workshop 2 | Male | State 1 |

18 | Account 2 | 12362 | 14-Jan-18 | 17-Jan-18 | Workshop 2 | Unknown | State 1 |

19 | Account 2 | 12363 | 15-Jan-19 | 18-Jan-19 | Workshop 1 | Female | State 1 |

20 | Account 2 | 12364 | 16-Jan-18 | 19-Jan-18 | Workshop 1 | Male | State 1 |

21 | Account 2 | 12365 | 17-Jan-18 | 20-Jan-18 | Workshop 1 | Unknown | State 1 |

22 | Account 2 | 12366 | 18-Jan-18 | 21-Jan-18 | Workshop 1 | Male | State 2 |

23 | Account 2 | 12367 | 18-Jan-18 | 21-Jan-18 | Workshop 1 | Unknown | State 2 |

24 | Account 2 | 12368 | 18-Jan-18 | 21-Jan-18 | Workshop 1 | Female | State 2 |

Created Two Measure:

- Associate Count Across Account should not be filterable by Account and State

Associate Count Across Account = CALCULATE ( DISTINCTCOUNT ( Workshop[Id] ), ALL ( Workshop[Account], Workshop[State] ) )

- Associate Count in my Account

Associate Count in my Account = CALCULATE ( DISTINCTCOUNT ( Workshop[Id] ) )

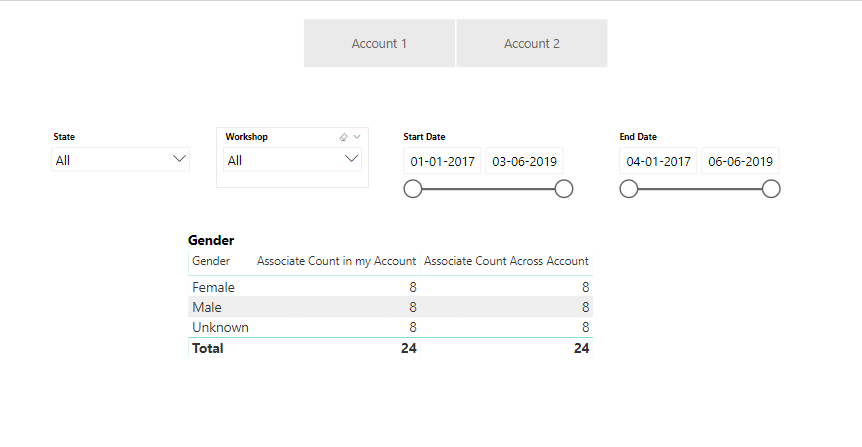

Visualization 1. No Filter applied.

1. No Filter applied.

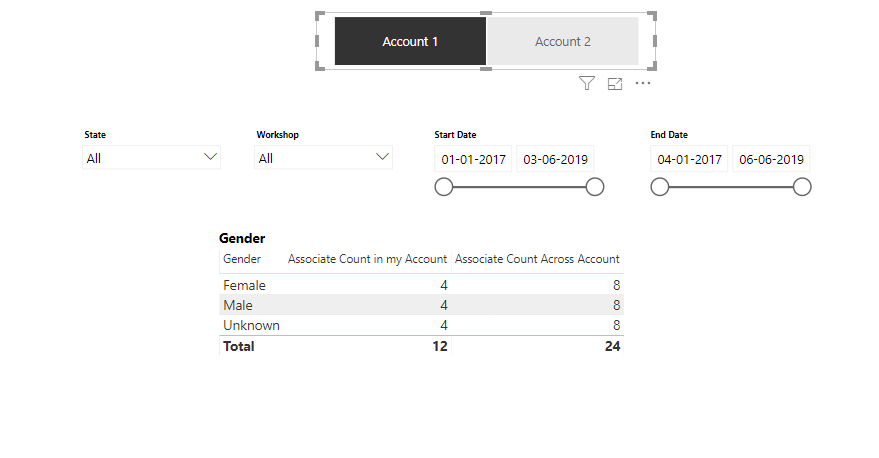

2. Selected Account 1 (Everything works fine)

2. Selected Account 1 (Everything works fine)

3. Filter State 1 from State (Everything works fine)

3. Filter State 1 from State (Everything works fine)

4. Now clear the from State Slicer and then filter date range 22-01-2017 to 03-06-2019 from Start Date Slicer (Associate Count across Account Measure is not working as expected)

4. Now clear the from State Slicer and then filter date range 22-01-2017 to 03-06-2019 from Start Date Slicer (Associate Count across Account Measure is not working as expected)

ISSUE

When I apply some filter from Date Data Type Slicer as the range that time Account and State filter is not working as expected.

There is an article written by Alberto Ferrari https://www.sqlbi.com/articles/understanding-dax-auto-exist/

However, could not able to fix my issue. Can you please help me with this? Please let me know in case you need some more information.

Thank You!

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You need to transform your single-table model in a proper star schema.

Add a dimension for sets of related attributes you want to slice and filter for, and leave the fact table with numbers to aggregate.

Autoexist is applied to columns in the same table, not to columns of different tables. This is why a star schema would work just fine.

At the end, data modeling is quite easy: Star schema all the things.

Have fun with DAX!

Alberto Ferrari

http://www.sqlbi.com

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You need to transform your single-table model in a proper star schema.

Add a dimension for sets of related attributes you want to slice and filter for, and leave the fact table with numbers to aggregate.

Autoexist is applied to columns in the same table, not to columns of different tables. This is why a star schema would work just fine.

At the end, data modeling is quite easy: Star schema all the things.

Have fun with DAX!

Alberto Ferrari

http://www.sqlbi.com

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

HI Alberto,

I know, Star Schema is the "recommended" way, but it is really not fit for many modern ways of working with data as explained in Why star schema is set up for failure and in Should auto exist and sort by column implementation be changed?

Is there another way to work around auto-exist in a specific measure, e.g. by clearing all filters and then reapplying only the slicers that I want to apply?

Cheers, aabtra

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You are touching two separate topics:

- Star schema is old

- Autoexist should be changed

While I agree with the latter, and I hope Microsoft is actively working on a solution, I totally disagree with the former. Currently, there are no viable workarounds in DAX for Autoexist, this is why I guess Microsoft will work on changing the behavior.

On the other topic, I read multiple sources claiming that the "modern" way of managing data should not rely on the "old" star schema methodology. All the sources I read so far fail in providing a new paradigm that is ir could be better than a star schema.

Star schema versus the rest of the models mostly turns into a religious war. I am not into it. If there were a better paradigm, I would happily study that. However, so far I only read criticism without good alternatives. Therefore, I stick with the star schema.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Alberto,

thanks for the quick reply. When it comes to the star schema I agree with you that it is convoluted and there are probably multiple ways to address the symptom: For me, the symptom is that I have source data with around 300 rows edit: colums by which my "users" potentially want to filter in their own dashboards and I am for sure not going to create a dimension table for each of these.

Now you may say how is this a represenatative scenario? And I would say, that is actually very common when teams are continously working with new data, sources change, new questions get asked etc. and you don't have the time and ressourced to always click through new relationships in PBI.

Could this be solved with a more programmatic / generative approach to data modeling in PBI? Absolutely yes! But it could also be solved with allowing, even enouraging the users to break with the star schema in scenarios, where performance and memory requirements are secondary to workflow and speed considerations. And what the above source implies is, that with todays database engines and functional programming languages, many of the memory and computation drawbacks could potentially be optimized to make this workflow perfectly fine for a number of everyday tasks.

So maybe let me be more nuanced: of course noone should get rid of the star schema per se. We should just be encouraged to build fatter tables for purposes of work-flow management and development speed and the engines should be optimized for this reality as well.

Cheers, aabtra

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlbertoFerrari . First of all, thanks for sharing you take here & writing in detail about Auto-exist in this article. It seems that not many developers are aware about this behaviour but sooner or later every PBI developer is bound to run into it. I read your article in detail and have a question:

In it, you have used example measures to explain the behaviour. One of the measures uses ALL() modifier. So my question is this - Should one expect the Auto-exist behaviour with other context modifiers as well ? Such as ALLSELECTED(), ALLEXCEPT() and so on. Looking forward to hear from you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes. Any modifier that removes a filter is going to be affected by this. Modifiers that add filters, on the other hand, might fight with arbitrarily shaped set. In both scenarios, some borderline cases are a real puzzle to solve.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I see. Definitely something to keep in mind while adding further tables to my Data Model. One more question though (last one, I promise 😅). In the example that you used in the article here, since there are only 9 rows in the underlying dataset, it becomes quite easy to look at the rows one is missing out due to Auto-exist.

However, in the report I'm working on, the Fact table has > 100,000 records (fetched in DQ mode). I am baffled about how to find out exactly which rows/datapoints are not being fetched due to Auto-exist. In your opinion, what would be the best way to figure this out ? Thanks in advance.

P.S.: I'm already using the approach using COUNTROWS() to find the difference in numbers of rows. It's just that, I also wish to find out which records from the Fact table are being left out.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hard to give you any advice here... the result of the query is aggregated, you do not see the individual rows anymore. I think it could be solved for a very specific query, but I do not see a simple (or even complex) solution to the more generic problem. The problem is that the hidden rows depend on the formulas used in the measure, which filter are removed and which are kept.

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!

| User | Count |

|---|---|

| 38 | |

| 36 | |

| 33 | |

| 31 | |

| 28 |

| User | Count |

|---|---|

| 129 | |

| 88 | |

| 79 | |

| 68 | |

| 63 |