
Dataflow (gen2) error: Couldn't refresh the entity because of an issue with the mashup document

I am receiving the following error when my dataflow (gen 2) attempts to sync data from Salesforce to Lakehouse.

Error Code: 999999, Error Details: Couldn't refresh the entity because of an issue with the mashup document MashupException.Error: DataSource.Error: Failed to insert a table., InnerException: Unable to create a table on the Lakehouse SQL catalog due to metadata refresh failure

I have deleted the original tables in the Lakehouse, but I am receiving the same error messages. I created a new dataflow (gen 2) with different data and received the same error message. I have created a new lakehouse altogether and tested the original dataflow (noted above) and new dataflow (gen 2) without success. I keep getting this metadata error.

Your assistance would be appreciated.


I had to rebuild the Lakehouse completely to resolve this issue. I used the same dataflow (gen 2) to achieve this outcome.


I am in the same situation as @acitsme: I am trying to ingest data from an on-premises Oracle database via a gateway and have not gotten anywhere so far. All my attempts at refreshing dataflows return errors like this:

Error Code: 999999, Error Details: Couldn't refresh the entity because of an issue with the mashup document MashupException.Error: Container exited unexpectedly with code 0xC000001D. PID: 5384. Details: GatewayObjectId: c90672b7-2a7d-4bee-a4ae-bbff19f736ee (Request ID: ebb0b366-0baa-4235-8956-57b11e9511d8).
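When comparing errors across posts or filling in a support ticket, it can help to pull the identifiers out of the message. The following is a minimal sketch, assuming the message keeps the shape quoted in this thread; the function name `parse_refresh_error` and the field names are made up for illustration and are not part of any Fabric tooling.

```python
import re

# GUID shape as it appears in the quoted messages (8-4-4-4-12 hex digits).
GUID = r"[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}"

# One pattern per identifier; field names are illustrative only.
PATTERNS = {
    "error_code": re.compile(r"Error Code:\s*(\d+)"),
    "exit_code": re.compile(r"exited unexpectedly with code\s*(0x[0-9A-Fa-f]+)"),
    "gateway_object_id": re.compile(r"GatewayObjectId:\s*(" + GUID + r")"),
    "request_id": re.compile(r"Request ID:\s*(" + GUID + r")"),
}


def parse_refresh_error(message: str) -> dict:
    """Return whichever identifiers are present in the error message."""
    found = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(message)
        if match:
            found[name] = match.group(1)
    return found
```

Fields that do not appear in a given message are simply left out of the result, so the same function can be applied to the Lakehouse metadata error at the top of the thread, which carries only an error code.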


The issue is still there. I've tried deleting everything in the workspace and launching the dataflow from a pipeline, with no success. Someone here suggested not publishing the dataflow, waiting 5 minutes, and using it in a new pipeline.

I've tried that, but if the dataflow is not published you cannot pick it in the pipeline.

One piece of information was missing: I'm testing Fabric in a trial environment. MICROSOFT PLEASE HELP


Hi!

While the error message might be the same, the cause of the error might be different from the one in this topic.

I'd highly recommend reaching out to our support team by raising a support ticket so an engineer can take a closer look at your situation:


Is there any general update on this issue? I am also experiencing it now, after our gateway was updated to the newest version. (It does not make sense to me that I have to downgrade the gateway again for the errors to go away.)

Any ETA on a bugfix for this?


I don't know. Let's hope Microsoft takes action on this issue and clearly explains what the next version (February?) fixes.


Hi Miguel,

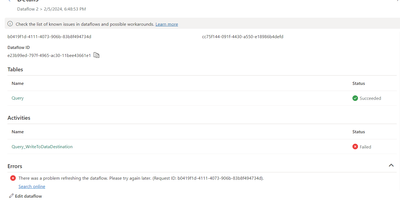

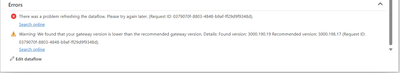

I've downgraded the gateway to the November version, 3000.198.17. The query portion now works, but the dataflow fails on the write side, as the screenshots show. Any suggestions?


I am also in a trial environment, but I just found this one: https://community.fabric.microsoft.com/t5/Dataflows/MashupException-Error-The-value-is-not-updatable... and I had my tech guy downgrade the gateway to the September version.

And my dataflows are running successfully now, ingesting data from on-premises Oracle to a lakehouse (with "staging enabled"). Need to test a lot more to build confidence in this platform.

br

johsm


Joshm,

Save your time: the September gateway is too old.


In the end (after one week...) the solution for me was pretty easy:

1. Downgrade the enterprise gateway to the November version, 3000.198.17, to avoid mashup errors like this one: +++Query: Error Code: 999999, Error Details: Couldn't refresh the entity because of an issue with the mashup document MashupException.Error: Container exited unexpectedly with code 0x000000FF. PID: 2084. Details: GatewayObjectId: aff7bb2a-da8b-43d6-8746-97d3fff96e79 (Request ID: 68e6beea-3654-4f06-95f6-ff4e53724e8f).+++

2. Open the following port on the firewall to avoid this error: +++There was a problem refreshing the dataflow. Please try again later. (Request ID: a164b6c5-bf5b-4bff-93f8-3516d388d381).+++

- Protocol: TCP

- Endpoint: *.datawarehouse.pbidedicated.windows.net

- Port: 1433
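To check whether the firewall rule above is actually in effect, one option is to attempt a plain TCP connection from the gateway machine. The following is a minimal sketch using only the Python standard library; the function name `can_reach` is made up for illustration, and the concrete host name is an assumption you have to supply yourself, since the post only gives the wildcard `*.datawarehouse.pbidedicated.windows.net`.

```python
import socket


def can_reach(host: str, port: int = 1433, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    This only tests that the TCP handshake succeeds; it does not
    authenticate or speak any database protocol.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS lookup failures.
        return False
```

For example, `can_reach("<your-endpoint>.datawarehouse.pbidedicated.windows.net")` should return `True` once outbound TCP 1433 is open, where `<your-endpoint>` is a placeholder for the host your own environment uses. A `False` result does not tell you why the connection failed, so it is a first check rather than a diagnosis.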


Thanks for the info, but for me it now works (i.e. with the September one).


I had to recreate the Lakehouse and Warehouse to overcome the challenge.


I have created a new one and the same happens there.


Hi @andyemiller

I am following up on the last response and checking back to see if you have a resolution yet. If you do, please share it with the community, as it can be helpful to others.

If the issue still persists, please upgrade the gateway to the latest version; this might help in resolving the issue.

Please refer to the Link for more details on the latest gateway version.

I hope this helps. Please do let us know if you have any further questions.


Hi @andyemiller

We haven't heard from you since the last response and were just checking back to see if you have a resolution yet. If you do, please share it with the community, as it can be helpful to others.

Otherwise, please respond with more details and we will try to help.

Thanks




In my case, I had to rebuild the Lakehouse to ingest data from a dataflow. Since then, I have experienced mashup issues when the field types are not consistent between the source and destination data. Usually, a change has been made to the source data (Salesforce in my case) that has not been replicated to the Lakehouse. I usually fix the Lakehouse field by rebuilding the table structure with an SQL statement, and then use the dataflow to rebuild the connection between the source and destination. The work takes a "hot minute," but has not been a hurdle since this earlier issue.
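The kind of drift described above (a field type changed at the source but not in the Lakehouse table) can be spotted by comparing the two schemas before a refresh. The following is a minimal sketch, assuming you already have each schema as a mapping of column name to type name; how you obtain those mappings is not covered here, and the function name `find_type_mismatches` and the column names in the example are hypothetical.

```python
from typing import Dict, Optional, Tuple


def find_type_mismatches(
    source: Dict[str, str],
    destination: Dict[str, str],
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Map each differing column to (source_type, destination_type).

    A column missing on one side is reported with None for that side.
    """
    report = {}
    for column in sorted(set(source) | set(destination)):
        src_type = source.get(column)
        dst_type = destination.get(column)
        if src_type != dst_type:
            report[column] = (src_type, dst_type)
    return report


# Hypothetical schemas, for illustration only.
source_schema = {"Id": "text", "Amount": "decimal", "CloseDate": "date"}
lakehouse_schema = {"Id": "text", "Amount": "text"}

mismatches = find_type_mismatches(source_schema, lakehouse_schema)
# mismatches == {"Amount": ("decimal", "text"), "CloseDate": ("date", None)}
```

Any column that shows up in the report is a candidate for the table rebuild described in the post. The comparison is a plain string match, so type names from the two systems need to be normalized to a common vocabulary first.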


I have the same error. I need a solution.


Hi @andyemiller

Thanks for using Fabric Community.

Sorry for the inconvenience that you are facing.

There is currently an ongoing bug with a similar issue: "Couldn't refresh the entity because of an issue with the mashup document".

I will reach out to the internal team about the issue and update you once I hear from them.

Meanwhile, you can refer to the links below, where the original posters resolved similar issues with some workarounds.

Appreciate your patience.


I am getting the same error and neither of the workarounds worked for me.


I am also getting the same error. Any ideas yet? It's been a few months. @andyemiller, when you say you had to "rebuild the Lakehouse completely", did you do anything different when creating the new Lakehouse compared with the first one? Or is your hypothesis that MS has perhaps added some new capability to the Lakehouse creation process behind the scenes, one that your old Lakehouse did not have? I'm just grasping at straws here to try to figure out what the issue could be. Thanks for your help!