FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!Special holiday offer! You and a friend can attend FabCon with a BOGO code. Supplies are limited. Register now.

- Data Factory forums

- Forums

- Get Help with Data Factory

- Dataflow

- Error loading data from on prem SQL server to Fabr...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Error loading data from on prem SQL server to Fabric Lakehouse with Dataflows Gen2

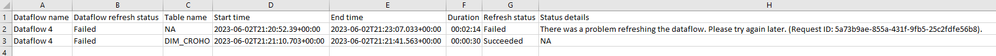

@miguel I am trying to load a table with Dataflows Gen 2 from an on premise SQL server to a Lakehouse in Fabric with load to destination. No luck so far. I keep getting this error: There was a problem refreshing the dataflow. Please try again later. My Gateway is updated to the newest version 3000.174.12. The error shows up when trying to refresh the dataflow. I am trying this in a Fabric Trial Capacity.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The solution was in our case that is was not enough to open up port 1433 en whitelist the right addresses *.datawarehouse.pbidedicated.windows.net and the more usecase specific *.northeurope.cloudapp.azure.com in the Fortinet firewall but to open up SQL access in the firewall from our VM (where the gateway is) to the Fortinet maintained internet service 'Azure'.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The solution was in our case that is was not enough to open up port 1433 en whitelist the right addresses *.datawarehouse.pbidedicated.windows.net and the more usecase specific *.northeurope.cloudapp.azure.com in the Fortinet firewall but to open up SQL access in the firewall from our VM (where the gateway is) to the Fortinet maintained internet service 'Azure'.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

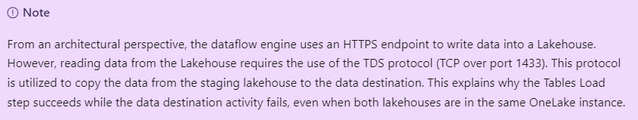

I found this article:

https://learn.microsoft.com/en-us/fabric/data-factory/gateway-considerations-output-destinations

And this fabric blog article:

https://blog.fabric.microsoft.com/it-it/blog/data-factory-spotlight-dataflows-gen2?ft=All:

So i will attend for next release to disable staging area so I don’t need to use 1433 port.

I think this will work for me.

Bye

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'll add my vote to this - there's no way we're going to be able to convince our corporate network team to open firewalls every time any Fabric user creates a new lakehouse or warehouse - that just isn't remotely possible for any large client.

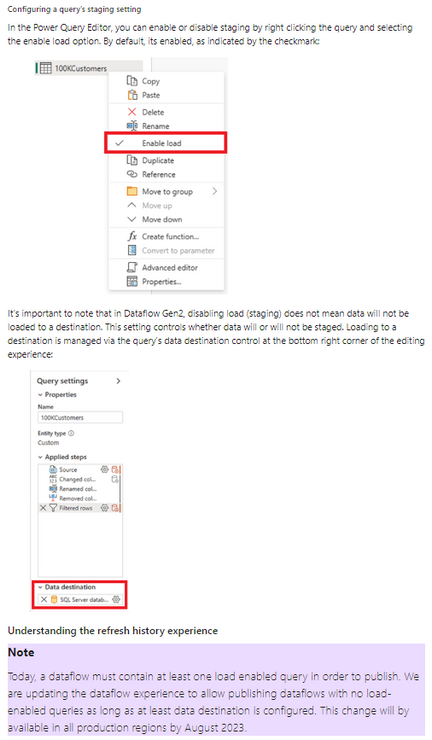

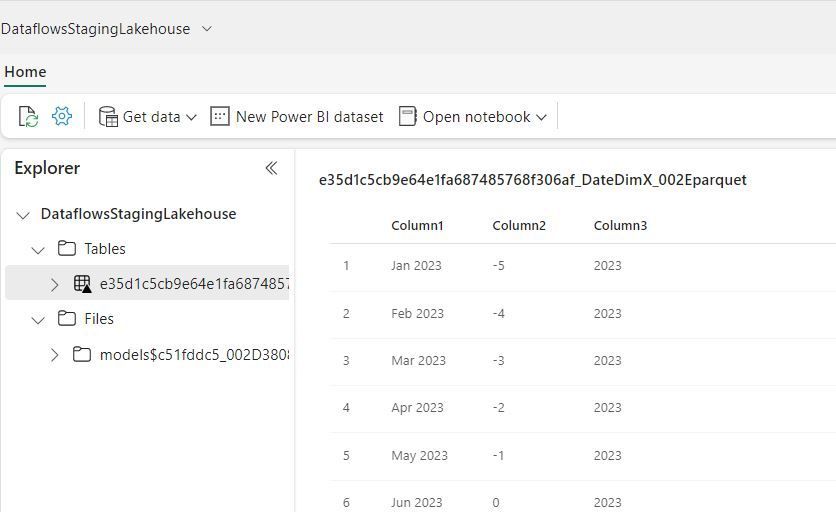

Here's the thing that's super confusing for me. First off, to verify, here's a screenshot of the error I'm getting - exactly the same thing as original post:

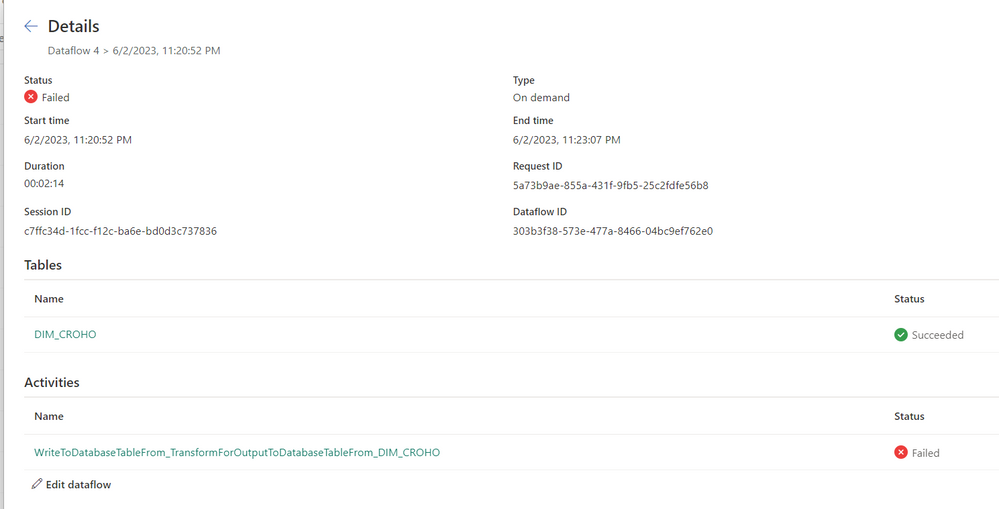

But here's what doesn't make sense - if I look in the workspace where this dataflow lives, we know a "DataflowsStagingLakehouse" is getting created, that I read should in the future be "hidden". If I look in that lakehouse, I can see that the data HAS been successfully pulled from our on-prem SQL server and landed:

So here's my question - I completely don't understand why we're trying to load the data from on-prem to a staging lakehouse, then ship it back down to the on prem gateway, then send it up again to the final lakehouse or warehouse I want to send it to. That's 3 full trips for the data.

Why doesn't / can't this just go directly to the final lakehouse and skip this staging lakehouse? And even if it can't for some reason skip the staging lakehouse, why isn't it moved directly from staging lakehouse to final destination without having to send it back down and up again through an on-prem gateway?

Thanks,

Scott

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

And just to follow up, I installed SSMS onto one of our gateway servers - and it isn't able to hit the endpoint SQL connection. I can do so find from my work laptop, but not from the gateway servers.

Still 100% confused about why this is needed though.

Thanks,

Scott

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

These are my clues.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

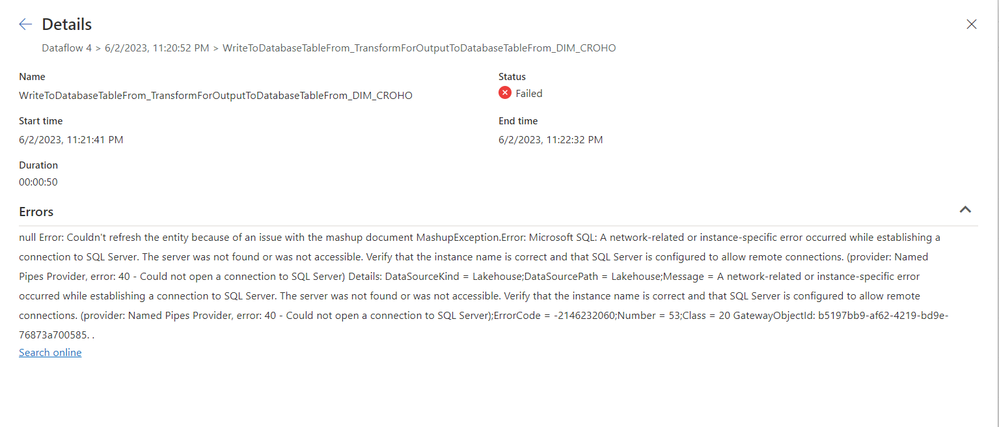

could you please click on the first activity that you see? (at the bottom where it starts with "WriteToDatabaseTableFrom" and post a screenshot of the dialog that shows up? it should show a different error message

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey!

If you go into the refresh history and navigate inside of it to the table / query that shows the failure, what error message does it show? could you please share a screenshot of it?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

could it be that your SQL Instance needs to add some of the IPs used by Power Query and Fabric to the allowlist? This error points to a network related issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ah ok. I'll look into it tomorrow.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The error message you are seeing isn't about your on-prem SQL Server. Notice that the DataSourceKind is Lakehouse - this means that it's either dataflow staging (which uses Lakehouse) or your destination Lakehouse (probably the destination Lakehouse, since the error is coming up on the Activity writing to the destination Lakehouse).

The SQL Endpoint is used to write to the Lakehouse, so you need make sure that your local network allows connectivity to it. You can get the endpoint info for the Lakehouse from the "More options" menu on the Lakehouse and use the option for the connection string. You can see if it is open by testing from your local machine using SSMS or Azure Data Studio.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

With Azure Synapse integration runtime don't have firewall problem...

Can Microsoft Fabric, from on premise sql to lakehouse, also achieve the same result through corporate proxies?

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thanks for your guidance @jwelch I tried the SQL connection string of the destination lakehouse in SSMS and indeed it gave the same sort error:

------------------------------

ADDITIONAL INFORMATION:

A network-related or instance-specific error occurred while establishing a connection to SQL Server. The server was not found or was not accessible. Verify that the instance name is correct and that SQL Server is configured to allow remote connections. (provider: Named Pipes Provider, error: 40 - Could not open a connection to SQL Server) (Microsoft SQL Server, Error: 53)

For help, click: https://docs.microsoft.com/sql/relational-databases/errors-events/mssqlserver-53-database-engine-err...

------------------------------

The network path was not found

----------------------------------------------------------

So now I have to ask the VM / network IT team to allow for connection to this lakehouse and from here on every time we want to use or test a new lakehouse repeat that proces or is there a way to use a range of IP's or something like @miguel suggested?

Our gateway is on a VM, so if that VM should be able to connect to the SQL Endpoint, right?

I looked a the list of IP's for Azure, but there are a lot of adresses 🙂 I would love some help on which to use here.

Download Azure IP Ranges and Service Tags – Public Cloud from Official Microsoft Download Center

Our capacities are in North Europe.

Maybe PowerQueryOnline, DataFactory, SQL, AzureDataLake?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Our gateway is on a VM, so if that VM should be able to connect to the SQL Endpoint, right?

Not necessarily. What needs to be done is that your team will need to add the endpoint of the Lakehouse (either the DNS / servername to it or the direct ip that resolves from that servername) to your allow list for firewall reasons in order for the Gateway to be able to communicate directly to the Lakehouse.

What's currently being blocked is that when your gateway is trying to connect to the Lakehouse to load the data, it is not able to do so given the firewall rules set at the VM where your gateway is located.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm having the same error. What is the reasoning behind this arquitecture? if gateway is set to enable the data in the cloud, and the workflow/pipeline is running in the cloud, why this error when dataflow/pipeline runs?

I understand the SQL endpoint must be secured from external connections, but I didn't expect this issue if I'm already bringing data to cloud through the gateway. It also doesn't make sense we don't get any error when building the dataflow.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous wrote:if gateway is set to enable the data in the cloud, and the workflow/pipeline is running in the cloud, why this error when dataflow/pipeline runs?

The gateway still needs to send the data from your network to the cloud. For performance reasons, it's better if the Gateway can send the data directly to the destination in the cloud, rather than introducing multiple hops and having to "land" the data in temporary locations.

It also doesn't make sense we don't get any error when building the dataflow.

You don't see an error because when you are building the dataflow, the requests to the destination are not routed through the Gateway - that only happens during the Refresh operation. That is also a performance operation. However, we are looking into ways to validate the connectivity so that authors can be aware of this earlier.

-John

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In Azure Synapse each source and each target can decide which integration runtime to use.

Therefore, using the gateway for source and destination, a direct connection is used, while using the gateway for the on premise source and without the gateway for the cloud source, network problems would be avoided.

The solution of opening endpoints on the firewall for each lakehouse is impractical.

I don't understand what the problem is in using the Azure Synapse approach since the capacity is owned by the user.

Thank you

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thanks @jwelch

Is it possible to attach endpoints in Fabric to virtual network in Azure ?

Can you share documentation on how to do it or how to enable connectivity from gateway to endpoints?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So for every Lakehouse we will be using (in the end this will be of course limited, but still) this wil have to be repeated?