

Speed of writing to Fabric Data Warehouse from Fabric Pipeline vs ADF Pipeline

I need to move a large amount of data from CSVs stored in Azure Blob Storage to a Microsoft Fabric Data Warehouse.

Initially I was planning to use ADF to do this because I may need to use the Tumbling Window Triggers (which to my knowledge are not yet available in Fabric).

Doing so has proven to be quite slow. These files are stored with varying paths within the container, so I have to use wildcard paths to access them. When I use wildcard paths in ADF, I am required to enable staging on the Copy Data activity. If I don't, pipeline validation fails.
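For readers reproducing this setup, the relevant Copy activity settings (wildcard source plus staging) can be sketched as a Python dict in the shape of ADF pipeline JSON. This is a sketch under assumptions, not the poster's actual pipeline: the folder pattern `landing/*/2024`, the linked service name `StagingBlobLS`, the staging path `staging/copy`, and the sink type name `WarehouseSink` are hypothetical or assumed, so compare them against your own pipeline's JSON view.

```python
import json


def build_copy_type_properties(
    wildcard_folder: str,
    wildcard_file: str,
    staging_linked_service: str,
    staging_path: str,
) -> dict:
    """Return a Copy activity 'typeProperties' dict (ADF JSON shape) that reads
    CSVs through wildcard paths and stages them before loading the Warehouse."""
    return {
        "source": {
            "type": "DelimitedTextSource",
            "storeSettings": {
                "type": "AzureBlobStorageReadSettings",
                "recursive": True,
                # Wildcards let one activity pick up files under varying folders.
                "wildcardFolderPath": wildcard_folder,
                "wildcardFileName": wildcard_file,
            },
        },
        "sink": {
            # Assumed sink type name for the Fabric Warehouse connector.
            "type": "WarehouseSink",
            "allowCopyCommand": True,
        },
        # The poster reports ADF validation requires staging with wildcard paths;
        # in ADF the staging area must be a storage linked service you provide.
        "enableStaging": True,
        "stagingSettings": {
            "linkedServiceName": {
                "referenceName": staging_linked_service,
                "type": "LinkedServiceReference",
            },
            "path": staging_path,
        },
    }


# Hypothetical folder pattern, linked service name, and staging path.
props = build_copy_type_properties("landing/*/2024", "*.csv", "StagingBlobLS", "staging/copy")
print(json.dumps(props, indent=2))
```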

With staging enabled, it takes a significant amount of time to load the data. For example, loading just 2.6 GB took roughly 2.5 hours.

If I were to recreate and run the same pipeline from Microsoft Fabric, would it be faster? Is there a better way?



Thanks for the details @smoqt

The internal team is looking into the issue. Meanwhile, you can try the following, as advised by the team:

If the source has many small files, the COPY command that loads them into the Warehouse will be significantly slower. You can use a separate copy job with 'Copy Behavior' = 'merge files' to merge the small files into a single large file first; loading that one file should then perform better.
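To make the merge idea concrete, here is a local Python illustration of combining many small CSV files into one, keeping a single header row. It is not how ADF implements the 'merge files' copy behavior (in ADF JSON that option is, to our understanding, the sink store setting `copyBehavior` set to `MergeFiles`); the function name `merge_csv_files` and the sample data are hypothetical, and it assumes every file shares the same header.

```python
import csv
import io


def merge_csv_files(files: list[str]) -> str:
    """Concatenate several CSV texts into one, keeping only the first header.

    Assumes every file has the same header row; raises ValueError otherwise.
    """
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    header = None
    for text in files:
        reader = csv.reader(io.StringIO(text))
        file_header = next(reader, None)
        if file_header is None:
            continue  # skip empty files
        if header is None:
            header = file_header
            writer.writerow(header)
        elif file_header != header:
            raise ValueError(f"header mismatch: {file_header} != {header}")
        for row in reader:
            writer.writerow(row)  # data rows only; later headers are dropped
    return out.getvalue()


# Hypothetical small files that would otherwise be loaded one by one.
small_files = [
    "id,amount\n1,10\n2,20\n",
    "id,amount\n3,30\n",
    "id,amount\n4,40\n5,50\n",
]
merged = merge_csv_files(small_files)
print(merged)
```

The point of merging first is that the Warehouse load then handles one larger file instead of many small ones, which is the bottleneck the team describes.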

Hope this helps. Please let me know if you have any further questions.


Hi @smoqt

The internal team replied as follows:

Moving 2.6 GB of files should, in general, be much faster than 2.5 hours. The only difference that affects throughput between Fabric and ADF is that in Fabric you can leverage the native workspace staging storage, while in ADF you have to bind staging to an Azure Storage account.

Hope this helps. Please let me know if you have any further questions.


Thank you. I will continue testing.


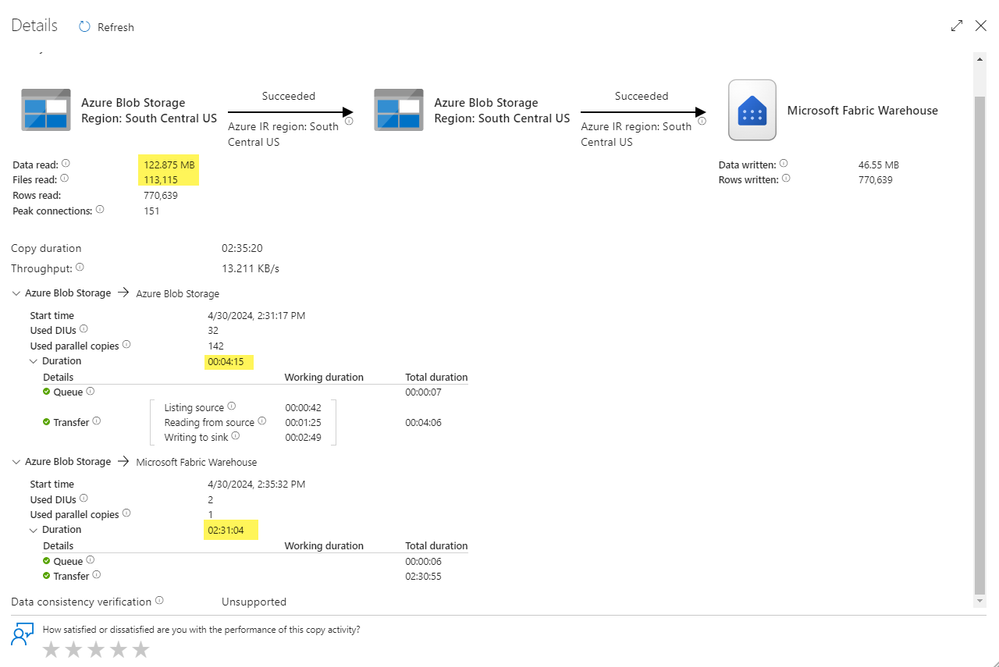

I wanted to share another example, where this time I did not receive throttling errors. Writing 123 MB to blob storage took only 4 minutes, but writing the same data to Fabric took 2.5 hours.
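The gap between the two stages is easier to see as throughput. A quick calculation using only the figures reported in this thread (assuming decimal units, 1 GB = 1000 MB):

```python
def throughput_mb_per_s(size_mb: float, seconds: float) -> float:
    """Average throughput in MB/s for size_mb moved in the given seconds."""
    return size_mb / seconds


# Figures reported in this thread (decimal units assumed: 1 GB = 1000 MB).
to_blob = throughput_mb_per_s(123, 4 * 60)             # 123 MB written to blob in 4 min
to_warehouse = throughput_mb_per_s(123, 2.5 * 3600)    # same 123 MB into Fabric in 2.5 h
first_run = throughput_mb_per_s(2.6 * 1000, 2.5 * 3600)  # 2.6 GB in 2.5 h

print(f"write to blob:     {to_blob:.3f} MB/s")
print(f"write to Fabric:   {to_warehouse:.4f} MB/s")
print(f"slowdown factor:   {to_blob / to_warehouse:.1f}x")
print(f"2.6 GB run:        {first_run:.3f} MB/s")
```

The Fabric write works out to 37.5 times slower than the blob write for the same 123 MB (9000 s vs 240 s), and the earlier 2.6 GB run averaged roughly 0.29 MB/s. This only locates the slow stage; it does not by itself explain why that stage is slow.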


Hi @smoqt

Can you please provide the run IDs for the two pipelines above?

Thanks


Example 1:

Pipeline Run ID: ddbd9f52-da90-43f3-afe1-aa1458b65f59

Activity Run ID: c9f1a5e0-0ff3-4402-b317-ef23111b505c

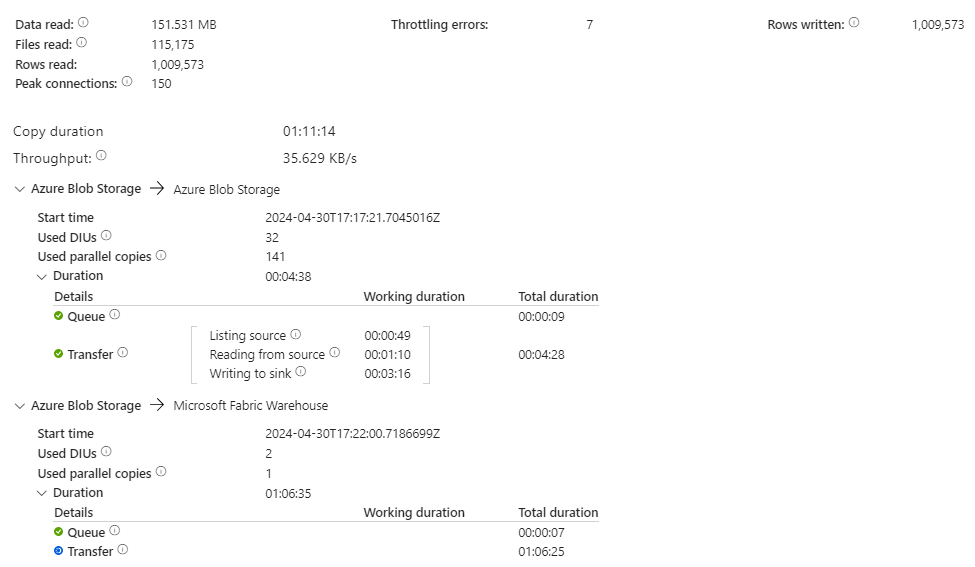

Example 2:

Pipeline Run ID: 31a31bab-42df-4b36-9777-c83ebb758b52

Activity Run ID: dc622eca-8676-4a13-a120-ca1fa962c2e1




Thanks, @Anonymous. I will test.


Here is an example from today.

The run is currently at 1 hour 12 minutes for 152 MB.

I see throttling errors and a significant performance difference between writing to Azure Blob Storage and writing to the Fabric Warehouse.

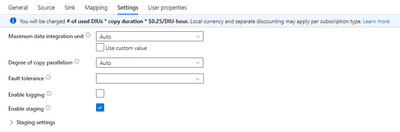

I am using the default settings for Maximum DIU and Degree of Copy Parallelism ("Auto").
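If you want to test whether the "Auto" defaults contribute to the throttling, one option is to pin the settings explicitly and compare runs. The sketch below assumes the ADF Copy activity JSON properties `dataIntegrationUnits` and `parallelCopies`; the helper name `with_copy_performance` is hypothetical, the values 8 and 4 are arbitrary placeholders rather than recommendations, and whether lowering them reduces throttling here is not established in this thread.

```python
import copy


def with_copy_performance(type_properties: dict, max_diu: int, parallel_copies: int) -> dict:
    """Return a new Copy activity typeProperties dict with explicit
    DIU and parallelism values instead of the 'Auto' defaults."""
    if max_diu <= 0 or parallel_copies <= 0:
        raise ValueError("max_diu and parallel_copies must be positive")
    updated = copy.deepcopy(type_properties)  # leave the caller's dict untouched
    # Assumption: omitting these keys corresponds to "Auto" in the UI.
    updated["dataIntegrationUnits"] = max_diu
    updated["parallelCopies"] = parallel_copies
    return updated


# Minimal hypothetical base; in practice start from your pipeline's JSON.
base = {"source": {"type": "DelimitedTextSource"}, "sink": {"type": "WarehouseSink"}}
tuned = with_copy_performance(base, max_diu=8, parallel_copies=4)
print(tuned)
```

Changing one setting at a time and comparing the activity's throughput and throttling counts is a simple way to see which side reacts.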

I will research how to mitigate the throttling errors; however, I'm curious whether the throttling is happening strictly on the Azure side or on the Fabric side.


Hi @smoqt

Thanks for using Fabric Community.

At this time, we are reaching out to the internal team to get some help on this. We will update you once we hear back from them.

Thanks
