The most efficient way to perform lookup in table

Hello guys, how are you?

I'm having a very specific issue. I know how to solve it in theory, but I'm running into serious performance problems because I'm required to use a very heavy database: an Azure cube developed by my company that provides data worldwide.

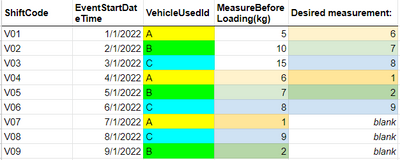

I've come up with an example fact table, where I have trips of loading vehicles, each with an initial weight at the beginning of the trip.

I need to create a measure (I can't create new columns in DirectQuery) that returns the initial weight of the next trip of the same vehicle.

It's a very simple task with ALL filters and so on; however, the real table does not return the data because of the size of the data model mentioned above.

I've been trying to use the OFFSET function, but I haven't been able to make the logic work for my problem.

Any suggestions?

Below is my latest attempt:

Thanks!


Hi @renanvg7,

AFAIK, iterative (looping) calculations over a table with a huge number of records can cause performance issues.

How many records does your report store? Please share some more detailed information to help us clarify your scenario:

How to Get Your Question Answered Quickly

In addition, you can try the following measure formula to see if it helps:

    Next trip weight =
    VAR vData =
        MAX ( 'Shifts and Events'[EventStartDateTime] )
    VAR vMenorData =
        CALCULATE (
            MIN ( 'Shifts and Events'[EventStartDateTime] ),
            FILTER (
                ALLSELECTED ( 'Shifts and Events' ),
                'Shifts and Events'[EventStartDateTime] > vData
            ),
            VALUES ( 'Shifts and Events'[VehicleUsedId] )
        )
    RETURN
        CALCULATE (
            MAX ( 'Shifts and Events'[MeasureBeforeLoading(kg)] ),
            FILTER (
                ALLSELECTED ( 'Shifts and Events' ),
                'Shifts and Events'[EventStartDateTime] = vMenorData
            ),
            VALUES ( 'Shifts and Events'[VehicleUsedId] )
        )

Regards,

Xiaoxin Sheng
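For comparison with the measure above, the OFFSET approach mentioned in the question could be sketched roughly as follows. This is an untested sketch, not a verified solution: the measure name is hypothetical, and it assumes that each visual row is one trip identified by `VehicleUsedId` and `EventStartDateTime`, that a vehicle never has two trips with the same start time, and that the cube's engine supports window functions such as OFFSET over this kind of connection, which may not be the case.

```dax
Next trip weight (OFFSET sketch) =
CALCULATE (
    MAX ( 'Shifts and Events'[MeasureBeforeLoading(kg)] ),
    -- Move one row forward from the current trip, ordered by start time,
    -- restarting the ordering for each vehicle.
    OFFSET (
        1,
        ALLSELECTED (
            'Shifts and Events'[VehicleUsedId],
            'Shifts and Events'[EventStartDateTime]
        ),
        ORDERBY ( 'Shifts and Events'[EventStartDateTime], ASC ),
        PARTITIONBY ( 'Shifts and Events'[VehicleUsedId] )
    ),
    -- Assumption: other columns of the same table (for example a trip id)
    -- may be on the visual, so clear them to avoid filtering out the next trip.
    REMOVEFILTERS ( 'Shifts and Events' )
)
```

Because OFFSET works on the two-column relation instead of scanning the whole table with FILTER twice, it may generate lighter queries, but whether it is actually faster against this cube would need to be measured.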


@Anonymous This solution improved the behaviour a lot! At least it's now retrieving data, even if I have to wait 3 or 4 minutes for it.

However, I'll ask the cube managers to provide this calculation as a column, which should be the optimal solution.

We are talking about 100k rows per month over a 2-year period, but only for my geography. (There may still be issues, because the worldwide data, which I can't access due to RLS, can reach about 2M rows per month, and I'm not sure whether that increases the calculation time.)

Anyway, thanks for the solution!

Best regards,

Renan
