- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Select Rows based on Timestamp in one table and St...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

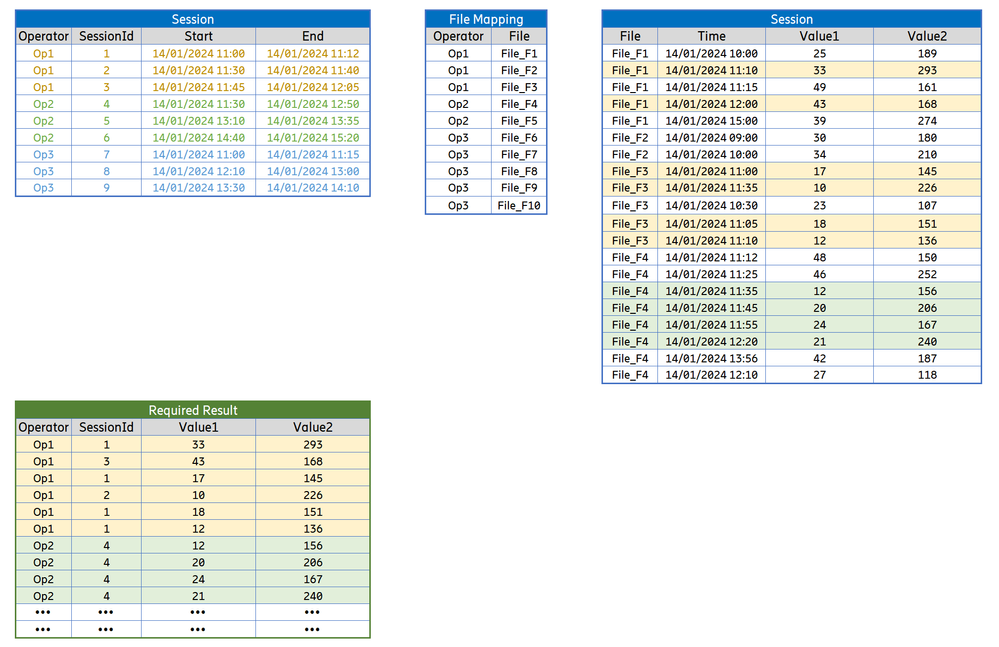

Select Rows based on Timestamp in one table and Start End Fields on a Second Table

Hi There,

I am new to Power BI, so please forgive the basic question.

I have three tables

- Session Table : Containing Start and End Times

- Mapping Table : Containg Operator to File Name mapping

- Events Table: Containing A Time stamp and some values.

What I need to do is create a result which filters the Events Table based on the Start and End times in the Session Table.

Any help would be grealy appricated.

Thanks

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

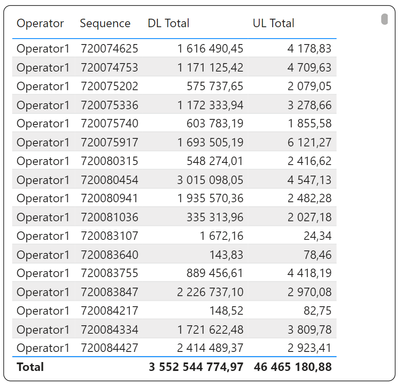

I spent around 2 hours with this query. I tried different versions of code because I wanted to speed it up, but txt source is too slow and you need to do a lot of comparism. I'm not able to make it faster. Maybe someone else can help.

Change address to your folder in Source step.

let

Source = Folder.Files("c:\Downloads\PowerQueryForum\VHalpenny\"),

FilteredSessionsTable = Table.SelectRows(Source, each ([Name] = "SessionsTable.xlsx")),

Content = FilteredSessionsTable{0}[Content],

ImportedExcelWorkbook = Excel.Workbook(Content, true),

Sheet1 = ImportedExcelWorkbook{[Item="Sheet1",Kind="Sheet"]}[Data],

FilteredRows1 = Table.SelectRows(Sheet1, each ([T200] <> null)),

RemoveMiliseconds = Table.TransformColumns(FilteredRows1, List.Transform({"T200", "T300"}, (x)=> { x, each DateTime.From(DateTime.ToText(_, [Format="yyyy-MM-dd hh:mm:ss"])) })),

Ad_DateTimeList = Table.AddColumn(RemoveMiliseconds, "DateTimeList", each List.DateTimes([T200], Duration.TotalSeconds([T300] - [T200])+1, #duration(0,0,0,1)), type list),

Ad_MergeKey1 = Table.AddColumn(Ad_DateTimeList, "MergeKey", each

if Time.Minute([T200]) = Time.Minute([T300])

then { Number.From(DateTime.ToText([T200], "yyyyMMddhhmm")) }

else { Number.From(DateTime.ToText([T200], "yyyyMMddhhmm")), Number.From(DateTime.ToText([T300], "yyyyMMddhhmm")) },

type list),

// Niektore riadky su zduplikovane kvoli MERGE neskor. Jedna sa o riadky kedy minuta z [T200] nie je rovna minute z [T300]

ExpandedMergeKey1 = Table.ExpandListColumn(Ad_MergeKey1, "MergeKey"),

TableSessions = Table.Buffer(Table.SelectColumns(ExpandedMergeKey1,{"Operator", "MergeKey", "DateTimeList"})),

StepBack = Source,

FiteredOperatorTextFiles = Table.SelectRows(StepBack, each Text.StartsWith([Name], "Operator", Comparer.OrdinalIgnoreCase) and [Extension] = ".txt" ),

RemovedOtherColumns1 = Table.SelectColumns(FiteredOperatorTextFiles,{"Content", "Name"}),

Ad_Data = Table.AddColumn(RemovedOtherColumns1, "Data", each

[ a = Table.TransformColumnNames(Table.PromoteHeaders(Csv.Document([Content],[Delimiter=";", Columns=3, Encoding=1250, QuoteStyle=QuoteStyle.None])), Text.Trim), //csv to table + promote headers + trim column names

b = Table.AddColumn(a, "Operator", (x)=> Text.BeforeDelimiter([Name], "_"), type text), //Add Operator column

c = Table.AddColumn(b, "System", (x)=> Text.Proper(Text.BetweenDelimiters(Text.Lower([Name]), "_", ".txt")), type text) //Add System column

//d = Table.FirstN(c, RepeatValue) //for query creating purpose (to load less data)

][c], type table),

CombinedData = Table.Combine(Ad_Data[Data]),

ChangedType = Table.TransformColumnTypes(CombinedData,{{"Time", type datetime}, {"MR-DC DRB PDCP DL Throughput Total (kbps)", type number}, {"MR-DC DRB PDCP UL Throughput Total (kbps)", type number}}, "en-US"),

FilteredRows2 = Table.SelectRows(ChangedType, each [Time] <> null),

RemoveMiliseconds2 = Table.TransformColumns(FilteredRows2, {{"Time", each DateTime.From(DateTime.ToText(_, [Format="yyyy-MM-dd hh:mm:ss"]))}}),

Ad_MergeKey2 = Table.AddColumn(RemoveMiliseconds2, "MergeKey", each Number.From(DateTime.ToText([Time], "yyyyMMddhhmm")), Int64.Type),

GroupedRows = Table.Group(Ad_MergeKey2, {"Operator", "MergeKey"}, {{"All", each _, type table [Time=nullable datetime, #"MR-DC DRB PDCP DL Throughput Total (kbps)"=nullable number, #"MR-DC DRB PDCP UL Throughput Total (kbps)"=nullable number, Operator=text, System=text, Date=date, Hour=number]}}),

MergedQueries = Table.NestedJoin(GroupedRows, {"Operator", "MergeKey"}, TableSessions, {"Operator", "MergeKey"}, "TableSessions", JoinKind.LeftOuter),

FilteredRows3 = Table.SelectRows(MergedQueries, each Table.RowCount([TableSessions]) <> 0),

Ad_TableSessionDateTimes = Table.AddColumn(FilteredRows3, "TableSession_DateTimes", each List.Combine([TableSessions][DateTimeList]), type list),

RemovedOtherColumns2 = Table.SelectColumns(Ad_TableSessionDateTimes,{"All", "TableSession_DateTimes"}),

ExpandedAll = Table.ExpandTableColumn(RemovedOtherColumns2, "All", {"Time", "MR-DC DRB PDCP DL Throughput Total (kbps)", "MR-DC DRB PDCP UL Throughput Total (kbps)", "Operator", "System"}, {"Time", "MR-DC DRB PDCP DL Throughput Total (kbps)", "MR-DC DRB PDCP UL Throughput Total (kbps)", "Operator", "System"}),

Ad_KeepRow = Table.AddColumn(ExpandedAll, "Keep Row", each List.Contains(List.Buffer([TableSession_DateTimes]), [Time]), type logical),

FilteredRows4 = Table.SelectRows(Ad_KeepRow, each [Keep Row] = true)

in

FilteredRows4

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oops!

Sorry, I have done so now.

https://drive.google.com/file/d/1Dsg62N8N9wA7-c8N5DBKmlsIO4SHizEw/view?usp=drive_link

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I spent around 2 hours with this query. I tried different versions of code because I wanted to speed it up, but txt source is too slow and you need to do a lot of comparism. I'm not able to make it faster. Maybe someone else can help.

Change address to your folder in Source step.

let

Source = Folder.Files("c:\Downloads\PowerQueryForum\VHalpenny\"),

FilteredSessionsTable = Table.SelectRows(Source, each ([Name] = "SessionsTable.xlsx")),

Content = FilteredSessionsTable{0}[Content],

ImportedExcelWorkbook = Excel.Workbook(Content, true),

Sheet1 = ImportedExcelWorkbook{[Item="Sheet1",Kind="Sheet"]}[Data],

FilteredRows1 = Table.SelectRows(Sheet1, each ([T200] <> null)),

RemoveMiliseconds = Table.TransformColumns(FilteredRows1, List.Transform({"T200", "T300"}, (x)=> { x, each DateTime.From(DateTime.ToText(_, [Format="yyyy-MM-dd hh:mm:ss"])) })),

Ad_DateTimeList = Table.AddColumn(RemoveMiliseconds, "DateTimeList", each List.DateTimes([T200], Duration.TotalSeconds([T300] - [T200])+1, #duration(0,0,0,1)), type list),

Ad_MergeKey1 = Table.AddColumn(Ad_DateTimeList, "MergeKey", each

if Time.Minute([T200]) = Time.Minute([T300])

then { Number.From(DateTime.ToText([T200], "yyyyMMddhhmm")) }

else { Number.From(DateTime.ToText([T200], "yyyyMMddhhmm")), Number.From(DateTime.ToText([T300], "yyyyMMddhhmm")) },

type list),

// Niektore riadky su zduplikovane kvoli MERGE neskor. Jedna sa o riadky kedy minuta z [T200] nie je rovna minute z [T300]

ExpandedMergeKey1 = Table.ExpandListColumn(Ad_MergeKey1, "MergeKey"),

TableSessions = Table.Buffer(Table.SelectColumns(ExpandedMergeKey1,{"Operator", "MergeKey", "DateTimeList"})),

StepBack = Source,

FiteredOperatorTextFiles = Table.SelectRows(StepBack, each Text.StartsWith([Name], "Operator", Comparer.OrdinalIgnoreCase) and [Extension] = ".txt" ),

RemovedOtherColumns1 = Table.SelectColumns(FiteredOperatorTextFiles,{"Content", "Name"}),

Ad_Data = Table.AddColumn(RemovedOtherColumns1, "Data", each

[ a = Table.TransformColumnNames(Table.PromoteHeaders(Csv.Document([Content],[Delimiter=";", Columns=3, Encoding=1250, QuoteStyle=QuoteStyle.None])), Text.Trim), //csv to table + promote headers + trim column names

b = Table.AddColumn(a, "Operator", (x)=> Text.BeforeDelimiter([Name], "_"), type text), //Add Operator column

c = Table.AddColumn(b, "System", (x)=> Text.Proper(Text.BetweenDelimiters(Text.Lower([Name]), "_", ".txt")), type text) //Add System column

//d = Table.FirstN(c, RepeatValue) //for query creating purpose (to load less data)

][c], type table),

CombinedData = Table.Combine(Ad_Data[Data]),

ChangedType = Table.TransformColumnTypes(CombinedData,{{"Time", type datetime}, {"MR-DC DRB PDCP DL Throughput Total (kbps)", type number}, {"MR-DC DRB PDCP UL Throughput Total (kbps)", type number}}, "en-US"),

FilteredRows2 = Table.SelectRows(ChangedType, each [Time] <> null),

RemoveMiliseconds2 = Table.TransformColumns(FilteredRows2, {{"Time", each DateTime.From(DateTime.ToText(_, [Format="yyyy-MM-dd hh:mm:ss"]))}}),

Ad_MergeKey2 = Table.AddColumn(RemoveMiliseconds2, "MergeKey", each Number.From(DateTime.ToText([Time], "yyyyMMddhhmm")), Int64.Type),

GroupedRows = Table.Group(Ad_MergeKey2, {"Operator", "MergeKey"}, {{"All", each _, type table [Time=nullable datetime, #"MR-DC DRB PDCP DL Throughput Total (kbps)"=nullable number, #"MR-DC DRB PDCP UL Throughput Total (kbps)"=nullable number, Operator=text, System=text, Date=date, Hour=number]}}),

MergedQueries = Table.NestedJoin(GroupedRows, {"Operator", "MergeKey"}, TableSessions, {"Operator", "MergeKey"}, "TableSessions", JoinKind.LeftOuter),

FilteredRows3 = Table.SelectRows(MergedQueries, each Table.RowCount([TableSessions]) <> 0),

Ad_TableSessionDateTimes = Table.AddColumn(FilteredRows3, "TableSession_DateTimes", each List.Combine([TableSessions][DateTimeList]), type list),

RemovedOtherColumns2 = Table.SelectColumns(Ad_TableSessionDateTimes,{"All", "TableSession_DateTimes"}),

ExpandedAll = Table.ExpandTableColumn(RemovedOtherColumns2, "All", {"Time", "MR-DC DRB PDCP DL Throughput Total (kbps)", "MR-DC DRB PDCP UL Throughput Total (kbps)", "Operator", "System"}, {"Time", "MR-DC DRB PDCP DL Throughput Total (kbps)", "MR-DC DRB PDCP UL Throughput Total (kbps)", "Operator", "System"}),

Ad_KeepRow = Table.AddColumn(ExpandedAll, "Keep Row", each List.Contains(List.Buffer([TableSession_DateTimes]), [Time]), type logical),

FilteredRows4 = Table.SelectRows(Ad_KeepRow, each [Keep Row] = true)

in

FilteredRows4

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I wouldn't try to do that in Power Query. Only do minimal transforms and then let Power BI do the heavy lifting in memory.

let

Source = Folder.Files("C:\Users\Downloads\Help\Sessions"),

#"Added Custom" = Table.AddColumn(Source, "Custom", each Table.PromoteHeaders(Csv.Document([Content],[Delimiter=";", Columns=3, Encoding=1252, QuoteStyle=QuoteStyle.None]), [PromoteAllScalars=true])),

#"Expanded Custom" = Table.ExpandTableColumn(#"Added Custom", "Custom", {"Time", " MR-DC DRB PDCP DL Throughput Total (kbps)", "MR-DC DRB PDCP UL Throughput Total (kbps)"}, {"Time", " MR-DC DRB PDCP DL Throughput Total (kbps)", "MR-DC DRB PDCP UL Throughput Total (kbps)"}),

#"Removed Other Columns" = Table.SelectColumns(#"Expanded Custom",{"Name", "Time", " MR-DC DRB PDCP DL Throughput Total (kbps)", "MR-DC DRB PDCP UL Throughput Total (kbps)"}),

#"Renamed Columns" = Table.RenameColumns(#"Removed Other Columns",{{" MR-DC DRB PDCP DL Throughput Total (kbps)", "DL"}, {"MR-DC DRB PDCP UL Throughput Total (kbps)", "UL"}}),

#"Changed Type" = Table.TransformColumnTypes(#"Renamed Columns",{{"Time", type datetime}, {"DL", type number}, {"UL", type number}}),

#"Filtered Rows" = Table.SelectRows(#"Changed Type", each [DL]>0 or [UL]>0),

#"Split Column by Delimiter" = Table.SplitColumn(#"Filtered Rows", "Name", Splitter.SplitTextByDelimiter("_", QuoteStyle.Csv), {"Operator", "System"}),

#"Replaced Value" = Table.ReplaceValue(#"Split Column by Delimiter",".txt","",Replacer.ReplaceText,{"System"})

in

#"Replaced Value"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @VHalpenny,

@lbendlin is right. I created pbix file for you, but this will work only if you have 1 operator in SessionsTable. If you have multiple - you have to use different logic.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much for your help!

I really appriacte it!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you dufoq3,

I really appricate the time and effort you put into this.

I will use what you have suggested, even if it is slow it is better than anything I could do!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have you tried loading your tables as is and connecting them via the data model?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi lbendlin,

No I have not.

I do not know how to go about it it.

In particular how do I filter the data based on the start and ends?

Thanks V.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Please provide sample data that covers your issue or question completely, in a usable format (not as a screenshot).

Do not include sensitive information or anything not related to the issue or question.

If you are unsure how to upload data please refer to https://community.fabric.microsoft.com/t5/Community-Blog/How-to-provide-sample-data-in-the-Power-BI-...

Please show the expected outcome based on the sample data you provided.

Want faster answers? https://community.fabric.microsoft.com/t5/Desktop/How-to-Get-Your-Question-Answered-Quickly/m-p/1447...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi lbendlin,

I am having issues sharing the data with you. I wanted to give a zip file but both OneDrive and Dopbox ask me to specify an e-mail address.

Instead I will explain the source files with some sample data.

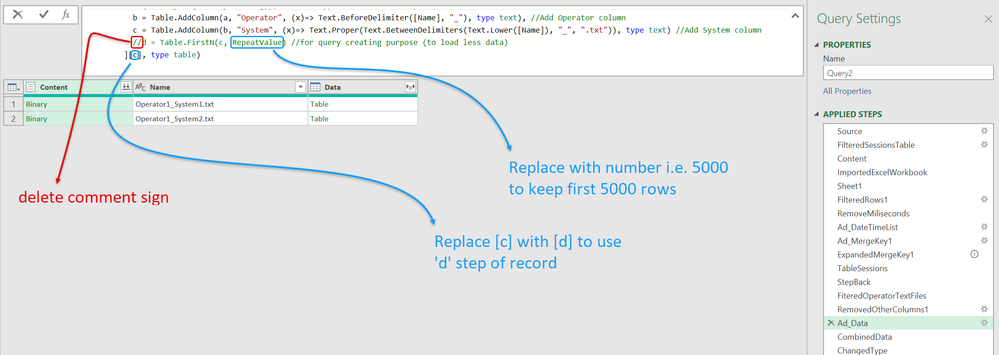

1. There is a sessions master excel file which contains the following information format. (I have reduced the columns and anonymised the data)

| Operator | Sequence | Point of Interest | Infrastructure Type Fine | T200 | T300 |

| Operator1 | 720074625 | Drive | cities | 2023-07-20 07:46:32.579 | 2023-07-20 07:46:39.579 |

| Operator1 | 720075202 | Drive | cities | 2023-07-20 07:52:08.142 | 2023-07-20 07:52:15.142 |

| Operator1 | 720075740 | Drive | cities | 2023-07-20 07:57:46.954 | 2023-07-20 07:57:53.954 |

| Operator1 | 720080315 | Drive | cities | 2023-07-20 08:03:22.375 | 2023-07-20 08:03:29.375 |

| Operator1 | 720080941 | Drive | cities | 2023-07-20 08:09:48.694 | 2023-07-20 08:09:55.694 |

| Operator1 | 720083755 | Drive | cities | 2023-07-20 08:38:02.599 | 2023-07-20 08:38:09.599 |

| Operator1 | 720084334 | Drive | cities | 2023-07-20 08:43:40.796 | 2023-07-20 08:43:47.796 |

| Operator1 | 720084912 | Drive | cities | 2023-07-20 08:49:19.291 | 2023-07-20 08:49:26.291 |

| Operator1 | 720085459 | Drive | cities | 2023-07-20 08:55:05.787 | 2023-07-20 08:55:12.787 |

| Operator1 | 720090048 | Drive | cities | 2023-07-20 09:00:54.729 | 2023-07-20 09:01:01.729 |

| Operator1 | 720090618 | Drive | cities | 2023-07-20 09:06:25.142 | 2023-07-20 09:06:32.142 |

| Operator1 | 720091154 | Drive | cities | 2023-07-20 09:12:01.161 | 2023-07-20 09:12:08.161 |

| Operator1 | 720091737 | Drive | cities | 2023-07-20 09:17:44.544 | 2023-07-20 09:17:51.544 |

| Operator1 | 720092309 | Drive | cities | 2023-07-20 09:23:16.070 | 2023-07-20 09:23:23.070 |

| Operator1 | 720092843 | Drive | cities | 2023-07-20 09:28:50.806 | 2023-07-20 09:28:57.806 |

| Operator1 | 720093424 | Drive | cities | 2023-07-20 09:34:30.959 | 2023-07-20 09:34:37.959 |

| Operator1 | 720094013 | Drive | cities | 2023-07-20 09:40:19.849 | 2023-07-20 09:40:26.849 |

| Operator1 | 720094726 | Drive | cities | 2023-07-20 09:47:33.674 | 2023-07-20 09:47:40.674 |

| Operator1 | 720095301 | Drive | cities | 2023-07-20 09:53:08.537 | 2023-07-20 09:53:15.537 |

| Operator1 | 720095852 | Drive | cities | 2023-07-20 09:59:00.670 | 2023-07-20 09:59:07.670 |

| Operator1 | 720100440 | Drive | cities | 2023-07-20 10:04:48.336 | 2023-07-20 10:04:55.336 |

| Operator1 | 720101034 | Drive | cities | 2023-07-20 10:10:41.640 | 2023-07-20 10:10:48.640 |

| Operator1 | 720101615 | Drive | cities | 2023-07-20 10:16:22.645 | 2023-07-20 10:16:29.645 |

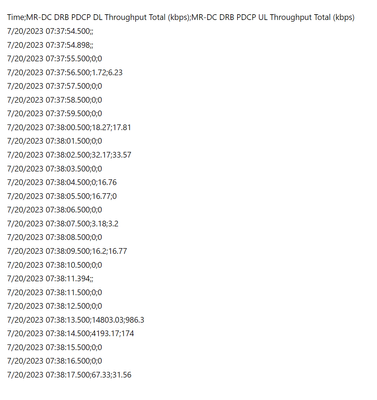

2. There is a directory which holds csv files with the follwing format (again I have simplified the columns).

Time;MR-DC DRB PDCP DL Throughput Total (kbps);MR-DC DRB PDCP UL Throughput Total (kbps)

7/20/2023 07:37:54.500;;

7/20/2023 07:37:54.898;;

7/20/2023 07:37:55.500;0;0

7/20/2023 07:37:56.500;1.72;6.23

7/20/2023 07:37:57.500;0;0

7/20/2023 07:37:58.500;0;0

7/20/2023 07:37:59.500;0;0

7/20/2023 07:38:00.500;18.27;17.81

7/20/2023 07:38:01.500;0;0

7/20/2023 07:38:02.500;32.17;33.57

7/20/2023 07:38:03.500;0;0

7/20/2023 07:38:04.500;0;16.76

7/20/2023 07:38:05.500;16.77;0

7/20/2023 07:38:06.500;0;0

7/20/2023 07:38:07.500;3.18;3.2

7/20/2023 07:38:08.500;0;0

7/20/2023 07:38:09.500;16.2;16.77

7/20/2023 07:38:10.500;0;0

7/20/2023 07:38:11.394;;

7/20/2023 07:38:11.500;0;0

7/20/2023 07:38:12.500;0;0

7/20/2023 07:38:13.500;14803.03;986.3

7/20/2023 07:38:14.500;4193.17;174

7/20/2023 07:38:15.500;0;0

7/20/2023 07:38:16.500;0;0

7/20/2023 07:38:17.500;67.33;31.56

3. Unfortunately I cannot provide a sample of the required output because it is part of a larger process. However what I am looking for is to file out the events in the individual sessions in the csv files using the time window defined in the sessions mater excel file, (T200 : Start Time - T300 : End Time). This information should form a new table witht the same information as in the csv file, but excluding times outside the defined session windows.

Thanks for your help,

V.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oops!

Sorry, that mapping table I created in excel, it was a mapping between the operator and the filename.

| Operator | File |

| Operator1 | System_File1 |

| Operator1 | System_File2 |

| Operator1 | System_File3 |

| Operator2 | System_File4 |

The filename column I created in Power BI when transforming the csv and the operator name is in the sessions master file.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

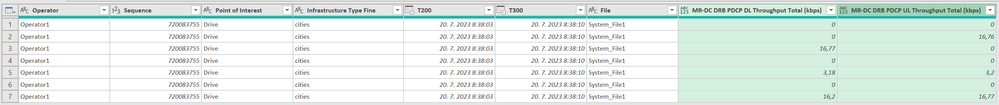

When I transform the events table in Power BI

It has the equivilent of this

File;Time;MR-DC DRB PDCP DL Throughput Total (kbps);MR-DC DRB PDCP UL Throughput Total (kbps)

System_File1;7/20/2023 07:37:54.500;;

System_File1;7/20/2023 07:37:54.898;;

System_File1;7/20/202System1;3 07:37:55.500;0;0

System_File1;7/20/2023 07:37:56.500;1.72;6.23

System_File1;7/20/2023 07:37:57.500;0;0

System_File1;7/20/2023 07:37:58.500;0;0

System_File1;7/20/2023 07:37:59.500;0;0

System_File1;7/20/2023 07:38:00.500;18.27;17.81

System_File1;7/20/2023 07:38:01.500;0;0

System_File1;7/20/2023 07:38:02.500;32.17;33.57

System_File1;7/20/2023 07:38:03.500;0;0

System_File1;7/20/2023 07:38:04.500;0;16.76

System_File1;7/20/2023 07:38:05.500;16.77;0

System_File1;7/20/2023 07:38:06.500;0;0

System_File1;7/20/2023 07:38:07.500;3.18;3.2

System_File1;7/20/2023 07:38:08.500;0;0

System_File1;7/20/2023 07:38:09.500;16.2;16.77

System_File1;7/20/2023 07:38:10.500;0;0

System_File1;7/20/2023 07:38:11.394;;

System_File1;7/20/2023 07:38:11.500;0;0

System_File1;7/20/2023 07:38:12.500;0;0

System_File1;7/20/2023 07:38:13.500;14803.03;986.3

System_File1;7/20/2023 07:38:14.500;4193.17;174

System_File1;7/20/2023 07:38:15.500;0;0

System_File1;7/20/2023 07:38:16.500;0;0

System_File1;7/20/2023 07:38:17.500;67.33;31.56

Thanks

V.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm sorry, but don't be that lazy and check your data before posting please!

There were no match between Session and Event table, so I've edited your sample.

Result

let

SessionTable = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jZVLihwxDECvEno9Y/S1LK9nnwMMvQihF71K6Aw5f2T1rlrBBQVleMUroZ8/Py/ff98eP75+PfDydjECMOmkcf543P/e4v3z/nW//YkDAfE72DvBN7ApfTI1NS+JJ7m+FXqNr/d6pQmjoVBFUJPUehM4oV9hNlepiHKSSj+AcZucMYEnUWPTkniSWu+CJ/Q+ZbTux+iTqCYp9fHfE9FzeKK0fiztk3iSUi/MstcLT4Fm3ktiSWq947ZzQuITvZFjRagnKfUq6nu96gRtNqwiSEkqvQPI2Ol9AkyVZnTMfRCMJ0mt73hG3ydpMVZJYp7/N1aOqNvSRuJpxYj9mPsnGUlqvbGd0MfUSlM59n0SxSSlnhi2pY3m4Im9gUFFiJPU+uj8E/poEWgDjn3/JJak1LPQidyzTIZYXK+ds4glKfUCeCJ6gTVWMYMVibFapNYb9RN6m8yt22tpg8S6WKTUK8N2Y8ZS5NV+ysepTRLXySK1fuh254RkDW7E+No5i1iSQo+xE7a3FcIEWfueuVck9v0ipR5hu5BDEo9g6wIlGUlqfd9ehkvS12XYRUviSa7Xfw==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Operator = _t, Sequence = _t, #"Point of Interest" = _t, #"Infrastructure Type Fine" = _t, T200 = _t, T300 = _t]),

SessionTable_ChangedType = Table.TransformColumnTypes(SessionTable,{{"Sequence", Int64.Type}, {"T200", type datetime}, {"T300", type datetime}}),

MappingTable = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45W8i9ILUosyS8yVNJRCq4sLknNjXfLzEk1VIrVwSlphE/SGEXSCFXSRCk2FgA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Operator = _t, File = _t]),

EventTable = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("lZNBS8QwEIX/SuhJQcdMJs2kzc0tnhSWtZ7KsigUd3GXXdzuwX9v2uhFkE5o6Ol7L0Pem64rHnb7PrS7Qx+eVrfNQjWre7VsFkvVPKp2+3m8vG9Pl0G1x+F1r64+3k7n67/ky79ksb7piuev89AfNuNFGPjO6HgMKe1r4rq0UGodgoj0lReR5eQZPwHrJhaBTXBgSKDgDHefwVZS1tdap6k9GA7I4FGgwQx/M7FkADkQQckCDWX42x8WHbAT8CnRkWaRv8uYJeVJgD7+jEAgDjWy1e/gJk0/L0Ett0cEquz8Tkyk3NVksCl1tD7mrylU3sHsEkVZKoDFisaKIVuBRrzWkc0oAKYCOAaiQPGdYiHX3w==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Column1 = _t]),

EventTable_Split = Table.SplitColumn(EventTable, "Column1", Splitter.SplitTextByDelimiter(";", QuoteStyle.Csv)),

EvetnTable_PromotedHeaders = Table.PromoteHeaders(EventTable_Split, [PromoteAllScalars=true]),

EventTable_ChangedType = Table.TransformColumnTypes(EvetnTable_PromotedHeaders,{{"Time", type datetime}, {"MR-DC DRB PDCP DL Throughput Total (kbps)", type number}, {"MR-DC DRB PDCP UL Throughput Total (kbps)", type number}}, "en-US"),

// Merged Session and Mapping

MergedQueriesMapping = Table.NestedJoin(SessionTable_ChangedType, {"Operator"}, MappingTable, {"Operator"}, "MappingTable", JoinKind.LeftOuter),

ExpandedMappingTable = Table.ExpandTableColumn(MergedQueriesMapping, "MappingTable", {"File"}, {"File"}),

MergedQueriesEvent = Table.NestedJoin(ExpandedMappingTable, {"File"}, EventTable_ChangedType, {"File"}, "EventTable_ChangedType", JoinKind.LeftOuter),

FilteredRows = Table.SelectRows(MergedQueriesEvent, each Table.RowCount([EventTable_ChangedType]) > 0),

Ad_Data = Table.AddColumn(FilteredRows, "Data", each Table.SelectRows([EventTable_ChangedType], (x)=> x[Time] >= [T200] and x[Time] <= [T300]), type table),

ExpandedData = Table.ExpandTableColumn(Ad_Data, "Data", {"MR-DC DRB PDCP DL Throughput Total (kbps)", "MR-DC DRB PDCP UL Throughput Total (kbps)"}, {"MR-DC DRB PDCP DL Throughput Total (kbps)", "MR-DC DRB PDCP UL Throughput Total (kbps)"}),

FilteredRows2 = Table.SelectRows(ExpandedData, each ([#"MR-DC DRB PDCP DL Throughput Total (kbps)"] <> null)),

RemovedColumns = Table.RemoveColumns(FilteredRows2,{"EventTable_ChangedType"})

in

RemovedColumns- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi dufoq,

I was not intentionally being lazy.

Origionally I put the concept I was trying to explain in my origional post.

What I was looking for is an approach to filter rows based on data in another table.

I.e. start and end times in one table and the events listed by time in another.

I did try to share the source data using OndDrive or Dropbox, both of which need a corresponding email to allow permissions to access.

The system_files in question have over 700,000 entries in them with more than 350 Columns.

This is simplified down to explain the concept.

Thank you for your reply I will try to replicate it.

I appricate your time and effort.

Thanks,

V.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.