- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Remove duplicate row, as well as row that was duplicated.

Our labelling software generates a transaction report that represents each label generated, I pull data from this report using PowerQuery. Each row is an individual label or transaction.

When an error is made during the labelling process and the operator deletes/reverses that label, the transaction report shows it as a normal transaction with a 1 in a Transaction Type (Trx Type) column, followed by another row with the same information, but has a -1 as the transaction type to represent the deleted label.

Is there any way I can remove both these rows in my query? I know I can filter -1 Trx Types, but this leaves the original transaction in place. These is also a serial column for each transaction, these match on the -1 and 1 rows, so could potentially be used? Below is an example of the report.

| Date | Product PPN | Description | Prod Date | Serial | Trx Type |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70001 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70002 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70003 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70004 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70005 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70006 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70007 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70008 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70009 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70010 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70011 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL (*Deleted*) | 2/11/2023 | 70011 | -1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70012 | 1 |

| 4/12/2023 | 10751 | PRODUCT LABEL | 2/11/2023 | 70013 | 1 |

Any help would be appreciated.

Thank you

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

HI @Andrew__K ,

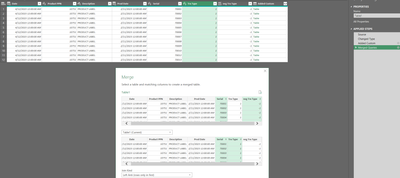

Steps taken:

1. Unique Row Identifier: Combination of all columns delimeted by "|" in the Table Query (Your input)

2. Created a new query (RowsToRemove) referencing this

3. Grouped this query by Serial along with a summarized table of all rows and a count of all distinct rows. If distinct count > 1 then there are duplicates.

4. Filtered for rows >1 . Expanded the table.

5. Merged Table and Rows to delete as a new query on the unique identifier (Output - shown below). The rows which don't have a unique idenitifer are the rows which didn't have any duplicates in the raw data and are retained.

Copy and paste the following queries in the advanced editor of a blank query:

Table:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("pdKxDoIwEAbgV7l0UoKhV0B0VOtGlChODQOBDiRESK2Jvr1tIqOD3NY/93+XDqcUk7XVLGRQmKF9NhaK4gQuS/1oTDfabrj76KcwVa/adHXvXqV5QfkeNatCxZIIRSS4iN0AeZaiZ5ezvB1KyHf7Y+6yiBCnTsY59x2crQVJxySdkHRK0muSzkh6Q9JbikZO0n/cGiwCqXttdRssf2xazf8I6Wzxe7bVBw==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"(blank)" = _t, #"(blank).1" = _t, #"(blank).2" = _t, #"(blank).3" = _t, #"(blank).4" = _t, #"(blank).5" = _t]),

#"Promoted Headers" = Table.PromoteHeaders(Source, [PromoteAllScalars=true]),

#"Changed Type" = Table.TransformColumnTypes(#"Promoted Headers",{{"Date", type date}, {" Product PPN ", Int64.Type}, {"Description ", type text}, {"Prod Date", type date}, {" Serial", Int64.Type}, {"Trx Type", Int64.Type}}),

#"Unique Row Identifier" = Table.AddColumn(#"Changed Type", "Unique Row Identifier", each Text.Combine({Text.From([Date], "en-CA"), Text.From([#" Product PPN "], "en-CA"), [#"Description "], Text.From([Prod Date], "en-CA"), Text.From([#" Serial"], "en-CA"), Text.From([Trx Type], "en-CA")}, "|"), type text)

in

#"Unique Row Identifier"RowstoRemove:

let

Source = Table,

#"Grouped Rows" = Table.Group(Source, {" Serial"}, {{"Data", each _, type table [Date=nullable date, #" Product PPN "=nullable number, #"Description "=nullable text, Prod Date=nullable date, #" Serial"=nullable number, Trx Type=nullable number, Unique Row Identifier=text]}, {"Distinct Row Count", each Table.RowCount(Table.Distinct(_)), Int64.Type}}),

#"Filtered Rows" = Table.SelectRows(#"Grouped Rows", each ([Distinct Row Count] = 2)),

#"Removed Other Columns" = Table.SelectColumns(#"Filtered Rows",{"Data"}),

#"Expanded Data" = Table.ExpandTableColumn(#"Removed Other Columns", "Data", {"Date", " Product PPN ", "Description ", "Prod Date", " Serial", "Trx Type", "Unique Row Identifier"}, {"Date", " Product PPN ", "Description ", "Prod Date", " Serial", "Trx Type", "Unique Row Identifier"})

in

#"Expanded Data"Output:

let

Source = Table.NestedJoin(Table, {"Unique Row Identifier"}, RowsToRemove, {"Unique Row Identifier"}, "RowsToRemove", JoinKind.LeftOuter),

#"Expanded RowsToRemove" = Table.ExpandTableColumn(Source, "RowsToRemove", {"Unique Row Identifier"}, {"Unique Row Identifier.1"}),

#"Filtered Rows" = Table.SelectRows(#"Expanded RowsToRemove", each ([Unique Row Identifier.1] = null))

in

#"Filtered Rows"

If my response(s) assisted you in any way, don't forget to drop me a Kudos 🙂

Kind Regards,

Avinash

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thank you! Using your suggestions as well as others, I ended up grouping a merge of PPN-DATE-SERIAL with a row count and all rows column, filtering >1 on the row count and then expanding the all rows column.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Easier to just Table.ReplaceValue "-" with "", then Keep Duplicates, then Table.Join the step with the duplicates to the last step, using JoinKind.LeftAnti. So

RemoveValue = Table.ReplaceValue(PriorStepOrTableName, //whatever the code is to replace "-" with "" in the Serial Column,

KeepDups = //Use the Keep Duplicates GUI function,

RenameSerial = //Rename Serial to Serial1,

DeleteDups = TableJoin(KeepDups,, {"Serial"}, RenameSerial, {"Serial1}, JoinKind.LeftAnti)

--Nate

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello, @Andrew__K

s = your_table,

deleted = List.Buffer(Table.SelectRows(s, each [Trx Type] = - 1)[Serial]),

filtered = Table.SelectRows(s, each not List.Contains(deleted, [Serial]))- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Andrew__K ,

You could add a custom column "neg Trx Type" and multiply Trx Type by -1.

Next do a self left anti join on Serial and Neg Trx Type against Serial and Trx Type to remove offsetting rows.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

HI @Andrew__K ,

Steps taken:

1. Unique Row Identifier: Combination of all columns delimeted by "|" in the Table Query (Your input)

2. Created a new query (RowsToRemove) referencing this

3. Grouped this query by Serial along with a summarized table of all rows and a count of all distinct rows. If distinct count > 1 then there are duplicates.

4. Filtered for rows >1 . Expanded the table.

5. Merged Table and Rows to delete as a new query on the unique identifier (Output - shown below). The rows which don't have a unique idenitifer are the rows which didn't have any duplicates in the raw data and are retained.

Copy and paste the following queries in the advanced editor of a blank query:

Table:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("pdKxDoIwEAbgV7l0UoKhV0B0VOtGlChODQOBDiRESK2Jvr1tIqOD3NY/93+XDqcUk7XVLGRQmKF9NhaK4gQuS/1oTDfabrj76KcwVa/adHXvXqV5QfkeNatCxZIIRSS4iN0AeZaiZ5ezvB1KyHf7Y+6yiBCnTsY59x2crQVJxySdkHRK0muSzkh6Q9JbikZO0n/cGiwCqXttdRssf2xazf8I6Wzxe7bVBw==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"(blank)" = _t, #"(blank).1" = _t, #"(blank).2" = _t, #"(blank).3" = _t, #"(blank).4" = _t, #"(blank).5" = _t]),

#"Promoted Headers" = Table.PromoteHeaders(Source, [PromoteAllScalars=true]),

#"Changed Type" = Table.TransformColumnTypes(#"Promoted Headers",{{"Date", type date}, {" Product PPN ", Int64.Type}, {"Description ", type text}, {"Prod Date", type date}, {" Serial", Int64.Type}, {"Trx Type", Int64.Type}}),

#"Unique Row Identifier" = Table.AddColumn(#"Changed Type", "Unique Row Identifier", each Text.Combine({Text.From([Date], "en-CA"), Text.From([#" Product PPN "], "en-CA"), [#"Description "], Text.From([Prod Date], "en-CA"), Text.From([#" Serial"], "en-CA"), Text.From([Trx Type], "en-CA")}, "|"), type text)

in

#"Unique Row Identifier"RowstoRemove:

let

Source = Table,

#"Grouped Rows" = Table.Group(Source, {" Serial"}, {{"Data", each _, type table [Date=nullable date, #" Product PPN "=nullable number, #"Description "=nullable text, Prod Date=nullable date, #" Serial"=nullable number, Trx Type=nullable number, Unique Row Identifier=text]}, {"Distinct Row Count", each Table.RowCount(Table.Distinct(_)), Int64.Type}}),

#"Filtered Rows" = Table.SelectRows(#"Grouped Rows", each ([Distinct Row Count] = 2)),

#"Removed Other Columns" = Table.SelectColumns(#"Filtered Rows",{"Data"}),

#"Expanded Data" = Table.ExpandTableColumn(#"Removed Other Columns", "Data", {"Date", " Product PPN ", "Description ", "Prod Date", " Serial", "Trx Type", "Unique Row Identifier"}, {"Date", " Product PPN ", "Description ", "Prod Date", " Serial", "Trx Type", "Unique Row Identifier"})

in

#"Expanded Data"Output:

let

Source = Table.NestedJoin(Table, {"Unique Row Identifier"}, RowsToRemove, {"Unique Row Identifier"}, "RowsToRemove", JoinKind.LeftOuter),

#"Expanded RowsToRemove" = Table.ExpandTableColumn(Source, "RowsToRemove", {"Unique Row Identifier"}, {"Unique Row Identifier.1"}),

#"Filtered Rows" = Table.SelectRows(#"Expanded RowsToRemove", each ([Unique Row Identifier.1] = null))

in

#"Filtered Rows"

If my response(s) assisted you in any way, don't forget to drop me a Kudos 🙂

Kind Regards,

Avinash

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thank you! Using your suggestions as well as others, I ended up grouping a merge of PPN-DATE-SERIAL with a row count and all rows column, filtering >1 on the row count and then expanding the all rows column.

Helpful resources

Power BI Monthly Update - July 2025

Check out the July 2025 Power BI update to learn about new features.

| Subject | Author | Posted | |

|---|---|---|---|

| 05-27-2025 05:36 AM | |||

| 05-15-2025 12:57 AM | |||

| 05-21-2025 08:54 AM | |||

| 09-13-2018 09:17 PM | |||

|

Anonymous

| 01-08-2018 03:13 PM |