Linked Service in ADF to OneLake

Hi,

I was wondering if anyone knew of a workaround to let existing resources in ADF or Azure Synapse write to OneLake.

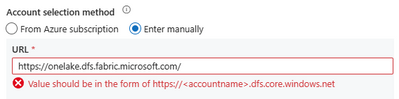

I was hoping I could configure a service principal and then set up a linked service in ADF. However, it enforces a naming convention on the URL:

For reference I took the URL from: OneLake access and APIs - Microsoft Fabric | Microsoft Learn
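To make the mismatch concrete, here is a minimal Python sketch. It builds a OneLake URL in the `https://onelake.dfs.fabric.microsoft.com/<workspace>/<item>.<itemtype>/<path>` form from the linked docs and tests it against an ADLS Gen2-style host pattern. The regex is an assumption standing in for whatever check the linked service actually applies, and the workspace and lakehouse names are hypothetical.

```python
import re
from urllib.parse import urlparse

# OneLake's DFS endpoint, per "OneLake access and APIs".
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"

# Assumption: a stand-in for the kind of validation a linked service might
# apply, accepting only hosts shaped like <account>.dfs.core.windows.net.
ADLS_GEN2_HOST = re.compile(r"^[a-z0-9]{3,24}\.dfs\.core\.windows\.net$")


def onelake_url(workspace, item, item_type, path=""):
    """Build https://onelake.dfs.fabric.microsoft.com/<workspace>/<item>.<itemtype>/<path>."""
    base = f"https://{ONELAKE_HOST}/{workspace}/{item}.{item_type}"
    return f"{base}/{path.lstrip('/')}" if path else base


def looks_like_adls_gen2(url):
    """Return True if the URL's host matches the ADLS Gen2 account pattern."""
    host = urlparse(url).hostname or ""
    return bool(ADLS_GEN2_HOST.match(host))


# Hypothetical workspace and lakehouse names, for illustration only.
url = onelake_url("MyWorkspace", "MyLakehouse", "Lakehouse", "Files/raw")
print(url)
print(looks_like_adls_gen2(url))
print(looks_like_adls_gen2("https://mystorageacct.dfs.core.windows.net"))
```

Under that assumed pattern the OneLake URL is rejected because its host is not a storage-account name under `dfs.core.windows.net`, which would explain a validation error of this kind.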

Thanks

Ben

@bcdobbs It depends on what you want to do with the data. If your source data is already structured/curated, you generally don't need to copy it again into the lakehouse/warehouse; shortcuts will work.

@GeethaT-MSFT Will the connectors for the Fabric copy data activity be expanded to match what is available in Azure Data Factory? For instance, I have on-premises Oracle data that I would like to land in OneLake.

Thank you.

@kdoherty Yes, on-prem connectivity is tracked for GA. Until then, a workaround is to use on-prem gateways in Power BI to stage the data in a cloud location and then use the copy activity.

That's good news. Thank you for replying.

I fully intend to once it supports what I need! Currently, the Fabric copy data activity in a pipeline has only very basic API support. I need to be able to pass a bearer key in the auth header (ideally one I've retrieved from Key Vault or a new Fabric equivalent) or, for internal Microsoft stuff, use a managed identity/service principal.
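As a rough illustration of the bearer-header requirement, this is a minimal Python sketch using only the standard library. The endpoint URL, the `API_TOKEN` environment variable, and the helper name are all hypothetical. It assumes the secret has already been fetched from a vault and only builds the request, without sending it.

```python
import os
import urllib.request


def build_authorized_request(url, token):
    """Return a GET request that carries the token in the Authorization header."""
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


# Assumption: the secret was retrieved from a vault beforehand and exposed
# through an environment variable named API_TOKEN (hypothetical name).
token = os.environ.get("API_TOKEN", "example-token")

# Hypothetical endpoint; the request is built here but never sent.
req = build_authorized_request("https://api.example.com/v1/items", token)
print(req.get_method(), req.full_url)
print("Authorization header set:", req.has_header("Authorization"))
```

Keeping the token out of the pipeline definition and resolving it at run time is the point of the Key Vault step; the request itself is just a standard `Authorization: Bearer <token>` header.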

I was just thinking that until that's available (I understand it's coming), I could use ADF in Azure to land the data while I experiment with Fabric.

That said, having now played with it more, I think the way to go is simply to create a shortcut to my existing ADLS storage and get the data in that way.
