Join the #PBI10 DataViz contest

Power BI is turning 10, and we’re marking the occasion with a special community challenge. Use your creativity to tell a story, uncover trends, or highlight something unexpected.

Get startedJoin us at FabCon Vienna from September 15-18, 2025, for the ultimate Fabric, Power BI, SQL, and AI community-led learning event. Save €200 with code FABCOMM. Get registered

- Fabric platform forums

- Forums

- Get Help

- Fabric platform

- Lakehouse Add or Remove columns from table

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Lakehouse Add or Remove columns from table

Not sure if this is the right forum or not - but here is the issue.

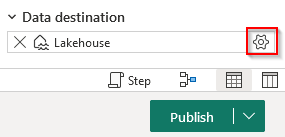

We are loading data to a Lakehouse using gen 2 data flows (for now they are just pointing at exisitn gen 1 dataflows then doing the lakehouse insert - we will recify this later on).

Over time it is typical for columns to be added, removed and / or updated in a dataflow - with a datamart these changes are reflected automatically in the schema - however with a laehouse when adding a new column to the dtaflow i can see no way to bring that into the lakehouse.

What do i need to do here - only options i can see are

1: import it as a new table but that seems to be very clunky as you would need to update queoroes / stored procedures on your sql end point to cater for this

2: Delete exisitng table in lakehouse and then add a new one with the same name.

Am i missing something ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It seems to be possible to add columns in Lakehouse table now by using notebook.

I am able to use the following type of command in a Notebook:

%%sql

ALTER TABLE tableName

ADD COLUMN columnName dataType

And the table will get updated also in SQL Analytics Endpoint and Direct Lake Semantic Model, something which was a problem before.

Ref. this thread:

https://community.fabric.microsoft.com/t5/General-Discussion/SQL-ALTER-command/m-p/3748079#M4861

However, I get an error if I try to rename or remove (drop) a column.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Maybe this is a solution for renaming columns, dropping columns and changing column type in Lakehouse tables:

https://community.fabric.microsoft.com/t5/General-Discussion/Dropping-and-recreating-lakehouse-table...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is 3rd option which worked for me:

3.Rename original table. For exmplate rename "Table" to "Table1"

Then go to your Dataflow and setup destination of your Dataflow again. (Of course use create new table called "Table".)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So where we ended up with this is moving to using a warehouse for almost everything - and creating our own tables in it.

This allows you to alter them and create primary keys (not enforced) .

Lakehouse is good for super unstructured data but if you have structured data then a warehouse is a much better option.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have used a Python notebook to add a column to an existing table and that works just fine. One can use spark dataframe or pyspark.pandas dataframe to get the desired outcome.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Would really appreciate any additional insight or links to resources that could be provided on this subject.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

could you share how to do this in python?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I was wondering the same. 🙂

Helpful resources

Join our Fabric User Panel

This is your chance to engage directly with the engineering team behind Fabric and Power BI. Share your experiences and shape the future.

Fabric Monthly Update - June 2025

Check out the June 2025 Fabric update to learn about new features.

| User | Count |

|---|---|

| 55 | |

| 27 | |

| 18 | |

| 10 | |

| 4 |

| User | Count |

|---|---|

| 72 | |

| 67 | |

| 21 | |

| 8 | |

| 6 |