Join us at the 2025 Microsoft Fabric Community Conference

March 31 - April 2, 2025, in Las Vegas, Nevada. Use code MSCUST for a $150 discount! Early bird discount ends December 31.

Register NowBe one of the first to start using Fabric Databases. View on-demand sessions with database experts and the Microsoft product team to learn just how easy it is to get started. Watch now

- Fabric platform forums

- Forums

- Get Help

- Fabric platform

- Re: Dropping and recreating lakehouse table

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dropping and recreating lakehouse table

Hi,

I have read, when needing to rename or remove columns in a Lakehouse table, that a suggested solution is to drop the table and create a new table with the new table schema.

I am wondering what will be the consequences of dropping and recreating a Lakehouse table?

- If the table is part of a relationship in a direct lake semantic model, or

- If the table is the target of a OneLake shortcut

Will the relationship in the direct lake semantic model be broken, after the table has been dropped and recreated?

Will the shortcut be broken, after the table has been dropped and recreated?

Anyone have some information or documentation about this?

(Provided the table name will be exactly the same after recreating the table, but some column names and the number of columns may have changed. Let's assume the relationship key column still has the same name and type.)

Thank you! 😀

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you @Anonymous,

I tried using ALTER TABLE command in Spark notebook.

ADD COLUMN worked for me, but RENAME COLUMN or DROP COLUMN did not work for me.

Basically I get an error message telling me I need to enable column mapping.

If I enable column mapping then I am able to rename or drop columns in the Notebook interface, however the table doesn't work together with the SQL Analytics Endpoint and Direct Lake Semantic Model after enabling column mapping.

You can read about it in my most recent comment here:

https://community.fabric.microsoft.com/t5/General-Discussion/SQL-ALTER-command/m-p/3748079

Thank you 😀

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @frithjof_v ,

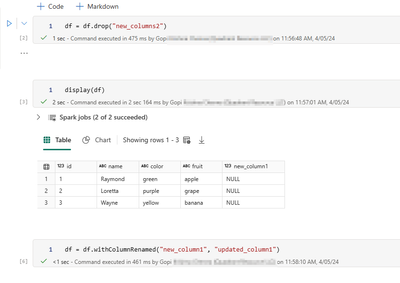

Why don't you try using pyspark features -

df = df.drop("new_columns2")

df = df.withColumnRenamed("new_column1", "updated_column1")

display(df)

Docs to refer -

pyspark.sql.DataFrame.drop — PySpark master documentation (apache.org)

Instead of deleting and creating newlakehouse table.

Please let me know if this works or still creates any issues or limitations.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous thank you!

Is this a good approach (see below)?

(I am also thinking about performance on large tables)

Are there any better approaches?

Drop column:

spark.read.table("tableName")\

.drop("columName")\

.write\

.mode("overwrite")\

.option("overwriteSchema", "true")\

.saveAsTable("tableName")

Change column name:

spark.read.table("tableName")\

.withColumnRenamed("oldColumnName", "newColumnName")\

.write\

.mode("overwrite")\

.option("overwriteSchema", "true")\

.saveAsTable("tableName")

Change column type:

spark.read.table("tableName")\

.withColumn("columnName", col("columnName").cast("columnType"))\

.write\

.mode("overwrite")\

.option("overwriteSchema", "true")\

.saveAsTable("tableName")

Add column (see also my next comment in this thread):

spark.read.table("tableName")\

.withColumn("columnName", lit(None).cast("columnType"))\

.write\

.mode("overwrite")\

.option("overwriteSchema", "true")\

.saveAsTable("tableName")

I am new to PySpark, but this code seems to work for the Lakehouse tables, and the tables also seem to continue working with the SQL Analytics Endpoint and Direct Lake Semantic Model 😀

(although dropping columns, renaming columns or changing column types may of course cause calculations or relationships in the semantic model to break, but that is only if the altered column is directly involved in the calculation or relationship).

I think this might work 😀

In addition to your reply, I was inspired by this article: https://learn.microsoft.com/en-us/azure/databricks/delta/update-schema#--explicitly-update-schema-to-change-column-type-or-name

However I am interested to learn more about the performance implications of this method (is this method basically overwriting all the data in the table)?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- I think for adding columns, this code might be more efficient because it doesn't require overwriting the table data?

%%sql

ALTER TABLE tableName

ADD COLUMN columnName columnType;

- For adding columns, it seems also mergeSchema might be an option:

https://delta.io/blog/2023-02-08-delta-lake-schema-evolution/ - When using Dataflow Gen2 and replace method, Dynamic schema seems to be an option for adding, removing, renaming or changing type of columns:

https://community.fabric.microsoft.com/t5/Dataflows/Dataflow-Gen2-Dynamic-schema/m-p/3835653#M2683 - Unfortunately, it seems that

%%sql ALTER TABLE tableName DROP COLUMN columnName;

and

%%sql ALTER TABLE tableName RENAME COLUMN oldName TO newName;

requires enabling column mapping, and if I enable column mapping then I get some breaking consequences: https://community.fabric.microsoft.com/t5/General-Discussion/SQL-ALTER-command/m-p/3800910/highlight...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you @Anonymous,

I want to use the data in a Direct Lake Semantic Model for Power BI reporting.

To be able to use the data in a Direct Lake Semantic Model, the data needs to be in Lakehouse tables as far as I know.

So I am looking for a method to update table schema of a Lakehouse table, which will not break this table's functionality, relationships etc. in the SQL Analytics Endpoint and Direct Lake Semantic Model.

When working with import mode power bi semantic models in Power BI desktop, it is normal to be able to rename columns or remove columns from tables in the semantic model, without having to delete the table and create a new table. I am looking for the same functionality to remove or rename columns with direct lake semantic model.

For now, only adding columns seems to work.

Thank you 😀

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @frithjof_v ,

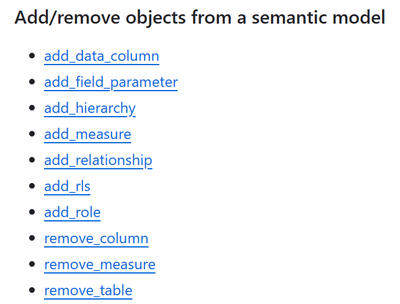

See the fabric_cat_tools library here. It has functions for adding data columns (to the semantic model) and removing columns as well.

GitHub - m-kovalsky/fabric_cat_tools: Documentation for the fabric_cat_tools library

Hope this is helpful. Please let me know incase if you have further queries.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @frithjof_v ,

We haven’t heard from you on the last response and was just checking back to see if your query was answered.

Otherwise, will respond back with the more details and we will try to help .

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @frithjof_v ,

We haven’t heard from you on the last response and was just checking back to see if your query was answered.

Otherwise, will respond back with the more details and we will try to help .

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

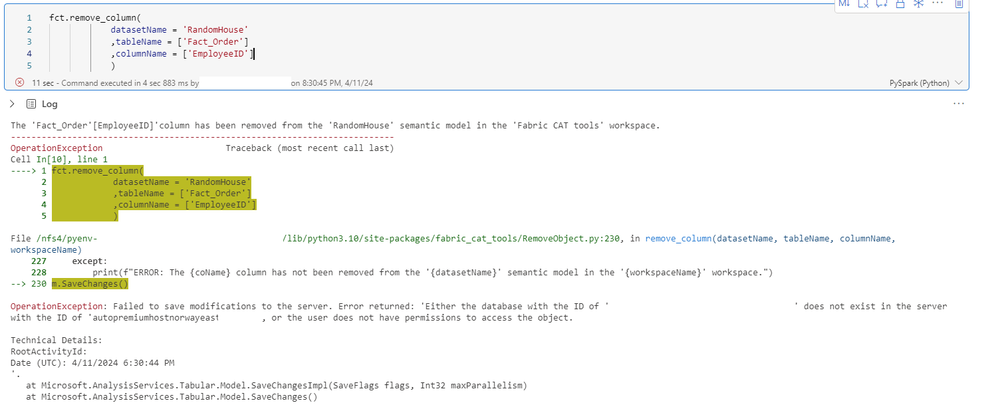

Thank you @Anonymous,

I tried the fabric_cat_tools and I enjoyed testing these functions. It's really interesting and it obviously has many use cases. Thank you for pointing me towards this.

However it didn't quite solve my wish for removing columns, with my objective being to work with Lakehouse and Direct Lake Semantic Model in a coherent way.

Here are my findings:

I tried using the remove_column function, and it does remove the column from the custom direct lake semantic model, however it doesn't delete it from the Lakehouse.

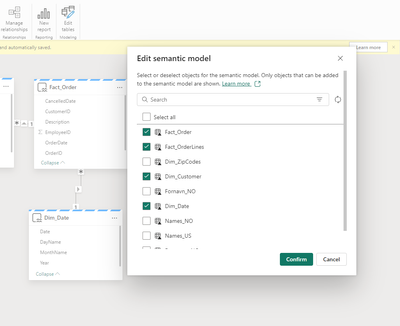

Also, if I refresh the objects of the semantic model (see screenshot below), then the removed column reappears.

Furthermore, the remove_column function doesn't seem to work with the Lakehouse's default semantic model. I got this error message:

I would prefer a solution for removing columns in the Lakehouse, which also works together with the SQL Analytics Endpoint and Direct Lake Semantic Model.

E.g. %%sql ALTER TABLE tableName DROP COLUMN columnName;

or a user interface way of dropping columns from a Lakehouse table.

That will be a holistic solution in my opinion, for working with Lakehouse in conjunction with Direct Lake Semantic Model.

I created an idea here, please vote if anyone else needs this: https://ideas.fabric.microsoft.com/ideas/idea/?ideaid=746601c5-6ba9-ee11-92bd-6045bdb0416e

Thank you 😀

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @frithjof_v ,

Thanks for using Fabric Community.You're correct that dropping and recreating a Lakehouse table can be a one of the solution for renaming or removing columns, but it's important to be aware of the consequences, especially in the context of MS Fabric. Here's a breakdown of the impacts:

Consequences of Dropping and Recreating a Lakehouse Table:

- Relationships in Direct Lake Semantic Model: Yes, dropping the table will break any existing relationships in your Direct Lake semantic model. This is because the model relies on the table schema, and changing the schema (even with the same name) breaks the connection. You'll need to re-establish the relationships after recreating the table.

- OneLake Shortcuts: Similar to semantic models, OneLake shortcuts will also be broken after dropping the table. The shortcut points to a specific schema, and recreating the table with a different schema will render the shortcut unusable. You'll need to recreate the shortcut pointing to the new table.

Alternative Approaches:

While dropping and recreating might seem like a straightforward solution, it can be disruptive. Here are some alternative approaches to consider:

- Alter Table: Depending on your specific needs, you might be able to use the ALTER TABLE statement to modify the existing table schema. This could involve renaming columns, adding new columns, or dropping existing ones (with some limitations). This approach avoids breaking relationships and shortcuts.

Doc to refer - ALTER TABLE - Spark 3.0.0-preview Documentation (apache.org)

We can use Alter commands in Spark SQL inorder to change the schema of table present in Lakehouse.

Remember: Before making any changes, it's crucial to back up your data and thoroughly test your approach in a non-production environment.

Hope this is helpful. Please let me know incase of further queries.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I did some testing and here are my observations so far:

I created a Lakehouse A and created some tables in Lakehouse A by using Dataflows Gen2. I also created another Lakehouse B, and in Lakehouse B I created shortcuts to all the tables in Lakehouse A.

I deleted a table from Lakehouse A by clicking the ellipsis for the table and then clicking Delete. The table disappeared in Lakehouse A, and the shortcut table also disappeared in Lakehouse B.

Then I recreated the table in Lakehouse A by using Dataflow Gen2. The new table had a slightly changed schema: I had removed some columns and I had added some new columns compared to the original table. The table name was identical.

I observed that the shortcut table had reappeared in Lakehouse B. So in my case the shortcut in Lakehouse B is still working, after I had dropped and recreated the target table in Lakehouse A with a slightly altered schema.

Regarding the semantic model:

After dropping and recreating the table, the table was not in the default direct lake semantic model anymore. I needed to manually add the table to the default direct lake semantic model again after I had recreated the table.

For the custom direct lake semantic model, the table was actually still in the semantic model after dropping and recreating the table.

However the table now had an orange warning triangle, and I had to refresh the semantic model objects in order for the orange warning triangle to disappear.

Is this the expected behaviour when dropping and recreating a lakehouse table with an altered table schema (added/removed columns)?

Helpful resources

Join us at the Microsoft Fabric Community Conference

March 31 - April 2, 2025, in Las Vegas, Nevada. Use code MSCUST for a $150 discount!

Microsoft Fabric Community Conference 2025

Arun Ulag shares exciting details about the Microsoft Fabric Conference 2025, which will be held in Las Vegas, NV.

| User | Count |

|---|---|

| 7 | |

| 6 | |

| 5 | |

| 2 | |

| 1 |

| User | Count |

|---|---|

| 15 | |

| 10 | |

| 5 | |

| 4 | |

| 4 |