Dataflow Gen 2 - SharePoint List - Target

Hi,

I am trying to copy a SharePoint list to OneLake through a Dataflow Gen2 (I would eventually like to expose it to Power BI and Copilot alongside other data lake tables).

I have the following issues with the target:

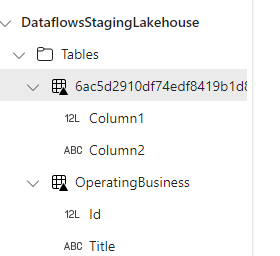

- On the first run, the target gets a file name rather than the table name I specified.

- The columns in the target get generic names (Column 1, Column 2, ...) even though they are named in the flow.

- Rerunning the flow ignores the previous target and always generates a new one, even if I change it to point to the existing file/table.

Is there anything I am missing in the flow configuration?

Thanks

Based on the screenshots, it appears that what you're looking at is what we call a Staging Lakehouse, which is not meant to be accessed by end users. It is a system-level artifact that Dataflows use to help load your data into the Lakehouse in your workspace.

We do not recommend interacting with staging artifacts. In the future we will hide them and prevent users from changing them, since they are only meant to be used by the system.

You should be able to see a different Lakehouse: the one you initially created, which cannot be either of the Staging ones. It should also show that you are its owner. Can you confirm that you can see such a Lakehouse?

Also, please let us know whether you can share the screenshots I asked for in my previous reply, taken from the Dataflows experience: the schema of your query (just a screenshot of what the columns look like) and the "mapping columns" dialog in the output destination settings, which also shows the name of what should be the new table.

Hi!

Could you please share a screenshot of what the table schema looks like for the query that has the output destination defined, and another screenshot of the column mapping to the destination?

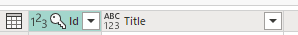

Is this what you are looking for?

Yes - thanks. I'll take a look to see what's going on.

I haven't been able to reproduce this issue. One note: some post-processing is done on the Lakehouse after the dataflow refresh completes. It's possible that this processing is taking longer than usual in your workspace and is causing the inconsistencies you are seeing. However, I don't think that would be the root cause. I'd recommend opening a support ticket so we can investigate the specifics of what you are seeing.
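The practical consequence of the post-processing note is to re-check the Lakehouse after a bounded wait rather than immediately after the refresh finishes. A minimal Python sketch of that wait-and-recheck pattern follows. It assumes you supply your own `check` callable (hypothetical; for example, one that lists the tables in your Lakehouse and looks for your table name); the 15-minute timeout and 30-second interval are arbitrary defaults, not documented Fabric values.

```python
import time
from typing import Callable


def wait_for_condition(
    check: Callable[[], bool],
    timeout_s: float = 900.0,   # arbitrary: 15 minutes
    interval_s: float = 30.0,   # arbitrary: re-check every 30 seconds
) -> bool:
    """Poll check() until it returns True or timeout_s elapses.

    Returns True if the condition was observed, False on timeout.
    `check` is a caller-supplied (hypothetical) probe, e.g. "does my table exist yet?".
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if check():
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        # Never sleep past the deadline.
        time.sleep(min(interval_s, remaining))
```

Bounding the wait keeps the check finite: if it returns False, slow post-processing becomes a less likely explanation and a support ticket is the next step.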

Thanks. I'll try it again and wait a few minutes for post-processing. I'll let you know.

After the initial run, this is what is in the Lakehouse.

After a minute or so, I have both the file and the table.

I waited 15 minutes: same thing.

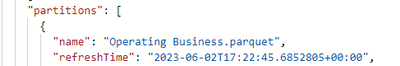

I checked the refresh time in the model file and got "2023-06-02T17:07:17.5296454+00:00".

I then triggered a refresh of the dataflow.

It was successful.

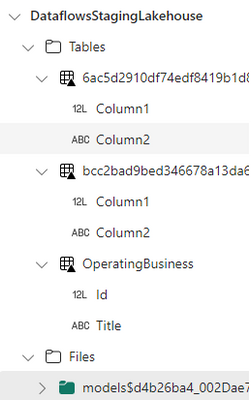

Right after, I have 3 tables (files).

The model file now has 2 JSON entries in it, the second one with the following refresh time.
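One way to check whether a newer refresh was recorded is to compare the two refresh timestamps from the model file. A minimal Python sketch follows. It assumes you have already copied the two timestamp strings out of the file (the model file's JSON layout is not shown in this thread, so the sketch does not try to read it), and the second value in the usage lines is hypothetical. The values carry 7 fractional-second digits, one more than Python's `datetime` stores, so the sketch truncates to microseconds before parsing.

```python
import re
from datetime import datetime

# ISO 8601 with a numeric UTC offset, e.g. 2023-06-02T17:07:17.5296454+00:00.
_STAMP = re.compile(
    r"(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(\d+))?([+-]\d{2}:\d{2})"
)


def parse_refresh_time(stamp: str) -> datetime:
    """Parse a refresh timestamp into a timezone-aware datetime.

    The source value has 7 fractional-second digits (100 ns resolution),
    but datetime stores microseconds, so the fraction is truncated to 6 digits.
    """
    match = _STAMP.fullmatch(stamp)
    if match is None:
        raise ValueError(f"unexpected timestamp format: {stamp!r}")
    base, fraction, offset = match.groups()
    micros = (fraction or "").ljust(6, "0")[:6]
    return datetime.strptime(f"{base}.{micros}{offset}", "%Y-%m-%dT%H:%M:%S.%f%z")


def was_refreshed(previous: str, latest: str) -> bool:
    """True if `latest` is strictly later than `previous`."""
    return parse_refresh_time(latest) > parse_refresh_time(previous)


if __name__ == "__main__":
    first = "2023-06-02T17:07:17.5296454+00:00"   # value from the model file above
    second = "2023-06-02T17:30:00.0000000+00:00"  # hypothetical later value
    print(was_refreshed(first, second))  # True
```

A later timestamp only shows that a newer refresh was recorded in that file; whether your destination table was updated still has to be checked in the Lakehouse that holds it.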

Is this the expected behavior, and does it mean that my table/file has been updated?

Does the system keep the other files as a backup, or are they removed after a while?

Thanks

Yes, @miguel is correct. The tables named with GUIDs are the results of dataflow staging in the Staging Lakehouse, not the output destination you defined.
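If you need to tell the two kinds of tables apart when scripting against a Lakehouse listing, the reply above suggests a simple heuristic: staging tables have GUID names, while your output destination tables keep the names you chose. A minimal Python sketch follows. It assumes the names come from your own listing (hypothetical) and that staging names are plain GUID strings; the exact staging naming scheme is not documented in this thread.

```python
import uuid
from typing import Iterable, List, Tuple


def looks_like_staging_table(name: str) -> bool:
    """Heuristic: a table whose whole name parses as a GUID is treated as staging."""
    try:
        uuid.UUID(name)
    except ValueError:
        return False
    return True


def split_tables(names: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split table names into (GUID-named staging tables, user-named tables)."""
    staging: List[str] = []
    user: List[str] = []
    for name in names:
        (staging if looks_like_staging_table(name) else user).append(name)
    return staging, user
```

This is only a heuristic: a user table whose name happens to be 32 hexadecimal characters would be misclassified as staging.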

Hi,

Thanks for the feedback. FYI, I deleted everything and did the following:

- Created a new Lakehouse

- Created a new Dataflow Gen2

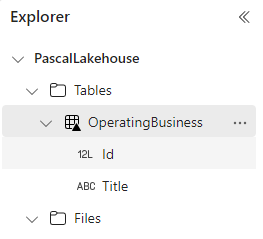

As for the schema, it's really simple: I am reading a SharePoint list and loading 2 columns for now, ID and Title. I used the detect type option and set ID as the key.

The target is now my Lakehouse.

And now I see the table in my Lakehouse.

If I refresh it now, the refresh is successful.

One suggestion for your system: would it be possible to add the last refresh date and time to the Properties menu option in the Explorer?

Your answers were really useful.

Pascal

Glad it was helpful.

I like the idea of the Last Refresh time. @miguel, do you know which forum on the Ideas site (microsoft.com) is used for Lakehouse?

-John

Hey!

From the products dropdown you can choose the "Synapse" option for ideas around Data Warehouse, Notebooks and Lakehouse.

Can you share a screenshot of the Output Destination configuration?
