Problems running DataFlow in Fabric

Problems running Dataflows within Fabric.

I'm trying to follow this tutorial step by step:

https://learn.microsoft.com/en-us/power-bi/fundamentals/fabric-get-started

I was able to create this Dataflow and a lakehouse.

I'm having issues when refreshing the Dataflow from My Workspace.

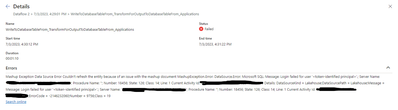

null Error: Data source credentials are missing or invalid. Please update the connection credentials in settings, and try again..

When I am in my Dataflow and connect with my organisational account, it works, but when I go back a few moments later the connection is gone again. Any ideas or tips?

Also: when creating a Data Pipeline, I'm getting an error: Failed to load dataflows. Request failed with status code 401.

Thanks!


We believe this is an issue specific to My Workspace which occurs in either of the following cases:

1) A query that references another query which has Load enabled

2) A query that outputs to a destination and also has Load enabled

We are investigating the issue and will release a fix as quickly as feasible.

In the interim we believe it should be possible to temporarily work around these issues by using a workspace other than My Workspace.
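To make the two cases above concrete, here is a minimal Python sketch that flags queries matching either one. The `Query` class, its fields, and `risky_queries` are hypothetical names invented for illustration; they are not part of any Fabric or Power Query API, and you would have to fill in the query list by hand from the Dataflow editor.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Query:
    """Hypothetical, hand-written description of one Dataflow query."""
    name: str
    load_enabled: bool = False      # "Enable load" is ticked for this query
    has_destination: bool = False   # query writes to a data destination
    references: List[str] = field(default_factory=list)  # names of queries it references


def risky_queries(queries: List[Query]) -> Dict[str, List[str]]:
    """Return {query name: reasons} for queries matching either reported case."""
    by_name = {q.name: q for q in queries}
    flagged: Dict[str, List[str]] = {}
    for q in queries:
        reasons = []
        # Case 1: references another query which has Load enabled
        if any(by_name[r].load_enabled for r in q.references if r in by_name):
            reasons.append("references a query with Load enabled")
        # Case 2: outputs to a destination and also has Load enabled
        if q.has_destination and q.load_enabled:
            reasons.append("has a destination and Load enabled")
        if reasons:
            flagged[q.name] = reasons
    return flagged
```

For example, a query `Sales` that references a load-enabled `Staging` query would be flagged under case 1, while a standalone query with no destination would not be flagged at all.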

Thanks.


The issues specific to "My Workspace" are now resolved. For on-premises scenarios, you need the latest version of the Gateway (3000.174.13, published on June 2nd).
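If you want to check an installed gateway against that minimum, a small Python sketch follows. It assumes you have already read the installed version string yourself (for example from the gateway app); `parse_version` and `gateway_is_current` are hypothetical helper names, not part of any gateway tooling.

```python
MIN_GATEWAY_VERSION = "3000.174.13"  # minimum version named in the post above


def parse_version(version: str) -> tuple:
    """Turn a dotted version string like '3000.174.13' into a tuple of ints."""
    return tuple(int(part) for part in version.strip().split("."))


def gateway_is_current(installed: str, minimum: str = MIN_GATEWAY_VERSION) -> bool:
    """True if the installed version is at least the minimum version."""
    # Compare numerically, part by part; comparing the raw strings would
    # wrongly rank "3000.99.1" above "3000.174.13".
    return parse_version(installed) >= parse_version(minimum)
```

Calling `gateway_is_current("3000.170.10")` (a made-up older version) returns `False`, which would mean the gateway needs updating before retrying the refresh.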

Thanks.


Hi SidJay,

Thank you for your help.

I've updated the gateway as requested.

I'll keep you posted should the issue return.

KR.


I have exactly the same issue, but in a workspace that is not My Workspace, so the suggested workaround does not work. It is the same scenario with the organizational logins, and the failures occur on writing, due to the token issue described. The pull from our Azure DB works just fine. Are you aware that this issue is not limited to My Workspace?


I also get the same error when pulling data from the Lakehouse with the Gen2 dataflow. I used copy to successfully load data into the lakehouse I had just created, then attempted to use the Lakehouse as the source and got the login error.


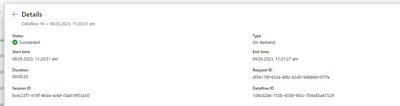

Hello @Dave_De,

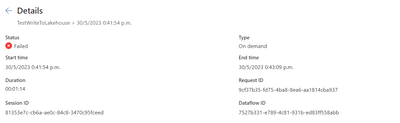

Would it be possible to share a screenshot of the failure you are hitting, including the Request/Session IDs, so we can drill down into the issue?

Thanks,

Frank.


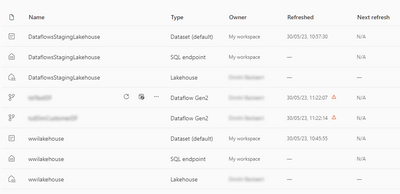

I believe I may have found the issue. If you change the name of the lakehouse, even before you have set it as a destination in the dataflow, the refresh fails; changing it back to the original name lets it go through. Below are the screenshots:


Same problem, once I used a different workspace it worked fine 🙂


Thanks! When I run my test flow in another workspace, it works.


Something additional that I noticed:

It seems that the resources I created are (correctly) owned by my personal account.

But the auto-created ones have an owner called "My Workspace".

Could this cause the authentication issues with the (non-saved) credentials?


Hello @FilipVDR,

Thanks for reporting the issue. Would you be able to share additional information for both cases mentioned above:

- Are you using a gateway to load data to the lakehouse destination?

- Would you be able to share the RequestID/SessionId from the refresh operations in both cases? This will help with troubleshooting. You can find this information in the refresh history page:

Thanks,

Frank.


Hi all,

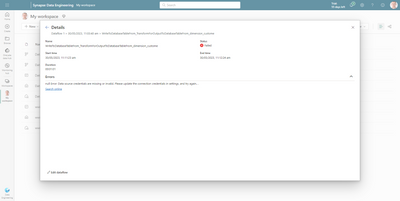

Same issue here. It seems that the credentials used to set up the data connection are not being saved.


Yes, true: when I go back to the dataflow and look at the connection used to write to the data lake, I have to sign in again every time.


Hi Frank,

- No. Even if I create a dataflow and just enter raw data (one row, one column), it fails.

-


It seems I get a similar error: even when I create a new dataflow, enter data with one column and one row, and try to write it to the lakehouse, I get an error:

It seems that the dataflow can't save my connection using my Organizational Account.
