New Offer! Become a Certified Fabric Data Engineer

Check your eligibility for this 50% exam voucher offer and join us for free live learning sessions to get prepared for Exam DP-700.

Get StartedDon't miss out! 2025 Microsoft Fabric Community Conference, March 31 - April 2, Las Vegas, Nevada. Use code MSCUST for a $150 discount. Prices go up February 11th. Register now.

- Data Factory forums

- Forums

- Get Help with Data Factory

- Dataflow

- Re: DataFlow Choose destination settings "Use auto...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

DataFlow Choose destination settings "Use automatic settings" disabled

DataFlow "Choose destination settings" -> "Use automatic settings" is always disabled. I tried multiple times and I have not found any way to change that or the be able to toogle the "automatic settings" on/off. I need to change the "Schema options on publish" and the only way is to have control of all options.

Please advise...

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Please check the documentation available for this:

answering some of the questions that I've seen on this thread:

- Automatic settings are only supported for Lakehouse and Azure SQL database

- Automatic settings can only be applied to new tables

- Dynamic table schema options on publish can only be selected when using the "Replace" update method

As mentioned in some previous replies, there are some experiences that perhaps don't exist today in Dataflow Gen2. For any of those please feel free to post an idea in the Fabric ideas portal (aka.ms/FabricIdeas)

if you can't use the "automatic settings" when trying to create a new table to a Lakehouse or an Azure SQL DB, please feel free to open a support ticket and reply to this message with the case number. I'll elevate it and have the engineering team take a closer look as to what might be causing such bug.

Please do refer to the documentation available that covers the current supported scenarios and limitations.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Please check the documentation available for this:

answering some of the questions that I've seen on this thread:

- Automatic settings are only supported for Lakehouse and Azure SQL database

- Automatic settings can only be applied to new tables

- Dynamic table schema options on publish can only be selected when using the "Replace" update method

As mentioned in some previous replies, there are some experiences that perhaps don't exist today in Dataflow Gen2. For any of those please feel free to post an idea in the Fabric ideas portal (aka.ms/FabricIdeas)

if you can't use the "automatic settings" when trying to create a new table to a Lakehouse or an Azure SQL DB, please feel free to open a support ticket and reply to this message with the case number. I'll elevate it and have the engineering team take a closer look as to what might be causing such bug.

Please do refer to the documentation available that covers the current supported scenarios and limitations.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @esobrino ,

Glad to know that your query got resolved. Please continue using Fabric Community on your further queries.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

On your last reply you stated that you did tested the functionality successfully layout the steps on how you did so.

From your narrative, it is suggested that "Workflow Gen2" and while choosing the destination target the table should be an "Existing table" and also stated that you could see the database schemas (such as "dbo", and others you may have created).

I have done this exersice multiple times and in the "Choose destination target" no database schemas are shown and therefore none could be selected as a destination.

Furtheremore, I also tried to prefix the schema to the table name as you will normally do such as "[Accounting].[Ledger]" in were [Accounting] is the schema and "Ledger" is the table. But it does not works since it creates a table named "[[Accounting].[Ledger]]" and that is not what is intended.

Maybe the option is to find a way to see the underlying script and with kind of an advance option edit it to specify "Accounting" instead of "dbo", as I could do in the source script.

Anyways, this is not solved yet... as possible scale-this-up to find a way to write to a database schema different from "dbo".

While waiting for the above, I may create the table in a target schema before creating the workflow and then append the data to it which is not the ideal choice since is more work (an additional step), I rather be allowed to identify the schema on the copy.

I can't accept your suggestion as a solution since I can't replicate it and it does behave different from your narrative. Topic is still open...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

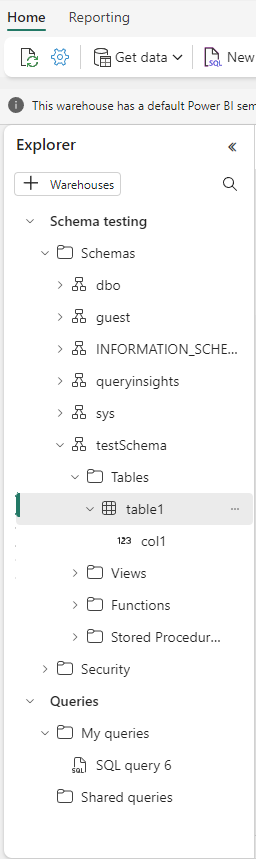

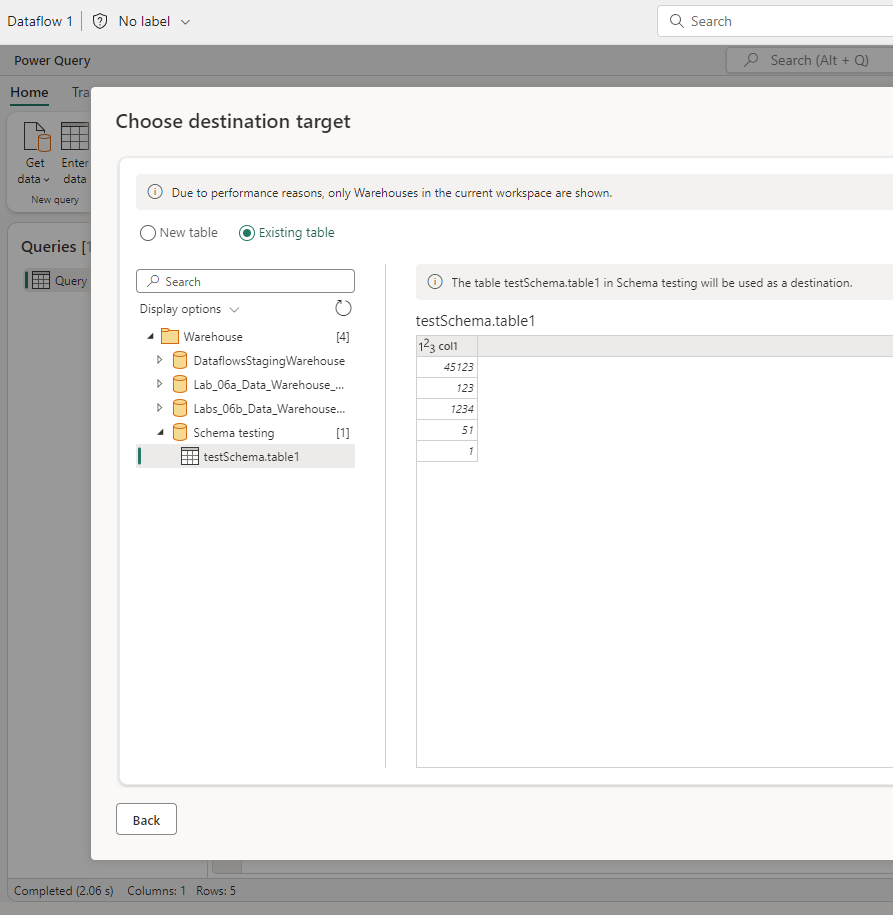

First, I created the schema "testSchema" and the table "table1" in my Data Warehouse:

In my Dataflow Gen2, I can find the table:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

frithjof,

Presicely the point! The table needs to be first created in the schema, and this is not the scenario that I am describing or described... There should be a way to assigned the target schema not forcing the creation of the table before hand.

My vote is to have the as described above and not force the pre-creation of the tables to be targets.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I see, now I understand,

Okay I have created an idea for this, please vote:

https://ideas.fabric.microsoft.com/ideas/idea/?ideaid=452b78e0-0300-ef11-a73c-6045bdbe85bd

Here is also another thread about the same topic:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Frithjof,

Got your follow-up email. If the "Dynamic Schema" is related to the table definition and not to database "Schemas" then what I am reporting is an issue since there should be a way to copy the data into a destination schema different from "dbo".

As possible, can you help scalating this issue or let me know were is the best place to follow-up with this issue?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I tested it, and it seems it is actually possible in Dataflows Gen2 to write to another database schema instead of "dbo".

Here's what I did:

1. Create a new schema in my Data Warehouse.

2. Create a table in the new schema, inside the Data Warehouse experience.

3. In the Dataflows Gen2, in the destination settings choose "Existing table" and then I could find my schema and table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Frithjof,

I got your reply by email, thanks for the prompt attention to this issue/topic!

The link you provided is the one I am referring in by previous replay (Message 3), and it is there that states that "Use of automatic settings" needs to be switched off to have access to the "Dynamic Schema" options.

I will leave this topic active and see if we can get other contributions that will help to clarify how to enable the "Dyname Schema" options...

Have a great day...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi!

I did confirmed that Schemas are only available in Data Warehouses not data lakes and therefore the "Schema options on publish" is only available under the Warehouse experience. I created a Data Warehouse within the "Data Engineer" experience and went througt the exercise with the same results, so I guess this confirms that have nothing to do the experience. The "Use automatic settings" is not enabled under any of the tested scenarios. In summary the questions are:

1- How the "Use automatic settings" switch (on/off) gets enabled? (see "Choose destination settings" while defining a Dataflow).

2- In the advance settings I have the option to edit the source selection script, but not for the destination, therefore... how can I have access to the script that define the destination?

Thats it, once I am able to disable the automatic settings I will have full control of the "Schema options" and select the destination.

I hope that I can move up with this roadblock since its escential to be able to create schemas and put data in those as needed, everthing should not go to "dbo", actually "dbo" is at times a dumpster and people just add the groupings as a first word in the table name, making a lot harder to manage group security.

Can you help?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Greatly appreciate the feedback. Does it not look inconsistent to you? The Schemas are created within a Data Warehouse (or at least that is how I did it), the workflow destination is a Table within a Data Warehouse. The functionality should be in the place that I was created, I will try to do some work under the Lakehouse experience and see how that goes.

It's confusing... Your thoughts...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I must admit I haven't worked so much with Data Warehouse.

I have mainly just worked with Lakehouse.

Here is some information which I guess may be relevant:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @esobrino,

I'm not sure I entirely understand what you want to achieve.

But if you want to use the Dynamic schema feature, then I think it only works with lakehouse (not warehouse).

Also, in the Destination settings, I think you need to choose New table, even if you are wanting to update the schema of an Existing table. Just use the same table name.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great to have feedback!

A database have a collection of schemas (and in addition I created my own), the default schema is "dbo". When preparing a workflow, while defining/choosing the "Destination settings", to get-data there are 2 "Schema options on publish":

1- Dynamic Schema (default: disabled).

2- Fixed Schema (default: enabled).

Those schema options are shown on the "Choose destination settings" but by default its "Fixed Schema" implying that the data will be copied into a table in the "dbo" schema. Still, the data should be copied into a different schema different from "dbo". According with the documentation to do this you need to switch the "Use automatic settings" "Off" and the "Dynamic Schema" option will then be enabled and allow you to select a destination schema. As far as I can see schemas are only relevant to "Data Warehouses" and therefore I don't see how can those be relevant to Lake Houses. Anyways, I created the schema in a Data Warehouse, I should be able to copy data to any created/available schema as long as I have rights to do so in the schema (I am the admin, so I do have those rights).

Your thoughts...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I see... It's different kind of schemas.

The "Dynamic schema" functionality is referring to the table's internal schema (columns, column names, column types).

It's not related to the database schema. Currently I don't think you can change database schema with dataflows gen2. I think you can only write to "dbo" schema. I may be wrong here, but I think I recall reading that somewhere.

Helpful resources

| User | Count |

|---|---|

| 3 | |

| 1 | |

| 1 | |

| 1 | |

| 1 |