End to End data pipeline across workspaces

Hi All,

Below is my planned architecture. It consists of two workspaces, kept separate for security reasons (simplified from https://learn.microsoft.com/en-us/fabric/onelake/get-started-security).

- A Division workspace contains a Bronze Lakehouse as the landing zone and a Silver Lakehouse as the conformed zone.

- A Report Project workspace contains a Gold Lakehouse as the curated zone for Power BI report access.

Now I want to create a data pipeline that extracts data from the source system, then loads and transforms it into the lakehouses (Bronze, Silver and Gold).

My first approach is to use a single data pipeline (e.g. located in the Report Project workspace) to ELT the data end to end.

It contains multiple tasks:

Source to Landing (S2L): Copy activity that copies data from the source system to the landing zone

- Azure SQL Managed Instance connector for the source

- ADLS Gen2 connector for the destination (the Lakehouse connector won't work since the lakehouse is in another workspace)

Landing to Conformed (L2C): Copy activity that copies data from the landing zone to the conformed zone (with a union operation to combine data from multiple delta tables)

- ADLS Gen2 connector for the source (the Lakehouse connector won't work since the lakehouse is in another workspace)

- ADLS Gen2 connector for the destination (the Lakehouse connector won't work since the lakehouse is in another workspace)

Conformed to Curated (C2C): Dataflow Gen2 to apply business logic

- Shortcuts are created in the Gold lakehouse to reference the delta tables in the Silver lakehouse

- Dataflow Gen2 applies the business logic as transformations

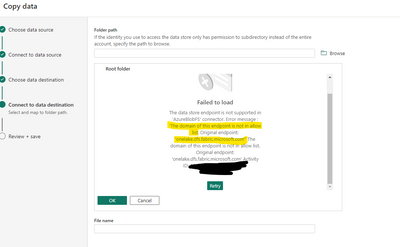

Unfortunately, the ADLS Gen2 connector failed to access OneLake data. Although the documentation says that "Microsoft OneLake provides open access to all of your Fabric items through existing ADLS Gen2 APIs and SDKs" (e.g. https://learn.microsoft.com/en-us/fabric/onelake/onelake-access-api), this doesn't work with the ADLS Gen2 connector. I would be grateful for any advice on making this approach work. (I think it may work with a notebook, but I prefer copy activities here; for example, I don't even know how to read SQL Managed Instance data from a notebook script.)
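For anyone who wants to try the notebook route mentioned above: the "open access through ADLS Gen2 APIs and SDKs" statement describes SDK/API clients pointed at the OneLake endpoint, which is a separate question from whether the pipeline's ADLS Gen2 connector accepts that endpoint. Below is a minimal Python sketch, assuming the `azure-identity` and `azure-storage-file-datalake` packages are installed and the calling identity has access to the workspace. The helper names and the workspace/lakehouse names are hypothetical, and name-based paths are assumed to contain no special characters.

```python
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_abfss_uri(workspace: str, lakehouse: str, path: str) -> str:
    """Build an abfss:// URI for a path inside a lakehouse, addressed by name."""
    return f"abfss://{workspace}@{ONELAKE_HOST}/{lakehouse}.Lakehouse/{path.lstrip('/')}"


def list_onelake_paths(workspace: str, lakehouse: str, path: str) -> list[str]:
    """List entries under a lakehouse path through the ADLS Gen2 SDK.

    Needs network access and a credential that DefaultAzureCredential can resolve.
    """
    # Imported lazily so the URI helper above works without the Azure packages.
    from azure.identity import DefaultAzureCredential
    from azure.storage.filedatalake import DataLakeServiceClient

    service = DataLakeServiceClient(
        f"https://{ONELAKE_HOST}", credential=DefaultAzureCredential()
    )
    # In OneLake the workspace takes the place of the ADLS file system (container).
    file_system = service.get_file_system_client(workspace)
    prefix = f"{lakehouse}.Lakehouse/{path.lstrip('/')}"
    return [entry.name for entry in file_system.get_paths(path=prefix)]
```

For example, `onelake_abfss_uri("Division", "Silver", "Tables/orders")` produces a path that a notebook in another workspace should be able to read, provided its identity has permission. This does not make the copy activity connector work; it only illustrates the API-level access the documentation describes.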

My alternative approach is to create two pipelines:

- A pipeline in the Division workspace implements S2L and L2C using copy activities with the Azure SQL Managed Instance connector and the Lakehouse connector

- A pipeline in the Report Project workspace implements C2C using Dataflow Gen2

It works. However, I can't chain the two pipelines together:

- I can't invoke/trigger the second pipeline from the first pipeline (since they are in different workspaces)

- I can't trigger the second pipeline through an event trigger (not yet supported)

- I can schedule the two pipelines so that the second starts a few minutes after the first. However, it is hard to determine the delay. In my use case I need low latency, but too short a delay is even worse, because the second pipeline would read outdated or partially updated data from the conformed zone.

- Building on the "schedule with delay" idea, I can add a polling loop to the second pipeline (e.g. loop to poll a status table written by pipeline 1, with a wait between polls). I think it can work, but I don't want to spend compute resources on such polling.

If the first approach (a single pipeline working across workspaces) won't work, could you give me some advice on approach 2 (chaining pipelines in different workspaces)?

Is there still no solution to this?

This is ridiculous. This oversight was reported over eight months ago and still hasn't been addressed. It's like reporting feature issues to the SSIS/SSRS program managers all over again.

Lookup Activity Refencing file in different worksp... - Microsoft Fabric Community

Seriously... what is going on here? It's not even on the release plan, which runs through the rest of the year. It would be great if we could have an updated ETA. This is a huge functionality gap for a GA product.

There's a workaround now that semantic-link has gone GA. With the workspaceId and pipelineId known, the Microsoft Fabric REST API can be used to invoke a data pipeline in a different workspace. However, this only creates the job scheduler instance of the pipeline. I've not figured out how to wait until the execution completes before returning, as I can't get the freaking instanceId that should be returned from the call. Documentation on the Fabric API is poor.

```python
import sempy.fabric as fabric

workspaceId = "<your workspace id>"
pipelineId = "<your pipeline id>"

client = fabric.FabricRestClient()
response = client.post(f"/v1/workspaces/{workspaceId}/items/{pipelineId}/jobs/instances?jobType=Pipeline")
```

Figured out how to get the instanceId from the Job Scheduler post back a while ago but forgot to put the code up here. Should anybody need to execute a pipeline in a different workspace synchronously, here's the code that works for me. Suggestions on how to improve this greatly welcome.

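```python
import time

import sempy.fabric as fabric

workspaceId = "{ workspaceId }"
pipelineId = "{ pipelineId }"
# Assumed value: the original post used p_timeout_in_sec without showing where it is set
# (most likely a notebook parameter).
p_timeout_in_sec = 3600
jobStatus = "NotStarted"
elapsedTime = 0

client = fabric.FabricRestClient()

# Start the pipeline through the Job Scheduler API.
response = client.post(f"/v1/workspaces/{workspaceId}/items/{pipelineId}/jobs/instances?jobType=Pipeline")

if response.status_code == 202:
    # The Location header ends with the 36-character job instance id.
    instanceId = response.headers["Location"][-36:]

    # Poll every 10 seconds until the job finishes or the timeout elapses.
    while jobStatus not in ("Completed", "Cancelled", "Failed") and elapsedTime < p_timeout_in_sec:
        time.sleep(10)
        statusResponse = client.get(f"/v1/workspaces/{workspaceId}/items/{pipelineId}/jobs/instances/{instanceId}")
        jobStatus = statusResponse.json()["status"]
        elapsedTime += 10

# mssparkutils is predefined in Fabric notebooks.
mssparkutils.notebook.exit(f"Status of Pipeline Execution: {jobStatus}")
```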
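Since suggestions were invited, here is one possible refinement, offered as a sketch rather than a tested replacement. It takes the instance id from the last path segment of the `Location` header instead of a fixed 36-character slice, and moves the polling into a function that reports a timeout explicitly. The function names, the `"TimedOut"` return value, and the injectable `sleep`/`clock` parameters are my own assumptions for illustration; the terminal states are the three used in the post above.

```python
import time
from urllib.parse import urlparse

# Terminal states taken from the loop condition in the post above.
TERMINAL_STATES = {"Completed", "Cancelled", "Failed"}


def instance_id_from_location(location: str) -> str:
    """Return the last path segment of the Location header (the job instance id)."""
    return urlparse(location).path.rstrip("/").rsplit("/", 1)[-1]


def wait_for_job(get_status, timeout_sec=3600, poll_sec=10,
                 sleep=time.sleep, clock=time.monotonic):
    """Call get_status() until it returns a terminal state or the timeout passes.

    Returns the terminal status, or the hypothetical marker "TimedOut".
    """
    deadline = clock() + timeout_sec
    while True:
        status = get_status()
        if status in TERMINAL_STATES:
            return status
        if clock() >= deadline:
            return "TimedOut"
        sleep(poll_sec)


# Usage in a Fabric notebook, with client, workspaceId and pipelineId as in the post above:
#   base = f"/v1/workspaces/{workspaceId}/items/{pipelineId}/jobs/instances"
#   response = client.post(f"{base}?jobType=Pipeline")
#   instance_id = instance_id_from_location(response.headers["Location"])
#   status = wait_for_job(lambda: client.get(f"{base}/{instance_id}").json()["status"])
```

Passing `sleep` and `clock` as parameters is only there so the loop can be exercised without waiting or calling the service; in a notebook the defaults are fine.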

@Abhishek_Narain Is invoking pipelines across workspaces a feature which is planned or will this be a limitation? If planned, any idea of ETA?

Is there an update on this? This seems like a massive oversight and means you can't orchestrate a multi-workspace medallion architecture, as suggested by Microsoft.

Is there an update on this or a way around this limitation please?

This is becoming a showstopper. Wasn't this supposed to be finished in Q4 2023? We must be able to invoke pipelines across workspaces; otherwise, Fabric is not going to work for our company.

@RaymondLaw We are actively working on allowing cross-workspace Lakehouse (LH) references in data pipelines.

Sorry for the delayed response, but we are listening to your feedback and turning it into features. The current ETA is within Q4 CY 2023.

In the meantime, did you try using LH shortcuts in the other workspace? This way you can bring an LH from another workspace in as a shortcut and use pipelines to load into it.
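If you would rather script the shortcut than create it in the UI, the sketch below builds a request for what I understand to be the OneLake shortcuts endpoint of the Fabric REST API. Treat the endpoint path and body shape as assumptions to check against the current API reference; the function name and all ids are hypothetical placeholders.

```python
def onelake_shortcut_request(dest_workspace_id, dest_lakehouse_id, name, dest_path,
                             src_workspace_id, src_lakehouse_id, src_path):
    """Build the (url, body) pair for creating a shortcut that points at another lakehouse.

    Assumed endpoint: POST /v1/workspaces/{workspaceId}/items/{itemId}/shortcuts
    """
    url = f"/v1/workspaces/{dest_workspace_id}/items/{dest_lakehouse_id}/shortcuts"
    body = {
        "path": dest_path,   # folder in the destination lakehouse, e.g. "Tables"
        "name": name,        # name the shortcut will have in that folder
        "target": {
            "oneLake": {
                "workspaceId": src_workspace_id,
                "itemId": src_lakehouse_id,
                "path": src_path,  # e.g. "Tables/orders" in the source lakehouse
            }
        },
    }
    return url, body


# Usage in a Fabric notebook (assumes sempy is available and you have permissions on both sides):
#   import sempy.fabric as fabric
#   url, body = onelake_shortcut_request(gold_ws, gold_lh, "orders", "Tables",
#                                        silver_ws, silver_lh, "Tables/orders")
#   fabric.FabricRestClient().post(url, json=body)
```

The shortcut lives in the workspace that owns the pipeline, so the Lakehouse connector sees it as a local table while the data stays in the other workspace.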

Thread bumping. It's mind-boggling that cross-workspace interaction is not allowed.

@RaymondLaw We are actively working on allowing cross-workspace Lakehouse/DW references within pipelines, which should help your case. We appreciate your patience.

A kind reminder that I am still looking for your suggestion.

Is there still no solution to this?

It has been a month already. A kind reminder that I am still looking for your suggestion.
