Spark SQL Query errors - Case Sensitivity issues

About two weeks ago, our Spark SQL notebooks suddenly started failing because of case sensitivity in table and field names. This always worked fine in Synapse, so I'm not sure whether the Fabric configuration was changed.

I'm getting errors such as `Table or view not found: customers` when I run a Spark SQL query against the customers table.
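One way to check whether the failure really is a case mismatch is to compare the name used in the query with the names the catalog reports. This is a minimal sketch, not an official tool: `diagnose_table_name` is a hypothetical helper, and the commented usage assumes the notebook's built-in `spark` session and the standard PySpark call `spark.catalog.listTables()`, which lists tables in the current database.

```python
def diagnose_table_name(requested, table_names):
    """Classify why `requested` might fail to resolve against `table_names`.

    Returns one of:
      ("exact", [requested])        the name exists with the same casing
      ("case_mismatch", [names...]) only differently-cased names exist
      ("missing", [])               no name matches, even ignoring case
    """
    if requested in table_names:
        return "exact", [requested]
    wanted = requested.casefold()
    # Collect names that match only when case is ignored (e.g. "Customers")
    near = [name for name in table_names if name.casefold() == wanted]
    return ("case_mismatch", near) if near else ("missing", [])


# In a Fabric notebook:
# names = [t.name for t in spark.catalog.listTables()]
# print(diagnose_table_name("customers", names))
```

A `case_mismatch` result suggests the situation the workaround in this thread addresses; `missing` points to a different problem, such as the wrong lakehouse or database being attached.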

Hi @12angrymentiger

Apologies for the issue you have been facing. At this time, we are reaching out to the internal team to get some help on this. We will update you once we hear back from them.

A simple workaround is to run the following line before executing the main code:

```python
# `spark` is the SparkSession that the Fabric notebook provides automatically
spark.conf.set('spark.sql.caseSensitive', False)
```

Hope this helps. Please let me know if you have any further questions. Glad to help.
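If you would rather not change the setting for the whole session, a scoped variant is possible. This is a minimal sketch, not a Fabric API: `case_insensitive_sql` is a hypothetical helper that reads the current value of `spark.sql.caseSensitive` with `spark.conf.get`, sets it to `false` inside a `with` block, and restores the previous value afterwards, even if the block raises.

```python
from contextlib import contextmanager

CASE_KEY = "spark.sql.caseSensitive"


@contextmanager
def case_insensitive_sql(spark):
    """Run a block with case-insensitive Spark SQL, then restore the old value."""
    previous = spark.conf.get(CASE_KEY, "false")  # Spark's default is false
    spark.conf.set(CASE_KEY, "false")
    try:
        yield spark
    finally:
        # Put back whatever value was active before the block
        spark.conf.set(CASE_KEY, previous)


# Usage in a Fabric notebook (`spark` is provided by the notebook):
# with case_insensitive_sql(spark):
#     spark.sql("SELECT * FROM customers").show()
```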

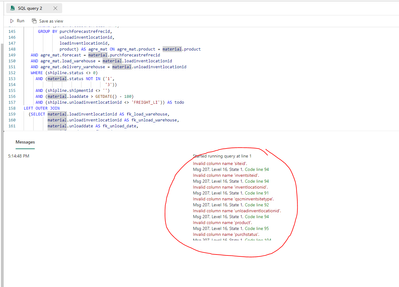

Hi. I'm experiencing a similar issue when running a SQL query through the SQL endpoint against some tables in a lakehouse in Microsoft Fabric. To my knowledge, SQL isn't a case-sensitive language, so I'm not sure why my queries fail on the Fabric endpoint when they run fine in tools such as SSMS.

Can anyone help?

@Anonymous I wanted to check with you regarding this issue.

Please let me know if you have heard of any fixes for this.

Thanks

Hi @12angrymentiger

Thanks for using Fabric Community. Apologies for the issue you have been facing.

Can you please confirm which Spark version you are using?

I have confirmed with the internal team that no changes were made to case-sensitivity handling. Did you set any Spark configurations? If so, can you please share the details?

Thanks.
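Both details asked for here can be read from the running session. A minimal sketch, assuming the notebook's built-in `spark` session: `session_diagnostics` is a hypothetical helper, while `spark.version` and `spark.conf.get` are standard PySpark members.

```python
def session_diagnostics(spark):
    """Return the Spark version and the effective case-sensitivity setting."""
    return {
        "spark_version": spark.version,
        # Falls back to Spark's documented default when the key is unset
        "spark.sql.caseSensitive": spark.conf.get("spark.sql.caseSensitive", "false"),
    }


# In a Fabric notebook:
# print(session_diagnostics(spark))
```

Running this at the top of the notebook and again just before the failing query shows whether something in between changed the setting.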

Hi @12angrymentiger ,

I tried to reproduce the scenario but did not get any error. I have attached a screenshot.

Please check which Spark version you are using.

Make sure you are using Runtime 1.2.

Hope this helps. Please let me know if you have any further queries.

By the way, this is still in My Workspace under the Fabric trial. I did change the workspace to use Runtime 1.2, but I'm still getting the `Table or view not found` error.

We figured out that when you call another notebook and then use Spark SQL further down in the main notebook, `spark.sql.caseSensitive` is `True`.

We moved the following line so that it runs after the second notebook call, which makes Spark SQL in the main notebook case-insensitive:

```python
spark.conf.set('spark.sql.caseSensitive', False)
```
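If the other notebook is invoked through a Python function rather than a `%run` cell, the reset can be wrapped so it is never forgotten. This is a sketch under assumptions: `run_child_then_reset` is a hypothetical helper, and `mssparkutils.notebook.run` with the placeholder notebook name `ChildNotebook` is only one example of a callable you might pass. With `%run`, which is a cell magic, keep the `spark.conf.set` line in a cell after the call instead.

```python
CASE_KEY = "spark.sql.caseSensitive"


def run_child_then_reset(spark, run_child, *args, **kwargs):
    """Call another notebook, then force case-insensitive Spark SQL again.

    `run_child` is whatever function you use to invoke the other notebook;
    its return value is passed through unchanged.
    """
    try:
        return run_child(*args, **kwargs)
    finally:
        # The setting was observed as true after the child call, so re-apply
        # the workaround before any further spark.sql(...) calls. This also
        # runs if the child call raises.
        spark.conf.set(CASE_KEY, "false")


# Example (assumed call; "ChildNotebook" is a placeholder):
# result = run_child_then_reset(spark, mssparkutils.notebook.run, "ChildNotebook", 90)
```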