Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Synapse forums

- Forums

- Get Help with Synapse

- General Discussion

- Is there a way to create an auto-increment/identit...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

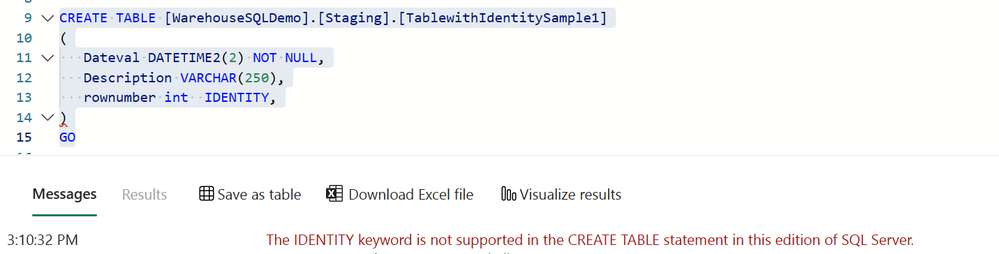

Is there a way to create an auto-increment/identity column?

Greetings, all. Checking out the new Data Warehouse option and am trying to determine what the integrated Fabric solutions offer vs. traditional SQL DB.

One of the big ones is the ability to define an Identity/auto-increment column. I see that isn't currently supported; anyone have ideas on how to achieve this seeing as how UIDs are quite important for a relational DB?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

A comment in the Comments area on this post indicates IDENTITY is planned. Not sure who the poster is.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The fascinating thing about the idea of Fabric for me was and is that you would have a common platform into which the data is loaded once, then transformed and used. Coming from the DWH world, everything happens in a DWH for me as much as possible. I very often use Identity to create a performant unique surrogate key. Since this possibility also exists in the Databricks Lakehouse, such a Lakehouse is also useful in other projects.

If Identity is not yet available in OneLake, then there are serious limitations for my way of working, for which I do not have a solution yet:

Using unique composite strings would be possible. However, this makes the final data models much larger, consuming more memory. Presumably, joining via strings instead of integers also affects performance.

A hash value on such long strings would be an extension to reduce the memory requirements of these columns somewhat. It also has the advantage of being unique and traceable.

A GUID is most likely to fulfill the function of a surrogate key.

I can't use Fabric yet anyway, because it is not supported in my regions and there is probably no way to create a Fabric capacity in a different region than the tenant. But as soon as that is possible, I want to try to migrate existing DWH to Fabric. It's going to be hard because of all the limitations.

It would be easiest if with a Fabric capacity you could also get the ability to use and create Azure SQL databases. That could also be a good sell to end customers: "Subscribe to Fabric as the egg-sucking lizard and go to the cloud with the data." I realize that Azure databases are not compatible with OneLake's technical limitations. And I also see this as more of a licensing issue. If you also want to bring masses of small customers into the cloud, then Fabric is the right approach. But if Azure comes into play with the provisioning of Azure databases, then it quickly becomes complicated and discouraging.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I suspect the part of the issue is that while GENERATED ALWAYS AS IDENTITY is part of the Databricks version of delta, it hasn't made it into the open source version of delta:

https://github.com/delta-io/delta/issues/1072

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I didn't realize that there are different variants of Lakehouse, and that a Lakehouse with Databricks is different from other Lakehouses. Then I start to understand why we don't get the usual Databricks GUI here in Fabric. And Fabric has less to do with Databricks, but much more to do with the underlying open source technologies like Delta Lake, Delta Sharing etc.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Databricks and delta Lake support Identity

https://docs.databricks.com/sql/language-manual/sql-ref-syntax-ddl-create-table-using.html

That's why I really don't understand this limitation.

Is it also not supported in the fabric Lakehouse?

If not, what kind of limited Lakehouse is the technical base of OneLake?

If it is supported in the Lakehouse, why not in the warehouse?

I have also seen, that some other common Lakehouse features are not supported in the fabric Lakehouse, for example views. Is this the reason, why we don't the "normal" Databricks GUI, but a very limited Power BI like GUI, missing so much important features?

Is it more marketing? Fabric uses OneLake for storage, which is a great idea.

It would be very helpful to see the roadmap. Is it planned to provide all Lakehouse features or not?

Which warehouse features, we are missing now, are planed to be implemented?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi ,

T-SQL language syntax capabilities of Microsoft Fabric will be constantly improved. Please refer to same link for updates on limitations.

https://learn.microsoft.com/en-us/fabric/data-warehouse/tsql-surface-area

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @arpost,

Thanks for your great question. I see Identity column is not supported in create table statement in Fabric Data warehouse option

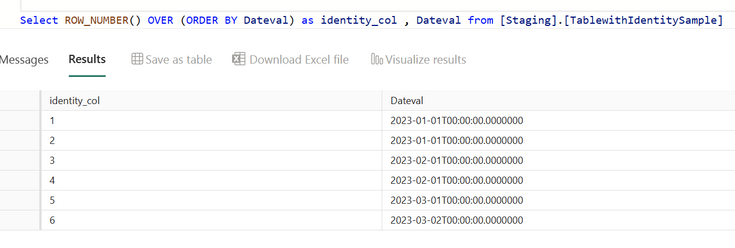

Could you please use ROW_NUMBER() function to generate the Identity/auto-increment column.

All ideas to improve Fabric can be posted using below link . Thanks in Advance

Fabric Ideas

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

row_number() is, of course, not a solution. It is fine to use in views or queries, and I often use it.

But here the question was and is, how to create a permanent unique identifier of a row in a table.

I was very surprised, when I found here https://learn.microsoft.com/en-us/fabric/data-warehouse/tsql-surface-area that identity columns are not supported. Maybe, there are technical reasons. But then I would like to know the best practices to generate a permanent unique identifier in a table - a unique surrogate key.

int or bigint datatype are common, fast and storage saving.

Maybe the calculation of a hash value in a calculated column could be used in some cases, to create a unique surrogate key, but not in General. Often we only need a simple ordinal number.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes there are a bunch of limitations with the datalake approach that were solved long ago relationally. In fact I'm just seeing datalake technologies race to catch up to what has existed for decades in relational platforms. Of course Datalake does things that relational can't do so decision to use is not simple.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

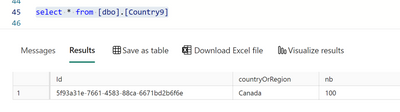

I also found many limitations on this version of SQL, not the same at all as "standard" DB.

For unique ID you can use this:

CREATE TABLE [dbo].[Country9](

[Id] [uniqueidentifier] NOT NULL,

[countryOrRegion] [varchar](100) NOT NULL,

[nb] [int] NULL)

and then new() to generate a unique guid:

INSERT INTO [dbo].[Country9]

([Id]

,[countryOrRegion]

,[nb])

VALUES

( newid()

,'Canada'

,100)

@mahes-a we need to have guidance on why do we have these limitations? is this just temporary because it's in preview, or it's designed this way?

This is documented here:

https://learn.microsoft.com/en-us/fabric/data-warehouse/table-constraints

I have found that you cannot create a primary key enforced., as documented in the reference link. I tried these scenarios:

-When trying to CREATE TABLE directly in Fabric Warehouse with PRIMARY KEY, we get this error:

The PRIMARY KEY keyword is not supported in the CREATE TABLE statement in this edition of SQL Server.

-When trying to CREATE TABLE first without primary key, then ADD CONSTRAINT PRIMARY KEY, we get this error:

Enforced constraints are not supported. To create an unenforced constraint you must include the NOT ENFORCED syntax as part of your statement.

So I am able to create a primary key but not enforced, which might help performance, but will not work well with transactional applications.

So my personal conclusion at this point is that this warehouse is more designed to support OLAP type functions, rather than typical relational OLTP functions. But maybe that will change...

Any thoughts?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

All this products meant for Lakehouses / Olap /DW (Like: Databricks, Snowflake) do not support enforcing primart keys:

"Snowflake supports defining and maintaining constraints, but does not enforce them, except for NOT NULL constraints, which are always enforced."

https://docs.snowflake.com/en/sql-reference/constraints-overview

"Informational primary key and foreign key constraints encode relationships between fields in tables and are not enforced." https://docs.databricks.com/tables/constraints.html

And yes, I believe Fabric is not meant for OLTP.

Cheers,

M

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Don_Miguel, thanks for the reply. Regarding Fabric not being meant for OLTP, that could account for this except I was under the impression based on some things MS said during Build regarding transactional operations as well as the MS docs about Fabric that Fabric is indeed intended to make OLTP possible.

Here are a couple of examples pertaining to OLTP directly or indirectly:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm finding MS doco very misleading and unclear. The OLTP link basically says nothing. The Transaction link, as far as I can tell says "Your SELECTs will honour transactions". Its not clear from that documentation whether the SQL Endpoint is read only or not.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Without a relational platform included, it's definitely not suited for OLTP. The only relational platform included is Synapse Warehouse and that is not an OLTP platform either.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

In my opinion OLTP deals with ACID and Isolation Levels, for example in a ERP system where multiple users are updating multiple records. OLTP DBs like MSSQL have multiple isolation levels, and normally for an ERP you use Read Commited (see here).

Fabric has only Isolation level, this is a great blog about it:

https://www.serverlesssql.com/transaction-isolation-in-fabric-warehouses/

Cheers,

M

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the reply, @charrington! Definitely a helpful workaround, and I'm curious to see what Microsoft says regarding your questions and echo them.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Fabric Monthly Update - March 2024

Check out the March 2024 Fabric update to learn about new features.

| User | Count |

|---|---|

| 7 | |

| 6 | |

| 5 | |

| 5 | |

| 3 |