
ISSUE::PBI DESKTOP::QUERY FOLDING TO LAKEHOUSE NOT WORKING

CONTEXT:

I have this table schema in a lakehouse:

PBI Desktop Version: 2.128.952.0 64-bit (April 2024)

ISSUE:

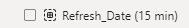

0__I am trying to group the Refresh dates into 15-minute bins, like so:

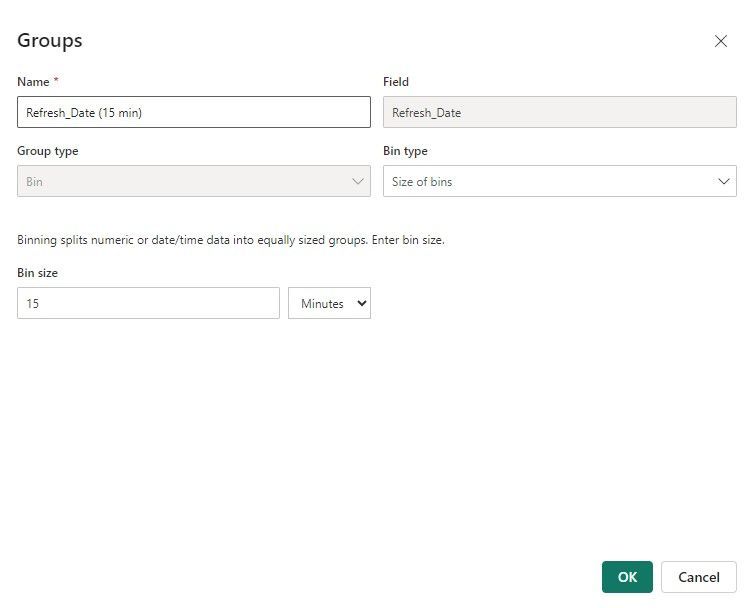

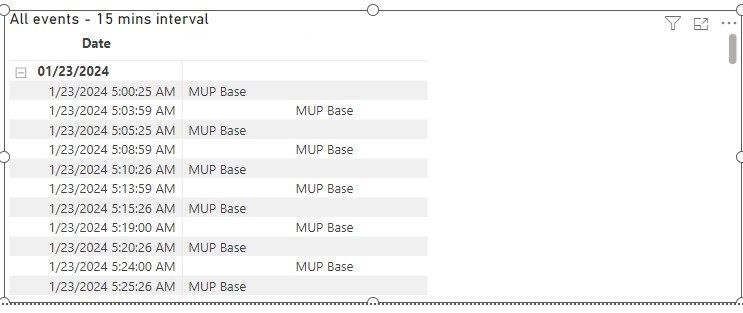

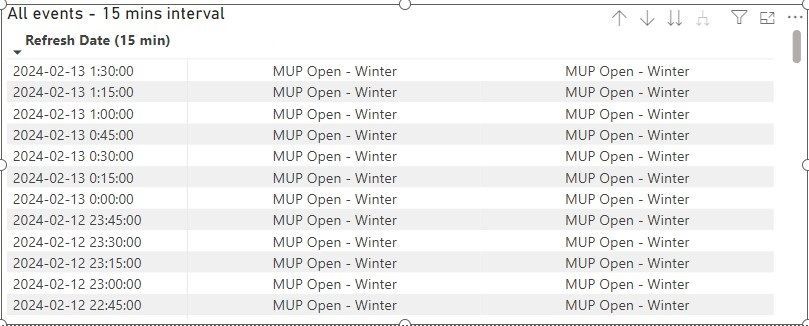

1__the visual I use is a matrix and looks like this before dropping the new field:

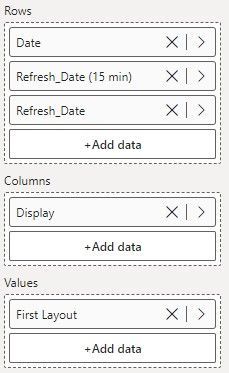

the data well looks like this:

2__after creating the group and dropping the new field into the matrix data well:

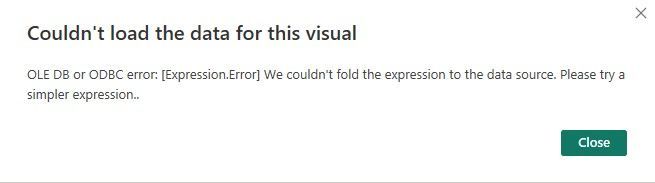

I get this error:

The matrix should look like this:

QUESTION:

Does this mean that the only way of achieving this binning/grouping would be to do it in Fabric with a DFg2 in order to avoid the query folding? This could be an issue as currently I am using DB mirroring in a pipeline to ingest the data incrementally. Not keen to switch it over to a DFg2 as it seems to be less efficient. But perhaps I am mistaken on the latter part.


@Element115, you should create the bin in the Lakehouse using Spark or a Dataflow Gen2 (using if/else), and make sure you have a Direct Lake connection. This looks like DirectQuery, which can run into issues like this.
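To illustrate what a 15-minute bin means here, below is a minimal sketch of the flooring logic in plain Python. It is not Spark or Dataflow Gen2 code, and the function name `floor_to_bin` is hypothetical. The same idea (round each timestamp down to the start of its bin) would need to be expressed in whichever engine ends up writing to the Lakehouse.

```python
from datetime import datetime


def floor_to_bin(ts: datetime, minutes: int = 15) -> datetime:
    """Return the start of the `minutes`-wide bin that contains `ts`.

    Assumption: bins are aligned to the top of the hour, so `minutes`
    must divide 60 evenly (5, 10, 15, 20, 30, 60, ...).
    """
    if minutes <= 0 or 60 % minutes != 0:
        raise ValueError("minutes must be a positive divisor of 60")
    # Drop the remainder of the minute component, then zero out the finer parts.
    floored_minute = ts.minute - (ts.minute % minutes)
    return ts.replace(minute=floored_minute, second=0, microsecond=0)


# Example: a refresh at 10:44:59 falls into the bin starting at 10:30.
example = floor_to_bin(datetime(2024, 4, 18, 10, 44, 59))
```

Storing the bin start as a precomputed column means the report only has to group on an existing field, rather than asking the source to compute the bin at query time.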



Your assessment is incorrect. Apparently it was a transient bug that went away on its own: I no longer get any error messages when I bin a field from a LH table in DirectQuery mode.


@amitchandak Do you know whether query folding would work if I used a warehouse instead of a lakehouse?


Thanks for the quick response, but I can't use a DirectLake connection as this is a report developed and maintained with PBI Desktop, which supports data access only in Import, DirectQuery, or Dual mode.

The issue I have is that the source has a binary column that needs to be transformed to type text, which can be done in a DFg2 no problem. But the thing is, I do all the initial ingest using a Copy data activity in a pipeline, and when you do this, the binary column is persisted in the LH as a binary column, same as the source.

So if I were to later use a DFg2 to transform this column to text, I would duplicate my data: once in the LH, and a second time in the default Fabric store, because I would have enabled staging.

The only solution I see at this point is to not use the new mirroring capability of the pipeline artifact and instead ingest everything only through a DFg2 which would write to the LH the binary column as a text type, while at the same time binning the data. This way no data duplication occurs.

This means I would lose the new capability of the pipeline bulk copy and consume more CUs because of the additional DFg2.

Not optimal all this.


Or just create a view at the source and base64 encode that varbinary column to varchar(MAX). Problem solved. Although it still irks that you can't fold queries to a LH, or for that matter, that a LH can't handle type time.
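For anyone unsure what the base64 step does to the data, here is a small sketch in plain Python rather than in the source database's SQL. The helper names are hypothetical. It only shows that the binary value becomes ASCII text that can be stored in a text column and decoded back without loss.

```python
import base64


def varbinary_to_text(raw: bytes) -> str:
    """Encode raw bytes as base64 ASCII text (safe to store in a text column)."""
    return base64.b64encode(raw).decode("ascii")


def text_to_varbinary(encoded: str) -> bytes:
    """Decode base64 text back to the original bytes."""
    return base64.b64decode(encoded)


# Round trip: the text form carries exactly the same information.
original = b"\x00\x01\xff"
as_text = varbinary_to_text(original)
restored = text_to_varbinary(as_text)
```

Note that base64 text is roughly a third larger than the binary it encodes, which may matter for wide columns.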
