
Can't import large dataset in desktop and service with ODBC connection

Hi community,

I'm facing an issue. I have a dataset of more than 200 million rows that grows by millions of rows daily, and I can't import the data either in Desktop to create a dataset or in the Service to create a Dataflow or Datamart.

I'm using an ODBC connection to an Amazon Redshift database.

On Desktop the data never loads or, when I try to transform the data, the evaluation never finishes in Power Query. The same happens in the Service: when I try to create a Dataflow or Datamart, the evaluation is cancelled in Power Query once it reaches the 10-minute limit. Then, when I click Save, I get a "PQO Evaluation Failed" error.

Are there any suggestions on how to deal with this issue?




Any ideas on how to import a large dataset with an ODBC connection?


There are several strategies you can try for dealing with large datasets in Power BI:

1. Use a DirectQuery connection instead of importing the data. DirectQuery sends queries to the database at report time rather than storing a copy of the data in Power BI, so far less data has to be transferred and stored. The trade-off is that reports can be slower, because every query runs against the database in real time and consumes its resources.

2. Use data sampling to reduce the amount of data that is imported or queried. Sampling loads a random subset of the rows rather than the entire dataset. This is useful for testing and prototyping, because you work with a smaller, more manageable dataset.

3. Use incremental refresh so that each refresh loads only new or modified rows. You define a refresh policy, and each scheduled refresh then reloads only the rows added or changed since the last refresh rather than the entire dataset. This is more efficient for large datasets that grow over time, because less data has to be processed on every refresh.

4. Use data modeling techniques to optimize the data for querying and analysis. Techniques such as indexing, partitioning, and columnstore indexes can improve query performance on large datasets by optimizing how the data is stored and accessed.
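To make the incremental refresh strategy above more concrete, here is a minimal Python sketch of the date-window filter it relies on. `RangeStart` and `RangeEnd` are the parameter names Power BI reserves for incremental refresh. The helper functions, the sample rows, and the three-day window are illustrative assumptions, and the service manages its partitions in a more elaborate way than this.

```python
from datetime import date, datetime, timedelta


def refresh_window(today: date, refresh_days: int) -> tuple[datetime, datetime]:
    """Return (range_start, range_end) covering the last `refresh_days` complete days."""
    range_end = datetime.combine(today, datetime.min.time())  # midnight at the start of today
    range_start = range_end - timedelta(days=refresh_days)
    return range_start, range_end


def rows_to_reload(rows, range_start, range_end):
    """Keep rows whose timestamp satisfies range_start <= ts < range_end."""
    return [row for row in rows if range_start <= row["ts"] < range_end]


# Hypothetical sample rows, for illustration only.
rows = [
    {"id": 1, "ts": datetime(2024, 4, 1, 9, 0)},
    {"id": 2, "ts": datetime(2024, 4, 8, 12, 30)},
    {"id": 3, "ts": datetime(2024, 4, 9, 23, 59)},
    {"id": 4, "ts": datetime(2024, 4, 10, 0, 0)},
]

start, end = refresh_window(date(2024, 4, 10), refresh_days=3)
print([row["id"] for row in rows_to_reload(rows, start, end)])  # prints [2, 3]
```

The half-open comparison (`>=` on the start, `<` on the end) matters: it keeps a row that falls exactly on a boundary from being loaded into two adjacent windows.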


Thanks for your time, @MAwwad. I have tried all the steps you mention, and they are not what I need.

1. DirectQuery is not an option for ODBC.

2, 3, 4. Not my issue. I want to import and load all my data; incremental refresh comes after that.


Hi, did you find a solution to this problem? I am facing the same issue with the initial import of huge datasets into Power BI.

Please let me know your findings.


I created a filtered .pbix file that contains only a snapshot of the data, set up incremental refresh on it, then published and refreshed it. That created the partitions.

Then you need to use external tools such as SQL Server Management Studio (SSMS) and ALM Toolkit to remove the filter and refresh the partitions.

Check this video:

https://youtu.be/5AWt6ijJG94

Another way is to create a parameter that filters the data, then change or remove it in the dataset settings in the Power BI service.
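The thread does not show the script used to refresh the partitions, so the following is only a sketch of what that step could look like. It builds one TMSL `refresh` command per partition, which could then be pasted into a query window in a tool such as SQL Server Management Studio connected to the dataset. The database, table, and partition names are hypothetical placeholders, and connecting over the XMLA endpoint is an assumption about the setup.

```python
import json


def build_partition_refresh(database: str, table: str, partitions: list[str]) -> list[str]:
    """Return one TMSL refresh command (as a JSON string) per partition."""
    commands = []
    for name in partitions:
        command = {
            "refresh": {
                "type": "full",
                "objects": [
                    {"database": database, "table": table, "partition": name}
                ],
            }
        }
        commands.append(json.dumps(command, indent=2))
    return commands


# Hypothetical dataset, table, and partition names; use the ones the
# service actually created for your incremental refresh policy.
scripts = build_partition_refresh("LargeModel", "Events", ["2022", "2023Q1"])
for script in scripts:
    print(script)
```

Refreshing one partition per command keeps each operation small, which is the point of the approach when a single full load of the table does not complete.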


Can you explain this in detail, please?

"One other way is to create a parameter to filter the data and change it or remove it from the dataset settings on Power BI service."

Where in the Power BI Service do I remove the filter?


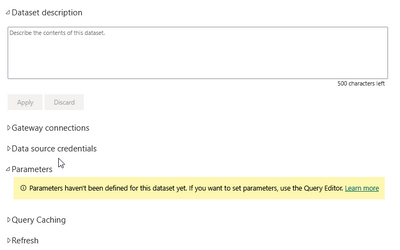

If you create a date parameter in Power Query and filter your data according to it, when the report is published you will be able to see the parameter here and change its value:
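As an alternative to the settings page, a published parameter can also be changed through the Power BI REST API (the "Update Parameters In Group" operation). The sketch below only builds the request URL and JSON body and does not send anything. The workspace ID, dataset ID, parameter name `StartDate`, and its value are hypothetical placeholders, and obtaining an access token for the `Authorization` header is assumed to happen elsewhere.

```python
import json

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_update_parameters_request(group_id: str, dataset_id: str,
                                    parameters: dict[str, str]) -> tuple[str, str]:
    """Return the POST URL and JSON body that set new dataset parameter values."""
    url = f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}/Default.UpdateParameters"
    body = {
        "updateDetails": [
            {"name": name, "newValue": value} for name, value in parameters.items()
        ]
    }
    return url, json.dumps(body)


# Hypothetical IDs and parameter name, for illustration only.
url, body = build_update_parameters_request(
    group_id="<workspace-id>",
    dataset_id="<dataset-id>",
    parameters={"StartDate": "2015-01-01"},
)
print(url)
print(body)
```

The new value is only picked up by the next refresh of the dataset, so widening the date filter this way still has to be followed by a refresh.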


Thanks for your reply. We use a Pro license, so this will not work in our case.

Anyway, thanks for your prompt response.
