FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- DAX Commands and Tips

- Re: a more efficient way to do running totals: why...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

a more efficient way to do running totals: why is DAX not efficient at doing this?

A common problem that recurs in forums is the calculation of running totals. There are many videos and posts that provide solutions for the particular use case requested.

In general the answer works like this:

- there's a table that has one column containing a Value, another with a Rank (the order of the row in the table). Often the Rank is a date, or datetime.

- use a calculated column to provide a row context or use an iterator function in a measure

- use calculate to sum all values up to the current row by acting on the table of Values that has been filtered so that it only include rows with Ranks before the current row in the row context.

I can't be alone in thinking this is a horribly inefficient way of doing things. In the 100th row of the table, the SUM function is adding up 100 Values. By the time we get to the 10,000th row, there are 10,000 rows to add up. For big tables, processing takes hours.

Surrely we just need to find the value of the Running Total in the PREVIOUS row for the row being currently calculated and add it to the Value in current row. The number of calculations scales linearly with table size, rather than exponentially.

I've use R before for data analysis and the widely-used DPLYR package by Hadley et al. For a table in DPLYR you can access a value from the row before or the row after using the functions 'Lag' and 'Lead'. I'm no expert in SQL, but there's a similar LEAD() function that allows code to access rows offset from the current row. Similar functions would surely be useful in DAX.

I'd appreciate someone from Microsoft posting a sensible reply to this. Are we going to get a function that in DAX that returns the value of a column in the NEXT or PREVIOUS row (within a row context)?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi.

I'm not from M$ but... you are wrong thinking that this calculation is inefficient 🙂 It's very efficient even though it's a brute-force approach. But Power BI and DAX work mostly like that, using a brute-force approach. There are 2 engines that do calculations in PBI: the Storage Engine and Formula Engine. The Storage Engine is multithreaded, simple and extremely fast. If you write your DAX to mostly use this engine, the brute-force approach will be extremely fast. Please read about the two engines. There are also vids on YT by Marco and Alberto that dive deeper into these topics. Also, it's worth reading their book (if you haven't yet)...

By the way, there is a function (unofficial yet but is coming) called "offset." One can use it even now in DAX, even though there's no intellisense for it yet. It's like the "offset" function in Excel and "lag," or "lead," in SQL.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the reply daXtreme. I think we're agreeing with each other. You call it a 'brute force' approach, while I used the word 'inefficient'.

The algorithm that we currently have to use to calculate running totals is without any doubt inefficient. If you were doing a running total with pen and paper, you'd add the amount in the current row to the running total in the previous row and write the result in the running total column. If the 'offset' function you mentioned enables a similar process in DAX then it's good. That was my point.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

Yes, indeed, "offset" is supposed to make such calculations easier for the end user/coder and - hopefully! - faster. However, the current approach is not inefficient because the engine could be, or is, smart enough to know that, for each cell, it has to add one more number to what it has already calculated. To know for sure how it's done you'll have to read the physical (and logical) DAX plan (similar to what SQL produces when it wants to exec a query). Even though the plan can easily be obtained from DAX Studio, it takes a bit of knowledge and experience to read it correctly and thus know what's going on under the hood.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

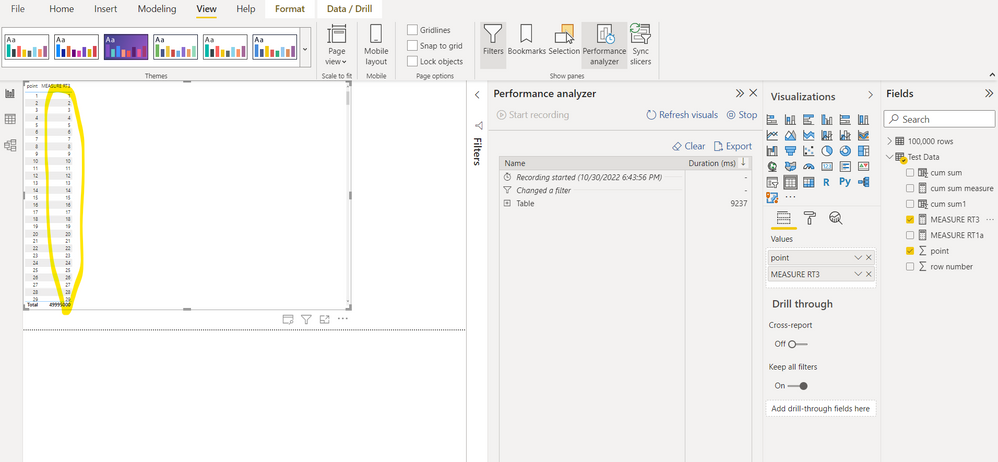

I'm a scientist by training, so I believe the results of experiments over the predictions of theories.

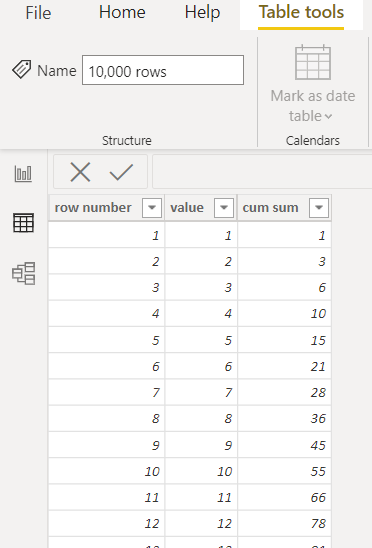

So I decided to test your hypothesis that PBI would be able to run the calculations for a cumulative sum efficiently. To do this I set up a simple table in Excel. The first column is the row number. The second value column is the series of numbers 1 to 10,000. The cumulative sum of the value column should then be the set of triangular numbers: {1,3,6,10,15...}.

I tried a calculate column for the cumulative sum, for which of course there's a row context. I timed the column calculate using my phone and I got a little over 19 seconds.

The code for the calculated column was this:

I then tried using a measure and the performance analyzer. I set up a table visual with all 10,000 rows showing. I then dragged in a measure that performs the cumulative sum:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here are the findings.

Here's the query that I was benchmarking in DAX Studio.

DEFINE

MEASURE 'Test Data'[Running Total] =

var CurrentPoint = MAX( 'Test Data'[Point] )

var Output =

CALCULATE(

SUMX(

'Test Data',

'Test Data'[Point]

),

'Test Data'[Point] <= CurrentPoint

)

return

Output

MEASURE 'Test Data'[Running Total 2] =

SUMX(

ALL( 'Test Data' ),

'Test Data'[Point] * ('Test Data'[Point] <= MAX( 'Test Data'[Point] ))

)

MEASURE 'Test Data'[Running Total 3] =

var CurrentPoint = MAX( 'Test Data'[Point] )

var PrecomputedRTForAllPoints =

ADDCOLUMNS(

'Test Data',

"@RT",

var CurrentPoint = 'Test Data'[Point]

return

SUMX(

FILTER(

'Test Data',

'Test Data'[Point] <= CurrentPoint

),

'Test Data'[Point]

)

)

var Output =

MAXX(

FILTER(

PrecomputedRTForAllPoints,

'Test Data'[Point] = CurrentPoint

),

[@RT]

)

return

Output

MEASURE 'Test Data'[Running Total 4] =

var GeneratedRTForAllPoints =

GENERATE(

'Test Data',

var CurrentPoint = 'Test Data'[Point]

var RT =

SUMX(

FILTER(

'Test Data',

'Test Data'[Point] <= CurrentPoint

),

'Test Data'[Point]

)

return

row( "@RT", RT )

)

var CurrentPoint = MAX( 'Test Data'[Point] )

var Output =

MAXX(

FILTER(

GeneratedRTForAllPoints,

'Test Data'[Point] = CurrentPoint

),

[@RT]

)

return

Output

EVALUATE

SUMMARIZECOLUMNS(

'Test Data'[Point]

, "Running Total", [Running Total]

//, "Running Total 2", [Running Total 2]

//, "Running Total 3", [Running Total 3]

//, "Running Total 4", [Running Total 4]

)

ORDER BY

'Test Data'[Point] ASC

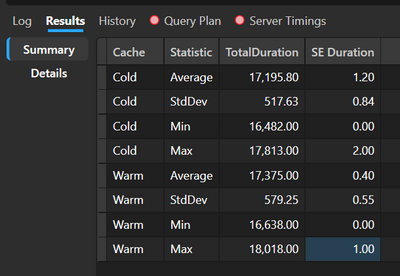

The first (naive) measure took 17 s on average.

The second, also brute-force, measure took 10 sec on average.

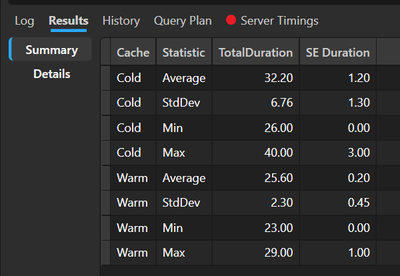

The third one, also brute-force, took... well, between 25 and 32 MILISECONDS on average.

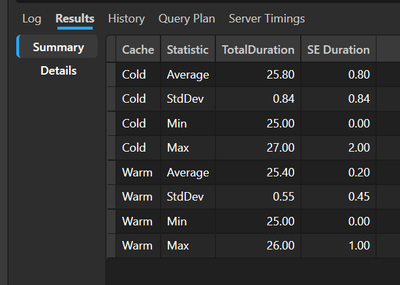

The last one, also brute-force, took... 25 miliseconds on average and this was consistently stable for the warm and cold cache.

You can find the results in the pictures below.

Well, does this blow your mind yet? 🙂

Cheers!

For the 4th measure (the clear winner):

For the 3rd measure:

For the 1st measure:

Sadly, this editor messed up my pictures and my code. So the 3rd measure is not shown but it was 7 secs on average as mentioned above.

Once again, a brute-force method can be as fast as 25 miliseconds on such a dataset if you write your DAX the right way.

Cheers.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for you work on this DaXtreme! I'm trying to get my head round what you've done.

I've just tried your measures in Power BI as I don't have DAX studio installed (it's a work computer, and I can't access the admin permission to install this on a Sunday).

I copied your 3rd measure and had to change the name. I also wasn't allowed to use '[@RT]' - it rejected the use of '@' as a special character, so I just deleted it. In the end, the code I deployed was this:

MEASURE RT3 =

var CurrentPoint = MAX( 'Test Data'[Point] )

var PrecomputedRTForAllPoints =

ADDCOLUMNS(

'Test Data',

"RT",

var CurrentPoint = 'Test Data'[Point]

return

SUMX(

FILTER(

'Test Data',

'Test Data'[Point] <= CurrentPoint

),

'Test Data'[Point]

)

)

var Output =

MAXX(

FILTER(

PrecomputedRTForAllPoints,

'Test Data'[Point] = CurrentPoint

),

[RT]

)

return

Output

When I added this measure to the table of numbers 1 through to 10,000 , I didn't get the running total, rather just the original series of numbers {1,2,3,4...}

The calculate showed over 9000 ms on the analyzer.

I couldn't get your 4th measure to work.

I confess I'm a little lost here. It's not clear to me how your measure RT3 produced the times you gave. My DAX isn't good enough I'm afraid.

I think it's wise to revert to my first point: when calculating a running total in DAX I'd like to access a value in the previous column. If I could do this then the code should work quickly AND be easy to read.

thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous

Well, I can also show you a screenshot from Power BI itself. The run times are just like the ones that come from DAX Studio. But I did this in DS because I could run this without the visual's rendering overhead and could benchmark the measures via DS native tools with the automation of the number of runs to get means and standard deviations. It just so happens that it's not necessary at all to be able to access the row before and the brute-force approach is more than good enough to make your code as fast as lightning! That's good news, I guess? 🙂

One remark, though. My computer is a beast (Alienware R17) and this obviously had an impact on the numbers. But the relative run times should still stand on any computer, of course.

Please send me a link to your file so that I can see what will happen on my machine. By the way, it's a pity you didn't unfold the "Table" entry in Performance Analyzer. Because of this we can't see the breakdown of run times 😞

Cheers.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

DaXtreme, as I mentioned before, I couldn't get the result I wanted (a running total) using your measure #3. I just got the original series of numbers 1,2,3,4 etc.

I had to make some changes. I think you named a column [@RT]. My version of PowerBI didn't allow the '@' character.

I'd be grateful if you could post a reply with the code that you successfully used in Power BI that worked well so that I can then try it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous

OK. You were right that the 2 last measures do not return the cumulative sum. I've found the bug 🙂 Please find a file with all the measures (corrected!) attached. I was too optimistic because the code was calculating a different thing. I need to research more to find out a way to make it faster. But for the time being... the fastest run I could squeeze out of it is about 11-12 secs. That's obiously not good enough.

Here's the link to the file but I've attached the file itself as well.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

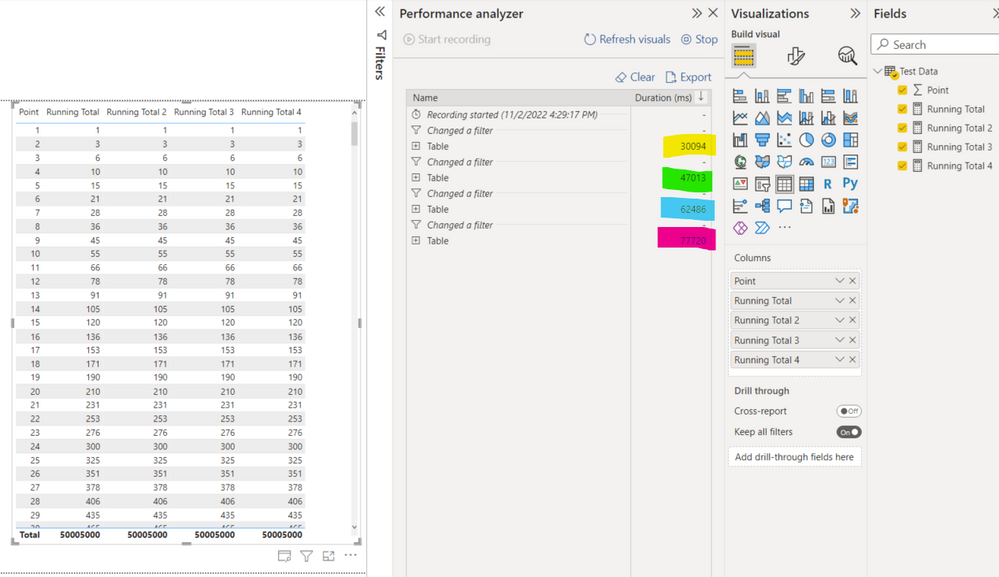

DaXtreme, sorry I got a little tied up. I just downloaded your file and tried it on my machine.

- I set up a table and placed the 'point' value (1 to 10,000) in a column.

- I then added the 4 measures you created one at a time onto the table with the performance analyser running

The results are not encouraging. see screenshot here:

- The yellow highlighted figure was a little over 30,000 ms for measure #1

- The green highlighted figure was a little over 47,000 ms was for measure #2

- The blue highlighted figure was over 62,000 ms for measure #3

- Measure #4 performed worse at nearly 78,000 ms

These figures demonstrate my point. Calculating a cumulative sum without access to the cumulative sum number from the previous row in the table is not efficient.

I spoke to the IT manager in my place. He doesn't know Power BI / DAX, but he does know SQL. I explained the issue to him. He couldn't believe that DAX didn't have a way to access the value in the previous row.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi there.

Well, I'm a math'cian by edu and BI Dev (or something similar) by prof 🙂 Hi there. Your code is not written efficiently. You should never put a full table as a filter in CALCULATE. This puts a lot of burden on the engine and hence the run times are awful. I'll run your tests with efficient code for the "ineficcient" algorithm and see what happens 🙂

Bear with me...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

apologies for everyone reading this - it seems the first 3 letters of the word 'cumulative' have been picked up by the sensors and replaced by **bleep**

🙂

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!

| User | Count |

|---|---|

| 9 | |

| 9 | |

| 8 | |

| 6 | |

| 6 |

| User | Count |

|---|---|

| 21 | |

| 20 | |

| 20 | |

| 14 | |

| 14 |