Efficiently Processing Massive Data Volumes using Microsoft Fabric: A Comprehensive Guide

Introduction

In this article, you will learn how to process massive volumes of data using Microsoft Fabric.

Prerequisites

- Sample Datasets: Link

- Enable Fabric for your organization

- Fabric capacity and user license: Microsoft Fabric License, Buy a Fabric capacity

- A virtual machine with the on-premises data gateway installed: Install On Premises data gateway

Overview

This architectural overview shows the Fabric artifacts that we will use during the process.

The architecture above follows the medallion architecture guidelines. The medallion lakehouse architecture organizes data in a Lakehouse into distinct layers (commonly bronze, silver, and gold), following best practices for data management and processing.

Procedure Overview

1. Create a Lakehouse to store the raw data and the enriched data in Delta format.

2. Create a Data pipeline to copy the raw data from an on-premises data source to the Lakehouse.

3. Create a Notebook to transform the raw data and build the staging tables.

4. Create a Dataflow Gen2 to normalize the staging tables.

5. Create a Power BI semantic model and build the report.

Procedure

Create a Lakehouse

1. Open app.powerbi.com or app.fabric.microsoft.com and create a new Fabric workspace. Make sure the workspace is hosted in a Fabric or Premium capacity.

2. Click the Power BI icon at the bottom left and switch to the Data Engineering persona. Click the Lakehouse icon to create a new Lakehouse, enter a name, select the sensitivity label, and click Create.

3. As soon as you click Create, a Lakehouse, a SQL analytics endpoint, and a default semantic model are created automatically.

Click the three dots icon next to the SQL analytics endpoint and click ‘Copy SQL analytics endpoint’. Save the value (for example, in Notepad); you will use it later when connecting the Dataflow Gen2 to the Lakehouse.

Before creating the pipeline:

- Make sure your raw data files are on an on-premises server.

- Create an on-premises gateway connection:

a. Open app.fabric.microsoft.com, click the gear icon at the top right, and select ‘Manage gateways and connections’.

b. Click the ‘+New’ button at the top left; a new connection pane opens on the right.

c. Fill in all the details of your data source and click ‘Create’.

Note: You can only create an on-premises gateway connection after the on-premises data gateway machine has been configured. Refer to the link in the Prerequisites section for more information.

Create a Data pipeline

1. Open your Fabric workspace, switch to the Data Engineering persona, and click the Data pipeline icon to create a new data pipeline.

2. Enter a name for your pipeline, select the appropriate sensitivity label, and click Create.

3. Open the pipeline, click the ‘Copy data’ drop-down in the top menu, and select ‘Add to canvas’.

4. Select the added ‘Copy data’ activity. On the ‘General’ tab, give the activity a meaningful name.

5. Switch to the ‘Source’ tab and select the on-premises gateway connection from the ‘Connection’ drop-down. Select the ‘Recursively’ check box so that files in subfolders are copied as well. If you want the files deleted from the on-premises server as soon as they have been moved to the Lakehouse, also select ‘Delete files after completion’.

6. Switch to the ‘Destination’ tab, select the Lakehouse you created earlier from the ‘Connection’ drop-down, and set the ‘File format’ to Binary.

7. Run the pipeline, check the run status, and make sure the files were copied to the Lakehouse.
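To make the three Copy data settings above concrete (recursive source, binary format, delete after completion), here is a small local sketch of their semantics using Python's standard library. It is not how the Fabric Copy activity is implemented, and the function name `copy_binary_tree` and its parameters are hypothetical; it only illustrates what each option changes.

```python
import shutil
from pathlib import Path


def copy_binary_tree(source: Path, destination: Path, recursive: bool = True,
                     delete_after_copy: bool = False) -> list[Path]:
    """Copy files byte-for-byte from source to destination.

    recursive: also walk subfolders (like the 'Recursively' option).
    delete_after_copy: remove each source file once it has been copied
        (like 'Delete files after completion').
    Returns the destination paths that were written.
    """
    pattern = "**/*" if recursive else "*"
    copied = []
    # sorted() materializes the file list first, so deleting files is safe.
    for src_file in sorted(source.glob(pattern)):
        if not src_file.is_file():
            continue
        # Keep the folder structure relative to the source root.
        target = destination / src_file.relative_to(source)
        target.parent.mkdir(parents=True, exist_ok=True)
        # Binary copy: the bytes are copied as-is and never parsed.
        shutil.copyfile(src_file, target)
        copied.append(target)
        if delete_after_copy:
            src_file.unlink()
    return copied
```

Deleting each file only after its own copy succeeds mirrors the intent of the option: a file is never removed from the source unless it reached the destination.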

Now that the data has been copied to the Fabric Lakehouse, in the following section we will create a Fabric notebook to transform the data and build the staging tables.

Create a Notebook

1. Switch to the Synapse Data Engineering persona and click the Notebook icon.

2. Select the appropriate sensitivity label and click ‘Save and continue’.

3. Open the notebook and, in the left-hand panel, click ‘Add’ to link a Lakehouse.

4. Because the Lakehouse was created in the previous steps, choose ‘Existing Lakehouse’ and select it.

5. Import the libraries required to transform the raw data, initialize a variable with the relative path of the raw data folder in the Lakehouse, and define a schema variable that lists the columns in the CSV files.

6. Run the Spark code below to load the data from the CSV files into Spark DataFrames and create the staging Delta tables.

7. Make sure the Delta table was created. You can verify it by expanding the Tables folder in the left-hand panel.
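The Spark code for steps 5 and 6 is not reproduced here. As an engine-agnostic illustration of the same idea (declare an explicit schema, then apply it while loading every CSV file under the raw folder), here is a standard-library Python sketch. The column names in `SALES_SCHEMA` and the helper names are hypothetical. In the notebook itself you would typically express the schema as a PySpark `StructType`, read the files with `spark.read.csv(..., schema=..., header=True)`, and write the result with `.write.format("delta").saveAsTable(...)`.

```python
import csv
import io
from datetime import date
from decimal import Decimal
from pathlib import Path

# Hypothetical schema for the raw sales CSV files: column name -> converter.
SALES_SCHEMA = {
    "OrderID": int,
    "OrderDate": date.fromisoformat,
    "Product": str,
    "Category": str,
    "Quantity": int,
    "UnitPrice": Decimal,
}


def parse_rows(text: str, schema: dict) -> list[dict]:
    """Parse CSV text that has a header row and cast each column via the schema."""
    reader = csv.DictReader(io.StringIO(text))
    # Fail early if a file does not contain every column the schema expects.
    missing = set(schema) - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"CSV is missing columns: {sorted(missing)}")
    # Keep only the schema columns, converted to their declared types.
    return [{col: convert(row[col]) for col, convert in schema.items()}
            for row in reader]


def load_staging(raw_folder: Path, schema: dict) -> list[dict]:
    """Load every *.csv under raw_folder (recursively) into one list of typed rows."""
    rows = []
    for path in sorted(raw_folder.rglob("*.csv")):
        rows.extend(parse_rows(path.read_text(encoding="utf-8"), schema))
    return rows
```

Declaring the schema up front, rather than inferring it, keeps types stable across runs and surfaces malformed files immediately instead of in downstream tables.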

With the raw data transformed, let's normalize the fact table using Dataflow Gen2.

Create a Dataflow Gen2

1. Switch to the Data Factory persona and click the Dataflow Gen2 icon.

2. Click ‘Get data’ and select SQL Server. Enter the Lakehouse SQL analytics endpoint you copied earlier in the ‘Server’ field and click Next. Select the ‘Staging_Sales’ table created earlier and click Next.

3. Following your business logic, and keeping the downstream analytics solutions in mind, normalize the staging table into dimension and fact tables.

4. At the bottom right, you will find an option to add a data destination. Click ‘Add’, select the Lakehouse, enter the table name, and click Next. Choose Append or Replace (overwrite) as the update method, depending on your data pipeline strategy.

5. Select the appropriate data type for each column and click ‘Save settings’.

6. Repeat steps 4 and 5 for each of the other tables, then run the dataflow.

7. Once the data refresh has completed, open the Lakehouse or the SQL analytics endpoint to validate the newly created Delta tables.
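Step 3 depends on your own business logic, so the following is only an illustration of what "normalizing into dimensions and facts" means: a standard-library Python sketch that moves a set of descriptive columns out of a denormalized staging table into a dimension table with a surrogate key, leaving a fact table that references that key. The function name and the column names are hypothetical. In Dataflow Gen2 you would build the same result with Power Query steps (for example, selecting the dimension columns and removing duplicates, then merging the key back into the fact query).

```python
def normalize(staging_rows: list[dict], dim_columns: tuple[str, ...],
              key_name: str) -> tuple[list[dict], list[dict]]:
    """Split denormalized rows into (dimension_rows, fact_rows).

    dim_columns: columns that describe the dimension and move out of the fact.
    key_name: name of the surrogate key column added to both tables.
    """
    dimension: dict[tuple, int] = {}  # unique dimension values -> surrogate key
    facts = []
    for row in staging_rows:
        dim_values = tuple(row[c] for c in dim_columns)
        # Assign the next integer key the first time a combination appears.
        if dim_values not in dimension:
            dimension[dim_values] = len(dimension) + 1
        # The fact row keeps its measures and gets the key instead of the text.
        fact = {c: v for c, v in row.items() if c not in dim_columns}
        fact[key_name] = dimension[dim_values]
        facts.append(fact)
    dim_rows = [{key_name: key, **dict(zip(dim_columns, values))}
                for values, key in dimension.items()]
    return dim_rows, facts
```

For example, three sales rows for "Pen/Office", "Desk/Furniture", and "Pen/Office" produce a two-row product dimension, and the fact rows refer to products by key only, which is the shape the semantic model relationships later rely on.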

Note: To reduce the run time of the dataflow, use the ‘Native SQL’ option and put the transformation logic inside the SQL statement. For example, in the query below, instead of retrieving the whole sales table and then performing the distinct operation in the dataflow, we included the DISTINCT operation in the SQL statement itself.
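The original query is not reproduced here. To show why pushing DISTINCT into the SQL statement helps, the sketch below uses Python's built-in `sqlite3` module as a stand-in for the SQL analytics endpoint and contrasts two approaches: fetching every row and de-duplicating on the client, versus letting the database run `SELECT DISTINCT` and return only the unique rows. The table contents and column names are hypothetical; only the pattern carries over.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory table standing in for the Staging_Sales table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Staging_Sales (OrderID INTEGER, Product TEXT, Category TEXT)")
    conn.executemany(
        "INSERT INTO Staging_Sales VALUES (?, ?, ?)",
        [(1, "Pen", "Office"), (2, "Desk", "Furniture"), (3, "Pen", "Office")],
    )
    return conn


def products_client_side(conn: sqlite3.Connection) -> list[tuple]:
    """Transfer every row, then de-duplicate in the client (the slow pattern)."""
    rows = conn.execute("SELECT Product, Category FROM Staging_Sales").fetchall()
    return sorted(set(rows))


def products_pushed_down(conn: sqlite3.Connection) -> list[tuple]:
    """Let the database de-duplicate, so only unique rows are transferred."""
    return conn.execute(
        "SELECT DISTINCT Product, Category FROM Staging_Sales ORDER BY Product"
    ).fetchall()
```

Both functions return the same product list, but the first one moves every sales row across the connection before discarding duplicates. On a large fact table that transfer dominates the refresh time, which is the reason for the advice above.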

Consider truncating the staging tables and archiving the raw data files in the Lakehouse as soon as the dataflow refresh has completed. Subsequent runs then do not reprocess data that has already been loaded, which can reduce their run time and cost.
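A minimal sketch of that post-load housekeeping follows. It assumes a hypothetical layout with a raw folder and an archive folder that gets one dated subfolder per run, and it uses `sqlite3` as a stand-in for a staging table. In a Fabric notebook the equivalent would typically be a `DELETE FROM` on the staging Delta table plus moving the files with the notebook's file-system utilities; the function names here are illustrative only.

```python
import shutil
import sqlite3
from datetime import date
from pathlib import Path


def archive_raw_files(raw_folder: Path, archive_root: Path, run_date: date) -> int:
    """Move every file under raw_folder into archive_root/<run_date>/,
    keeping the subfolder structure. Returns the number of files moved."""
    target_root = archive_root / run_date.isoformat()
    moved = 0
    # Build the full list first so moving files does not disturb the walk.
    for src in sorted(p for p in raw_folder.rglob("*") if p.is_file()):
        target = target_root / src.relative_to(raw_folder)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(src), str(target))
        moved += 1
    return moved


def truncate_staging(conn: sqlite3.Connection, table: str) -> None:
    """Remove all rows from a staging table but keep the table definition."""
    # Table names cannot be bound as SQL parameters, so accept only
    # plain identifiers to avoid injecting arbitrary SQL.
    if not table.isidentifier():
        raise ValueError(f"unexpected table name: {table!r}")
    with conn:  # commits on success, rolls back on error
        conn.execute(f"DELETE FROM {table}")
```

Archiving into a dated folder, rather than deleting, keeps the raw files available if a load ever has to be replayed.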

Create a Power BI Semantic model and build the Power BI Report

1. Switch to Power BI persona open the Lakehouse and on top you will find an option to create a ‘New semantic model’.

2. Enter the name for your semantic model and select all the production grade non staging tables and click on confirm.

3. Open the new semantic model and establish the relationships between the dimension tables and fact tables.

4. After completing the necessary data modelling, create appropriate measures for building the dashboard.

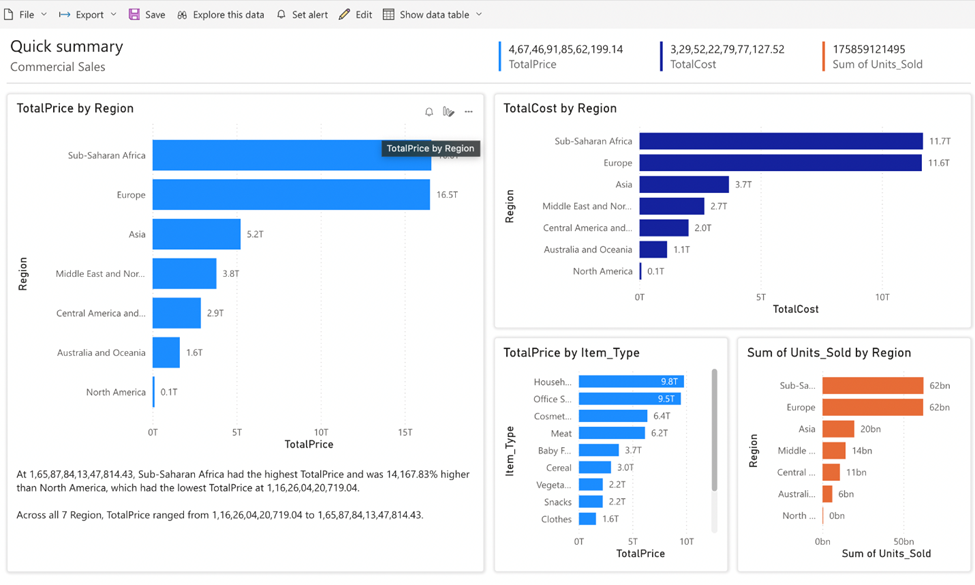

5. Click on the new report button on the top to create the reports as per the A sample report would look like this

Data Refresh

To refresh all the key data artifacts, the best option is to orchestrate all the activities in the data pipeline instead of scheduling them individually or triggering them manually.

In the pipeline created in the previous steps, add the notebook, dataflow, and semantic model refresh activities, then schedule the pipeline. You can also add failure alerts so that you are notified by email as soon as any activity in the pipeline fails.
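The control flow this describes is a chain: each activity runs only after the previous one succeeds, and any failure stops the run and triggers the alert. The sketch below models that flow in plain Python. The activity names, `run_pipeline`, and the `notify` callback are hypothetical placeholders rather than Fabric APIs; in Fabric you would build the same shape on the pipeline canvas by connecting activities with 'On success' dependencies and routing 'On fail' paths to an email notification activity.

```python
from typing import Callable

# An activity is a display name plus a zero-argument callable that raises on failure.
Activity = tuple[str, Callable[[], None]]


def run_pipeline(activities: list[Activity],
                 notify: Callable[[str, Exception], None]) -> list[str]:
    """Run activities in order, stopping at the first failure.

    notify(name, error) is called once for the activity that failed.
    Returns the names of the activities that completed successfully.
    """
    succeeded = []
    for name, action in activities:
        try:
            action()
        except Exception as exc:  # any failure ends the run, like an 'On fail' path
            notify(name, exc)
            return succeeded
        succeeded.append(name)
    return succeeded
```

Stopping at the first failure matters here: refreshing the semantic model after a failed dataflow would publish stale or partial data to the report.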
