- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Community Blog

- Power BI Community Blog

- An in depth look at the Automated ML entities in P...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Automated ML integration with Power BI dataflows allows training and applying Binary Prediction, General Classification and Regression models. The ML models are internally represented as specially marked dataflow entities. I’ll describe how the ML related entities are defined in M language and how they can be edited using the Power Query editor.

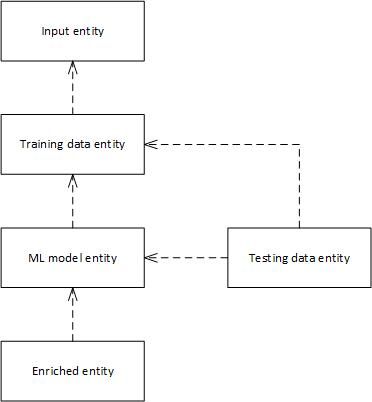

The following diagram illustrates the entities generated during the ML process and the dependencies between them:

As you can see above the process of training an ML model creates 2 additional entities besides the model itself: training data entity and testing data entity.

Training data entity

Let's take an example of training a Binary Prediction model on an entity named “Customers” with selected columns “City”, State”, “CreditCardBalance”, “WebEngagementScore” and “CustomerStatus”. The training data entity will get created with the following M definition:

let

Source = Customers,

#"Selected columns" = Table.SelectColumns(Source, {"City", "State", "CreditCardBalance", "WebEngagementScore", "CustomerStatus"}),

#"Removed nulls" = Table.SelectRows(#"Selected columns", each [CustomerStatus] <> null),

#"Sampled input" = AI.SampleStratifiedWithHoldout("CustomerStatus", Table.RowCount(#"Removed nulls"), #"Removed nulls")

in

#"Sampled input"Note that the training entity does two things:

- Removes all rows that have nulls in the “historical outcome field” selected during model creation in the UI (here “CustomerStatus”). A supervised machine learning model can only learn from rows which have the outcome field set.

- Performs sampling of records of that entity using the AI.SampleStratifiedWithHoldout function. AI.SampleStratifiedWithHoldout takes the following parameters:

- historical outcome field name (text)

- number of records in the input table (number)

- the input table (data).

- Desired sample size (number) as an optional 4th parameter

- Desired ratio (number) between training and test holdout as an optional 5th

It returns a table with the same columns as the input table plus a boolean “__IsTraining__” column indicating if a given record should be used for training (true) or testing (false).

ML model entity

The model entity would get created with M definition of:

let

Source = #"CustomerChurnModel Training Data",

#"Invoked TrainPrediction" = AIInsights.Contents(){[Key = "AI.Execute"]}[Data]("AI.TrainPrediction", "Regular", [labelColumnName = "CustomerStatus", data = Source]),

#"Selected training schema columns" = Table.SelectColumns(#"Invoked TrainPrediction", {"TrainingId", "Model", "Stats", "GlobalExplanation", "TrainingSchema", "TrainingAUC", "LabelColumn"})

in

#"Selected training schema columns"It uses the training data entity as input and invokes the “AI.TrainPrediction” transform on the AI workload of your premium capacity. Note that the invocation could be written in a simpler form:

#"Invoked TrainPrediction" = AIInsights.Contents(){[Key = "AI.TrainPredictiontexttable"]}[Data]("CustomerStatus", Source)AIInsights.Contents() returns a table of AI transforms supported by the AI workload with columns such as: Name, Data (the actual M function invoking the transform), Key (unique identifier of the transform). That list includes transforms generated for Azure ML services you have access to and Cognitive Services. The “AI.Execute” transform used in the query generated by the “Add ML model” wizard is a wrapper that allows calling other transforms by passing parameters in a record. That allows adding new optional parameters to existing transforms without breaking existing models with queries generated without passing values for those parameters.

The model entity created by the AI.TrainPrediction transform is currently a table with a single record. This table also contains “Global explanations” for the model. The report works on top of these explanations. In the future there may be multiple records corresponding to versions of the model.

Sample output of AI.TrainPrediction:

Testing data entity

The testing data entity allows you to explore the records used by AutoML for model evaluation and hyperparameter tuning. For the above scenario its generated M definition would be:

let Source = #"CustomerChurnModel Training Data", #"Filtered rows" = Table.SelectRows(Source, each ([__IsTraining__] = false)), #"Invoked Scoring" = CustomerChurnModel.Score(#"Filtered rows", "CustomerChurnModelOutput", 0.5) in #"Invoked Scoring"

It uses the training data entity as input and selects rows where the “__IsTraning__” column added during sampling is set to false. It then applies the scoring function defined as a separate query:

let

ApplyScoringFunction = (inputQuery as table, newColumn as text, decisionThreshold as number) => let

MlModel = CustomerChurnModel,

MlModelJson = try Text.FromBinary(Json.FromValue(MlModel{0})) otherwise "InvalidModel",

Source = inputQuery,

SelectedBaseEntityColumns = {"City", "State", "CreditCardBalance", "WebEngagementScore", "CustomerStatus"},

InputRowCount = Table.RowCount(Source),

InputTableType = Value.Type(Source),

SelectedColumnsTypes = List.Transform(SelectedBaseEntityColumns, each Type.TableColumn(InputTableType, _)),

ScoringFunction =

let

ScoringFunctionScalarType = type function (row as record) as any,

VectorizedScoringFunction = (input as table) =>

let

ExpandedColumns = Table.ExpandRecordColumn(input, "row", SelectedBaseEntityColumns),

ExpandedColumnsWithTypes = Table.TransformColumnTypes(ExpandedColumns, List.Zip({SelectedBaseEntityColumns, SelectedColumnsTypes})),

ErrorList = List.Repeat({[Output = null]}, InputRowCount),

Result = if MlModelJson <> "InvalidModel" then (try Table.ToRecords(AIInsights.Contents(){[Key = "AI.Execute"]}[Data]("AI.ScorePrediction", "Vectorized", [data = ExpandedColumns, scoreParameters = MlModelJson])) otherwise ErrorList) else ErrorList

in

Result,

ScalarVectorScoringFunction = Function.ScalarVector(ScoringFunctionScalarType, VectorizedScoringFunction)

in

ScalarVectorScoringFunction,

AddScoringColumn = Table.AddColumn(Source, newColumn, each ScoringFunction(_)),

ExpandResultColumns = Table.ExpandRecordColumn(AddScoringColumn, newColumn, {"PredictionScore", "PredictionExplanation"}, {Text.Combine({newColumn, "PredictionScore"}, "."), Text.Combine({newColumn, "PredictionExplanation"}, ".")}),

LabeledOutput = Table.AddColumn(ExpandResultColumns, Text.Combine({newColumn, "Outcome"}, "."), each Record.Field(_, Text.Combine({newColumn, "PredictionScore"}, ".")) >= decisionThreshold * 100),

ReplacedErrors = Table.ReplaceErrorValues(LabeledOutput, {{Text.Combine({newColumn, "Outcome"}, "."), null}, {Text.Combine({newColumn, "PredictionScore"}, "."), null}, {Text.Combine({newColumn, "PredictionExplanation"}, "."), null}}),

TransformTypes = Table.TransformColumnTypes(ReplacedErrors, {{Text.Combine({newColumn, "Outcome"}, "."), type logical}, {Text.Combine({newColumn, "PredictionScore"}, "."), type text}, {Text.Combine({newColumn, "PredictionExplanation"}, "."), type text}})

in

TransformTypes

in

ApplyScoringFunctionNote that the decision threshold value passed by the generated testing data entity is 0.5. The scoring function invokes the “AI.ScorePrediction” transform on the AI workload of your premium capacity passing the ML model and the input table. On output 3 new columns get added: Outcome (Boolean), PredictionScore and PredictionExplanation.

Training occurs during dataflow refresh

The “Add ML model” wizard creates the previously defined entities in the dataflow, but it’s only during refresh that the training actually occurs and the resulting model is materialized in the model entity in the dataflow store (Azure Data Lake). After training the model can be applied to another entity with matching schema.

Model application

Applying the trained ML model to an entity, let’s say “NewCustomers” entity, will result in creation on another entity with appended word “enriched” and ML model name, e.g. “NewCustomers enriched CustomerChurnModel”. The definition of such enriched entity would be:

let Source = NewCustomers, #"Invoked CustomerChurnModel.Score" = CustomerChurnModel.Score(Source, "CustomerChurnModel", 0.5) in #"Invoked CustomerChurnModel.Score"

The enriched entity definition uses the same scoring function as the test data entity.

Please note: The application of the model to an entity also occurs during dataflow refresh when the enriched entity is materialized in the dataflow store.

Marek Rycharski | Principal Software Engineer at Microsoft Power BI (Artificial Intelligence) team

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

- Dynamic rollback of the previous N weeks of data

- Supercharge Your Visuals: Easy Conditional Formatt...

- The using of Cartesian products in many-to-many re...

- How to Filter similar Columns Based on Specific Co...

- Power BI Dynamic Date Filters: Automatically Updat...

- Enhanced Data Profiling in Power Query: GUI and Ta...

- How to use Tooltip to display breakdown data for a...

- Unveiling the Power of Lakehouse's SQL Analytics E...

- [DAX] Time Intelligence vs WINDOW vs OFFSET

- Display data in a fixed order and show data for th...

- joseftantawi on: How to customize open-sourced custom visual.

- kalpeshdangar on: Creating Custom Calendars for Accurate Working Day...

- gwayne on: Embracing TMDL Functionalities in Power BI and Pre...

- jian123 on: Sharing Power Query tables

-

Martin_D

on:

From the Desk of An Experienced Power BI Analyst

Martin_D

on:

From the Desk of An Experienced Power BI Analyst

-

ibarrau

on:

[PowerQuery] Catch errors in a request http

ibarrau

on:

[PowerQuery] Catch errors in a request http

- Aditya07 on: How to import customised themes in Power BI - usin...

-

Martin_D

on:

Currency Conversion in Power BI: Enabling Seamless...

Martin_D

on:

Currency Conversion in Power BI: Enabling Seamless...

-

technolog

on:

Unveiling Top Products with categories: A Guide to...

technolog

on:

Unveiling Top Products with categories: A Guide to...

-

Ritaf1983

on:

When Big Numbers Become Big Problems

Ritaf1983

on:

When Big Numbers Become Big Problems

-

How To

573 -

Tips & Tricks

526 -

Support insights

121 -

Events

107 -

DAX

66 -

Power BI

65 -

Opinion

64 -

Power Query

62 -

Power BI Desktop

40 -

Power BI Dev Camp

36 -

Roundup

31 -

Power BI Embedded

20 -

Time Intelligence

19 -

Tips&Tricks

18 -

PowerBI REST API

12 -

Power Query Tips & Tricks

8 -

finance

8 -

Power BI Service

8 -

Direct Query

7 -

Power BI REST API

6 -

Auto ML

6 -

financial reporting

6 -

Data Analysis

6 -

Power Automate

6 -

Data Visualization

6 -

Python

6 -

Featured User Group Leader

5 -

Dax studio

5 -

Income Statement

5 -

powerbi

5 -

service

5 -

Power BI PowerShell

5 -

Machine Learning

5 -

RLS

4 -

M language

4 -

External tool

4 -

Paginated Reports

4 -

Power BI Goals

4 -

PowerShell

4 -

Desktop

4 -

Bookmarks

4 -

Group By

4 -

Line chart

4 -

community

4 -

Data model

3 -

Conditional Formatting

3 -

Visualisation

3 -

Administration

3 -

M code

3 -

SQL Server 2017 Express Edition

3 -

Visuals

3 -

R script

3 -

Aggregation

3 -

Dataflow

3 -

calendar

3 -

Gateways

3 -

R

3 -

M Query

3 -

R visual

3 -

Webinar

3 -

CALCULATE

3 -

Reports

3 -

PowerApps

3 -

Data Science

3 -

Azure

3 -

Custom Visual

2 -

VLOOKUP

2 -

pivot

2 -

calculated column

2 -

Power BI Premium Per user

2 -

inexact

2 -

Date Comparison

2 -

Split

2 -

Forecasting

2 -

REST API

2 -

Editor

2 -

Working with Non Standatd Periods

2 -

powerbi.tips

2 -

Custom function

2 -

Reverse

2 -

measure

2 -

Microsoft-flow

2 -

Paginated Report Builder

2 -

PUG

2 -

Custom Measures

2 -

Filtering

2 -

Row and column conversion

2 -

Python script

2 -

Nulls

2 -

DVW Analytics

2 -

Industrial App Store

2 -

Week

2 -

Date duration

2 -

parameter

2 -

Weekday Calendar

2 -

Support insights.

2 -

construct list

2 -

Formatting

2 -

Power Platform

2 -

Workday

2 -

external tools

2 -

slicers

2 -

SAP

2 -

index

2 -

RANKX

2 -

Integer

2 -

PBI Desktop

2 -

Date Dimension

2 -

Power BI Challenge

2 -

Query Parameter

2 -

Visualization

2 -

Tabular Editor

2 -

Date

2 -

SharePoint

2 -

Power BI Installation and Updates

2 -

How Things Work

2 -

troubleshooting

2 -

Date DIFF

2 -

Transform data

2 -

rank

2 -

ladataweb

2 -

Tips and Tricks

2 -

Incremental Refresh

2 -

Query Plans

2 -

Power BI & Power Apps

2 -

Random numbers

2 -

Day of the Week

2 -

Number Ranges

2 -

M

2 -

hierarchies

2 -

Power BI Anniversary

2 -

Language M

2 -

Retail

1 -

Power BI Report Server

1 -

School

1 -

Cost-Benefit Analysis

1 -

ISV

1 -

Ties

1 -

unpivot

1 -

Practice Model

1 -

Continuous streak

1 -

ProcessVue

1 -

Create function

1 -

Table.Schema

1 -

Acknowledging

1 -

Postman

1 -

Text.ContainsAny

1 -

Power BI Show

1 -

query

1 -

Dynamic Visuals

1 -

KPI

1 -

Intro

1 -

Icons

1 -

Issues

1 -

function

1 -

stacked column chart

1 -

ho

1 -

ABB

1 -

KNN algorithm

1 -

List.Zip

1 -

optimization

1 -

Artificial Intelligence

1 -

Map Visual

1 -

Text.ContainsAll

1 -

Tuesday

1 -

API

1 -

Kingsley

1 -

Merge

1 -

variable

1 -

financial reporting hierarchies RLS

1 -

Featured Data Stories

1 -

MQTT

1 -

Custom Periods

1 -

Partial group

1 -

Reduce Size

1 -

FBL3N

1 -

Wednesday

1 -

help

1 -

group

1 -

Scorecard

1 -

Json

1 -

Tops

1 -

Multivalued column

1 -

pipeline

1 -

Path

1 -

Yokogawa

1 -

Dynamic calculation

1 -

Data Wrangling

1 -

native folded query

1 -

transform table

1 -

UX

1 -

Cell content

1 -

General Ledger

1 -

Thursday

1 -

Power Pivot

1 -

Quick Tips

1 -

data

1 -

PBIRS

1 -

Usage Metrics in Power BI

1 -

HR Analytics

1 -

keepfilters

1 -

Connect Data

1 -

Financial Year

1 -

Schneider

1 -

dynamically delete records

1 -

Copy Measures

1 -

Friday

1 -

Table

1 -

Natural Query Language

1 -

Infographic

1 -

automation

1 -

Prediction

1 -

newworkspacepowerbi

1 -

Performance KPIs

1 -

Active Employee

1 -

Custom Date Range on Date Slicer

1 -

refresh error

1 -

PAS

1 -

certain duration

1 -

DA-100

1 -

bulk renaming of columns

1 -

Single Date Picker

1 -

Monday

1 -

PCS

1 -

Saturday

1 -

Q&A

1 -

Event

1 -

Custom Visuals

1 -

Free vs Pro

1 -

Format

1 -

Current Employees

1 -

date hierarchy

1 -

relationship

1 -

SIEMENS

1 -

Multiple Currency

1 -

Power BI Premium

1 -

On-premises data gateway

1 -

Binary

1 -

Power BI Connector for SAP

1 -

Sunday

1 -

update

1 -

Slicer

1 -

Visual

1 -

forecast

1 -

Regression

1 -

CICD

1 -

sport statistics

1 -

Intelligent Plant

1 -

Circular dependency

1 -

GE

1 -

Exchange rate

1 -

Dendrogram

1 -

range of values

1 -

activity log

1 -

Decimal

1 -

Charticulator Challenge

1 -

Field parameters

1 -

Training

1 -

Announcement

1 -

Features

1 -

domain

1 -

pbiviz

1 -

Color Map

1 -

Industrial

1 -

Weekday

1 -

Working Date

1 -

Space Issue

1 -

Emerson

1 -

Date Table

1 -

Cluster Analysis

1 -

Stacked Area Chart

1 -

union tables

1 -

Number

1 -

Start of Week

1 -

Tips& Tricks

1 -

deployment

1 -

ssrs traffic light indicators

1 -

SQL

1 -

trick

1 -

Scripts

1 -

Extract

1 -

Topper Color On Map

1 -

Historians

1 -

context transition

1 -

Custom textbox

1 -

OPC

1 -

Zabbix

1 -

Label: DAX

1 -

Business Analysis

1 -

Supporting Insight

1 -

rank value

1 -

Synapse

1 -

End of Week

1 -

Tips&Trick

1 -

Workspace

1 -

Theme Colours

1 -

Text

1 -

Flow

1 -

Publish to Web

1 -

patch

1 -

Top Category Color

1 -

A&E data

1 -

Previous Order

1 -

Substring

1 -

Wonderware

1 -

Power M

1 -

Format DAX

1 -

Custom functions

1 -

accumulative

1 -

DAX&Power Query

1 -

Premium Per User

1 -

GENERATESERIES

1 -

Showcase

1 -

custom connector

1 -

Waterfall Chart

1 -

Power BI On-Premise Data Gateway

1 -

step by step

1 -

Top Brand Color on Map

1 -

Tutorial

1 -

Previous Date

1 -

XMLA End point

1 -

color reference

1 -

Date Time

1 -

Marker

1 -

Lineage

1 -

CSV file

1 -

conditional accumulative

1 -

Matrix Subtotal

1 -

Check

1 -

null value

1 -

Report Server

1 -

Audit Logs

1 -

analytics pane

1 -

mahak

1 -

pandas

1 -

Networkdays

1 -

Button

1 -

Dataset list

1 -

Keyboard Shortcuts

1 -

Fill Function

1 -

LOOKUPVALUE()

1 -

Tips &Tricks

1 -

Plotly package

1 -

refresh M language Python script Support Insights

1 -

Excel

1 -

Cumulative Totals

1 -

Report Theme

1 -

Bookmarking

1 -

oracle

1 -

Canvas Apps

1 -

total

1 -

Filter context

1 -

Difference between two dates

1 -

get data

1 -

OSI

1 -

Query format convert

1 -

ETL

1 -

Json files

1 -

Merge Rows

1 -

CONCATENATEX()

1 -

take over Datasets;

1 -

Networkdays.Intl

1 -

Get row and column totals

1 -

Sameperiodlastyear

1 -

Office Theme

1 -

matrix

1 -

bar chart

1 -

Measures

1 -

powerbi argentina

1 -

Model Driven Apps

1 -

REMOVEFILTERS

1 -

XMLA endpoint

1 -

translations

1 -

OSI pi

1 -

Parquet

1 -

Change rows to columns

1 -

remove spaces

1 -

Azure AAD

1 -

Governance

1 -

Fun

1 -

Power BI gateway

1 -

gateway

1 -

Elementary

1 -

Custom filters

1 -

Vertipaq Analyzer

1 -

powerbi cordoba

1 -

DIisconnected Tables

1 -

Sandbox

1 -

Honeywell

1 -

Combine queries

1 -

X axis at different granularity

1 -

ADLS

1 -

Primary Key

1 -

Microsoft 365 usage analytics data

1 -

Randomly filter

1 -

Week of the Day

1 -

Get latest sign-in data for each user

1